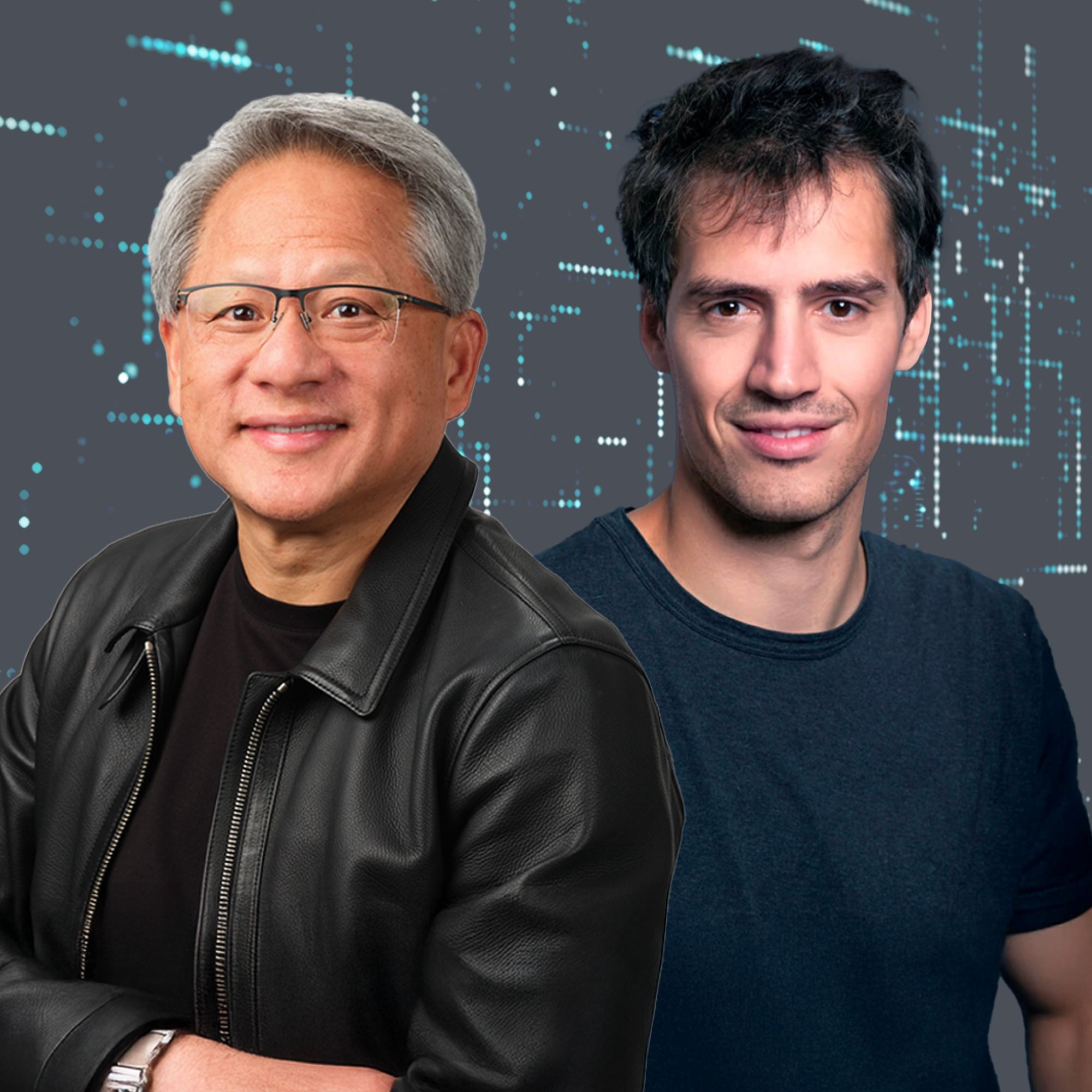

Jensen Huang and Arthur Mensch on Winning the Global AI Race

a16z Podcast

This is the greatest force of reducing the technology divide the world's ever known. It will have an impact on GDP of every country in the double digits in the coming years. Nobody's going to do this for you. You've got to do it yourself. It's up to organizations, to enterprises, to countries to build what they need.

The stakes at play are basically the equivalent of modern digital colonialization. AI isn't just computing infrastructure, it's also cultural infrastructure.

The race for AI dominance is not constrained to companies; it is increasingly capturing the attention of countries. And that includes the infrastructure spanning every layer of the stack: the chips, the models, the applications, plus the energy required to run these "AI factories," the talent needed to produce them, and well-designed policy that helps, not hinders, this entire ecosystem. And all of this together is turning critical.

Setup is always hard. This is no different. The only question is, do you need to do it? If you want to be part of the future, and this is the most consequential technology of all time, not just our time, of all time, digital intelligence, how much more valuable, how much more important can it be?

In today's episode, we explore sovereign AI and this regional race for AI infrastructure across countries big and small. And there is truly no one better to discuss this than our guests, Jensen Huang and Arthur Mensch.

Jensen, of course, is the inimitable co-founder and longtime CEO of NVIDIA, a company known for its constant reinvention and ability to place critical bets like the GPU or graphics processing unit that has propelled it to be one of the largest companies at over $3 trillion in market cap as of this recording. Of course, the products that NVIDIA makes like the GPU are also the backbone to so much of our digital world today.

Arthur, on the other hand, is the co-founder and CEO of Mistral, a leading AI lab that focuses on customizable open source frontier models, but also a growing number of tools to help companies and even countries engage with AI. Today, Arthur and Jensen sit down with a16z general partner Anjney Midha as they explore the role of digital intelligence at the nation level and how countries should think about ownership, codifying their culture, and the role that open source should play.

All right, let's get started. As a reminder, the content here is for informational purposes only, should not be taken as legal, business, tax, or investment advice, or be used to evaluate any investment or security, and is not directed at any investors or potential investors in any a16z fund. Please note that a16z and its affiliates may also maintain investments in the companies discussed in this podcast. For more details, including a link to our investments, please see a16z.com/disclosures.

Today we're talking about sovereign AI, all things national infrastructure and open source. So let's just start with the first question I usually get from nation state leaders, which is, is AI actually a general purpose technology?

In the history of humanity, we've had maybe a handful of these, 22 or 24. Economists use the term for specific technologies that accelerate economic progress broadly across society: electricity, the printing press.

And the question everybody's asking right now is, is that the right way to think about AI? Or why isn't AI just another important but ultimately narrow technology?

I think it's a general purpose technology because it basically revisits entirely the way we are building software and the way we are using machines. And so in the same way that the internet was a general purpose technology, AI is a general purpose technology here.

It allows you to build agents that are doing things on your behalf. And in that respect, it can be used in any vertical of the industry. It can be used for services, for public services. It can be used to change the life of citizens. It can be used for agriculture. It can obviously be used for defense purposes. So it covers everything that a state needs to worry about. And so in that respect, it's very natural that any state makes it a priority and gives it a dedicated national AI strategy.

By the way, everything Arthur said is 100% correct. It is also exactly the reason why everybody's given up. And it's precisely wrong. And the reason for that is this. If it's a general purpose technology and one company can build the ultimate general purpose technology, then why should anybody else do it?

And that is the flaw. Right. But that's also the mind trick to convince everyone that intelligence is only something that a few people ought to go build. Everybody ought to sit back and wait for it. I would advise that everybody engage AI, and it is not just a few companies in the world who should build it. Everybody should build it. Nobody's going to care about the Swedish culture and the Swedish language and the Swedish people and the Swedish ecosystem more than Sweden.

Nobody's going to care about the ecosystem of Saudi Arabia more than Saudi Arabia. And nobody's going to care about Israel more than Israel, despite the fact that the technology is general purpose. And absolutely true. How could intelligence not be general purpose? It is also hyper-specialized. And the reason for that is because, let's face it, I don't think I'm waiting around for a general purpose chatbot to be an expert in a particular area of disease. I still think that I would prefer to have somebody who is hyper-specialized in that field to fine-tune, to train, and post-train, if you will, an AI model that's going to be specialized in that.

It's a general purpose technology the same way a programming language is a general purpose technology.

And in addition to that, it's also a culture-carrying technology. So I think what that means is that there's an infrastructure. There are chips that obviously not every country is going to build. There are general purpose models, like base models, compressions of the web, that are eventually going to be open source and that can serve as the right basis for constructing specialized systems. But beyond that, I think it's up to organizations, to enterprises, to countries to build what they need.

So the way to make it work is to take a general purpose model, like an open source model, for instance, and to get the knowledge you have specifically, or ask your citizens or ask your employees to distill their knowledge into the systems, into the agents that are going to be working on your behalf, so that progressively those agents become more accurate in following the instructions and the specifications that the country or an enterprise may have. So you need vertical experts, or you need cultural experts, or you need people with a certain national agenda, to partner with technological companies that can expose the open source infrastructure in a way that is easy to use and in a way that is easy to specialize. So I think that's where the frontier lies. It's a very horizontal technology. To make anything useful out of it, you need the partnership between the horizontal providers and the vertical experts.

But unlike previous general purpose technology waves in history, like electricity or the printing press, how is this one different? If I'm a nation state leader and I'm trying to understand what the right framework is for me to think about AI in my country, should I think about it like digital labor? Should I think about it as akin to bridges?

I think it's similar to electricity in the sense that it will have an impact on GDP of every country in the double digits in the coming years. So that means that from an economic point of view, every nation needs to worry about it, because if they don't manage to set up infrastructure, to set up their own sovereign capacities at the right place, that means that this is money that might flow back to other countries. So that's changing the economic equilibrium across the world.

In that sense, it's not very different from electricity. A hundred years ago, if you weren't building electricity factories, you were preparing yourself to buy it from your neighbors, which at the end of the day isn't great because it creates some dependencies. I think in that sense, it is similar. What is fairly different, I think there's two things. First of all, it's kind of an amorphous technology. If you want to create digital labor with it, you need to shape it. You need to have infrastructure, talent, and software. And the talent needs to be created locally. I think this is quite important. And the reason for that is that in contrast with electricity, this is a content-producing technology. So you have agents that are producing content, that are producing text, producing images, producing voice, interacting with people.

And when you're producing content and interacting with society, you become a social construct. And in that respect, a social construct carries the culture and values of either an enterprise or a country. And so if you want those values not to disappear and not to depend on a central provider, you need to engage with it more profoundly than you would with electricity, for instance.

Would you agree with that, Jensen?

A couple of ways to think about it.

Your country's digital intelligence is not likely something you would want to outsource to a third party without some consideration. Your digital intelligence is just now a new infrastructure for you. Your telecommunications, your healthcare, your education, your highways, your electricity. This new layer is your digital intelligence. It's your responsibility to decide how you want this digital intelligence to evolve, and whether you want to outsource it so that you never have to worry about intelligence again, or whether this is something that you feel you want to engage, maybe even control and shape into a national infrastructure. Of course, it has all the things that Arthur said: AI factories, infrastructure, et cetera.

There's another way you could think about it: as your digital workforce. Now this is a new layer, and you've got to decide whether the digital workforce of your country or your company is something that you decide to outsource and hope it evolves the way that you would like it to, or is it something that you want to engage, maybe even decide to control, and nurture and make better. We hire general purpose employees all the time. We hire them out of school. Some of them are more general purpose than others. Some of them are more intelligent than others. But once they become our employees, we decide to onboard them, train them, guardrail them, evaluate them, continuously improve them. We make the investment necessary to make general purpose intelligence into super intelligence that we could benefit from.

And so I think that that second layer, thinking about it as digital workforce, in both cases, it contributes to the national economy. In both cases, it contributes to social advance. In both cases, it contributes to the culture.

And I think that in both cases, a country needs to play a very active role in it. And so I think it's back to your original question about sovereign AI, how to think about it. Yes, it is definitely a general purpose technology, but you have to decide how to shape it. Your country's digital data belongs to you. Your national library, your history, for so long as you want to digitize it, you could make it available to everybody in the world. You could also make it available to companies or researchers and institutions in your own country. It belongs to you.

Of course, these are all vaporous things. They're very soft ideas, but it does belong to you. And you could decide it belongs to you in the sense that this is where you came from. You could decide how to put it to use for the benefit of your people. And it belongs to you in the sense that it's your responsibility to shape its future. Sovereign AI. It's your responsibility.

There are several other types of assets that nation states fund and protect: the military, your electricity grid. Let's say I've understood now the criticality of AI infrastructure and sovereign AI. Do I have to now take control of every part of the stack?

So Jensen mentions, I guess, digital workforce. Right. And I think it's a very good analogy that you need an onboarding platform for your AI workforce.

Which means you need to be able to customize the models and pour the knowledge that is sitting in your national libraries into the model so that it suddenly speaks your language better. You need to get your systems to know about your laws so that the guardrails that are set when you're deploying AI software are compliant. And so that onboarding platform, which lets you customize, guardrail, evaluate, and then, when you notice that certain things need to be improved, fix things and debug things, that's the platform that we are building.

Being able to deploy systems that are easy to tune and working with these platform providers to do the custom systems. And once the custom systems are made, it's important to be able to maintain them yourselves. So that means being able to deploy them on your own infrastructure, being able to ask your technological partners to potentially disappear from the loop.

Your IT department is going to become the HR department of your digital workforce. And they're going to use these tools that Arthur describes to onboard AIs, fine-tune AIs, guardrail them, evaluate them, continuously improve them. Right. And that flywheel will be managed by the modern version of the IT department. Right. And we'll have a biological workforce and we'll have a digital workforce. It's fantastic.

And so nobody's going to do this for you. You've got to do it yourself. That's why even though we have so many technology companies in the world, every company still has their own IT department. I've got my own IT department. I'm not going to outsource it to somebody else. In the future, they'll be even more important to me because they'll be helping us manage these digital workforces. You're going to do this in every country. You're going to do this in every company within those countries. And so the space for what Arthur is describing, to take this general purpose technology but to really fine-tune it into domain experts, whether they're national experts or industrial experts or corporate experts or functional experts, this is the future, the giant future space of AI.

So you both said something that I just want to make sure I'm understanding correctly. You called it a soft concept like your culture, and you said there are a bunch of norms that the training data has that you customize the models on. You said norms. That exactly means it's soft versus rules, which are more hard. Or algorithms and laws, which are very specific. Right.

There's different things that you want to incorporate into your AI systems. There are some elements of style and of knowledge that you're not going to enforce through strict guardrails, that you can enforce through continuous training of models, for instance. You take preferences and you distill it into the models themselves. And then you have a set of laws, you have a set of policies if you're in a company, and those are strict.

And so usually the way you build it is that you connect the models to the strict rules and you make sure that every time it answers, you verify that the rules are respected. On one side, you're pouring and compressing knowledge in a soft way into the models. And on the other side, you're making sure that you have a certain number of policies and rules that are strictly enforced and that have 100% accuracy. So on one side, this is soft, this is preference, this is culture.

Preference. Somebody's preference is multidimensional. You know, what you prefer, it depends. It's implicit many times in communication. There are just so many features that define my preference. It takes AI to be able to precisely comply with the description that Arthur was giving just now. Could you imagine if a human had to write this in Python, describe every one of these, capture every one of these things in C++? Based on this, I prefer that. But if you did that, I prefer that other thing. I mean, the number of rules would be insane. Which is the reason why AI has the ability to codify all of this. It's a new programming model that can deal with the ambiguity of life.

Well, it sounds like you're saying AI isn't just computing infrastructure. It's also cultural infrastructure. Yes, it is. Is that right? And it's about making sure that your cultural infrastructure and the human expertise that is in your company or in your country make it to the AI systems. Right. Culture reflects your values. We were just talking about how each one of these AI models, AI services, responds differently to the types of questions it's asked. Right. Because they codify culture, the values of their service or the values of their company, into each one of their services.

Could you imagine this now amplified at an international scale?

This is an inherent limitation of centralized AI models, where you're thinking that you can encode some universal values and some universal expertise into a general purpose model. At some point, you need to take the general purpose model and ask a specific population of employees or of citizens, what are their preferences and what are their expectations? And you need to make sure that you're specializing the model in a soft way and in a hard way, through rules and through culture and preferences. And so that part is not something that you can outsource as a country. It's not something that you can outsource as an enterprise. You need to own it.

Well, then is it an exaggeration to say, if it is cultural infrastructure and I don't own sovereignty of it, the stakes at play are basically the equivalent of modern digital colonialization? If you're saying, Anj, you've got to think about AI as almost like your digital workforce, and another country or somebody who's not my sovereign nation can decide what my workforce can and can't do, that's a problem.

Some of it is universal. For example, it is possible for certain companies to serve nations and society and companies around the world because it's basically universal. But it cannot be the only digital intelligence layer. It has to be augmented by something regional.

You know, I think McDonald's is pretty good everywhere. All right. Kentucky Fried Chicken is pretty good everywhere. But you still want the local style, local taste that augments on top of that. The last mile. That's right. The local cafes, the mom and pop restaurants, because it defines the culture. Right. It defines society. It defines us. I think it's terrific that you have Walmart everywhere, that you can count on everywhere. You know, I think it's fine. But you need to have local taste, local style, local preference, local excellence, local services.

Let me swing it another way. It is very likely that in the context of our digital workforce in the future, we will have some digital workers which are generic. They're just really good at doing maybe basic research or something basic. Good college level graduate. Or they're useful for every company. It's unnecessary for me to create something new. I think Excel is pretty good.

Microsoft Office is universally excellent. Right. I'm perfectly fine with it. Good reference architecture base. That's right. Right. Then there's industry-specific tools. Right. Industry-specific expertise that is really important. For example, we use Synopsys and Cadence. Arthur doesn't have to because it's specific to our industry, not his.

We probably both use Excel. Probably both use PDFs. We both use browsers. And so there's some universal things that we can all take advantage of. And there'll be universal digital workers that we can take advantage of. And then there'll be industry specific. And then there'll be company specific. Inside our company, we have some special skills that are very important to us, that define us. It's highly biased, if you will, very guardrailed to doing very specific work, highly biased to the needs and the specialties of our company. And so we become superhuman in those areas.

Well, your digital workforce is going to be the same, and AI is going to be the same. There'll be some that you just take off the shelf. The new search will likely be some AI. The new research will probably be some AI. But then there'll be industrial versions of AIs that we'll maybe get from Cadence and others. And then we'll have to groom our own using Arthur's tools. Right. And we'll have to fine-tune them. We'll onboard them. We'll make them incredible.

I very much agree with this vision of having a general purpose model and then some layer of specialization for industries and then an extra layer of specialization for companies. You will have a tree of AI systems that are more and more specialized. And maybe to give a concrete example with what we recently did: we released in January a model called Mistral Small, and it's a general purpose model. So it speaks all of the languages, it knows mostly about most things. But then what we did is that we took it and we started a new family of specialized models that are specialized in languages. So we took more data in Arabic, more data in Indian languages, and we retrained the model. And so we distilled this extra knowledge that the initial model hadn't seen. And so in doing that, we actually made it much, much better at being idiomatic when it speaks Arabic and when it speaks languages from the Indian peninsula. And so language is probably the first thing you can do when you're specializing a model.

The good thing is that for a given size of model, you can get a model that is much better if you choose to specialize it in a language. So today, our model, which is the 24B, it's called Mistral Saba, it's a model tuned in Arabic, is outperforming every other language model that is like five times larger. And the reason for that is that we did the specialization.

And so that's the first layer. And then if you think of the second layer, you can think of verticals. So if you want to build a model which is not only good at Arabic, but also good at handling legal cases in Saudi Arabia, for instance, well, you need to specialize it again. So there's some extra work that needs to be done in partnership with companies to make sure that not only your system is good at speaking a certain language, but it's good at speaking a certain language and understanding the legal work that is done in this language.

And so it's true for any combination that you can think of, of vertical and language. I see. You want to have a medical diagnosis assistant in French. Well, you need to be good at French, but you also need to understand how to be good at speaking the French language of physicians. Right. And so those two things, it's very hard to do as a general purpose model provider.

If this is true, and what you're describing is real, that I need the capabilities to customize this AI layer on my local norms, my local data, which is fairly sophisticated from a technical capability perspective, how would you advise a big nation to think about the stack we're talking about: the chips, the compute, the data center, the models that sit on top, the applications, and then ultimately what you were describing as the AI nurse or the AI doctor?

And how would you advise someone that's a smaller nation differently?

I would say you need to buy and to set up the horizontal part of the stack. So you need the infrastructure, you need the inference primitives, you need the customization primitives, you need the observability, you need the ability to connect guardrails to models, to connect models to sources of information, of real-time information. Those are primitives that are fairly well factorized across the different countries, across the different enterprises.

And once you have that, and these are things that can be bought, then you can start working. Then you can start building. You build from these primitives according to your values, according to your expertise, and thanks to your local talent. The question is, where is the frontier between what is horizontal and what is vertical and specific to you? What is horizontal, if you're a small enterprise or a small country, you should probably buy. What is vertical is definitely something that you need to build.

You have to get it in your head that it's not as hard as you think it is. First of all, because the technology is getting better, it's easier. Could you imagine doing this five years ago? It's impossible. Could you imagine doing this five years from now? It'll be trivial. And so we're somewhere in that middle. The only question is, do you have to do it? Right. The truth of the matter is, I hate onboarding employees.

And the reason for that is because it takes a lot of work. But once you set up an HR organization and leadership mentoring organization and processes, then your ability to onboard employees is easier and is systematically more enjoyable for everybody involved. But in the very beginning, it's hard. Setup's always hard. Setup is always hard. This is no different. The only question is, do you need to do it? If you want to be part of the future, and this is the most consequential technology of all time. Right. Not just our time, of all time. Digital intelligence, how much more valuable, how much more important can it be? And so if you come to the conclusion this is important to you, then you have to engage it as soon as you can, learn along the way, and just know that it's getting easier and easier all the time. The fact of the matter is if we tried to do agentic systems even three years ago, it was incredibly hard. Right. But agentic systems are a lot easier today.

And all of the tools necessary for curating data sets, for onboarding the digital employees, for evaluating the employees, for guardrailing digital employees, all of those are getting better all the time. The other thing about technology is when it becomes faster, it's easier. Could you imagine back in the old days? Of course, I had the benefit of seeing computers from their earliest days. And the performance of the computers was so frustratingly slow, everything you did was hard. But these days, the type of things we do is just magical because it's also fast. And so whether it's motivated by your institutional need to engage in the most consequential technology of all time, or the fact that it's getting better all the time, so it's not that hard, I think the number of excuses is running out.

So let's talk about that for a second, because change is hard. I've got an endless list. If I'm a nation state leader, I'm facing increasing amounts of geopolitical risk. I don't know who my allies are. Elections are coming. There's any number of things I've got to deal with.

But now let's say I understand that this is important. You guys spend so much time talking to nation state leaders who are thinking about what are the risks of adopting AI too fast? And you're right, the zeitgeist has shifted based on the Paris AI Action Summit. It seemed like there's a tone of optimism more than there was a tone of pessimism a year ago. But what are the most common questions you get from nation state leaders when they're asking you about risks and how to think about them?

So I've heard several questions, but one of the risks is to see your population start getting afraid of the technology for fear of it replacing them. And that is something that can actually be prevented if we collectively make sure that everybody gets access to the technology and is trained in using it. The skilling of the various citizens of the populations is extremely important, and so is presenting AI as an opportunity for them to actually work better and showing the purpose of it through applications, through things that they can actually install on their smartphone, through public services. We're working, for instance, with the French unemployment system to actually connect opportunities of jobs to unemployed people through AI agents that are being operated, obviously, by human operators within the agency.

And that is one opportunity. That's a very palpable opportunity for people to find a job better. And so that's part of the thing that can make sure that the population understands the opportunity and the fact that AI is really just a new change for them to adopt, just the same way they had to adopt personal computers in the 90s and the internet in the 2000s. The common aspect with these changes is that you need people to embrace the technology and understand it.

I think the biggest problem that nation states may have is to see AI increase the digital divide that is already relatively big. But if we work together and if done in the right way, we can make sure that AI is actually reducing the digital divide.

AI is a new way to program a computer. It is because by typing in some words, you can make the computer do something. Just like we did in the past. Right. I know you talked to it. You can interact with it in a whole lot of ways. You can make the computer do things for you a lot easier today than it was before. Right. The number of people who could prompt ChatGPT and do productive things, just from a human potential perspective, is vastly greater than the number of people who can program C++ ever.

And therefore, we have closed the technology divide. Probably the greatest equalizer we've seen. It is by definition. Right. The greatest equalizer of technologies of all time. But you still need to have citizens to know about it. I think that's the thing. I'm just describing the fact. Yes. The fact is, there are more people who program computers using ChatGPT today than there are people who program computers using C++. Right. That's a fact.

And so the fact is, this is the greatest force of reducing the technology divide the world's ever known. Right. It's just perceived, and what Arthur's saying, the perception through, I don't know who, and I'm talking about it, and I don't know how, talking about it. But the fact of the matter is, it is not stopping. Right. It's not stopping anything. The number of people who are actively using ChatGPT today is off the charts. I think it's terrific. It's completely terrific. Yeah.

Anybody who's talking about anything else apparently isn't working.

And so I think people realize the incredible capabilities of AI and how it's helping them with their work. I use it every single day. I used it this morning. And so every single day I use it. And I think that deep research is incredible. My goodness, the work that Arthur and all of the computer scientists around the world are doing is incredible. And people know it. People are picking it up, obviously, right? Just the number of active users.

Let's talk about open source for a bit, because both of you have talked quite publicly about the importance of open models in the context of sovereign AI. At DeepMind, you were part of the Chinchilla scaling laws work, which was openly published. Your co-founder, Guillaume, created LLaMA. And then last year, NVIDIA and Mistral worked on a jointly trained model called Mistral NeMo. Why are open models such a big part of your focus?

Because it's a horizontal technology, and enterprises and states are going to be eventually willing to deploy it on their own infrastructure. Having this openness is important from a sovereignty perspective. That's the first point. And then the second point of importance is that releasing open source models is a way to accelerate progress. And we created Mistral on the basis that what we'd seen during our early careers, when we were doing AI between 2010 and 2020, was an acceleration of progress because every lab was building on top of each other. And that's something that kind of disappeared with the first large language models, from OpenAI in particular.

And so spinning that open flywheel back up, where I contribute something and then another lab contributes something else and then we iterate from that, is the reason why we created Mistral. And I think we did a good job at it because we started to release models and then Meta started to release models as well. And then we had Chinese companies like DeepSeek release stronger models, and everybody benefits from it too.

Coming back to Mistral NeMo, one difficulty of creating AI models in an open way is that this is more of a cathedral than a bazaar setting when it comes to open source, because you have large span to do to build a model. And so what we did with the NVIDIA team is really to mix the two teams together, have them work on the same infrastructure, the same code, have the same problems, and combine their expertise to build the same model. And that has been very successful because NVIDIA brought a lot of things we didn't know. I think we brought things that NVIDIA didn't know. And at the end of the day, we produced something that was at the time the best model for its size. Right.

And so we really believe in such collaborations and we think that we should do more of them and at a higher scale. And not with only two companies, but probably with three or four. And that's the way open source is going to prevail.

I completely agree. The benefit of open source, in addition to accelerating and elevating the basic science, the basic endeavor of all of the general models and the general capabilities, is that the open source versions also activate a ton of niche markets and niche innovation. All of a sudden, healthcare, life sciences, physical sciences, robotics, transportation, the number of industries that were activated as a result of open source capabilities that are sufficiently good is incredible.

Don't ignore the incredible capabilities of open source, particularly in the fringe, the niche. But mission critical where data might be sensitive. Yeah, it could be, for example, in mining energy. Right. Who's going to go create an AI company to go mine energy?

Energy is really important, but the mining of energy is not that big of a market. And so open source activates every single one of them. Financial services, it turns out, activates them. Healthcare, defense. You pick your favorites.

Anything that is mission critical and that requires doing one's own deployments, that potentially requires doing on-the-edge deployment as well. And anything that requires some strong auditing and the ability to do a thorough evaluation of it. You can evaluate a model much better if you have access to the weights than if you only have access to APIs. And so if you want to build certainty around the fact that your system is going to be 100% accurate, I don't think you should be using a closed-source model. And you have to connect it into your flywheel.

How are you going to connect your local data? Yeah, you have to connect it into your own, your local data, your own local experience. The more you use it, the better it becomes that flywheel. You can't do it without open source. But let's say I'm a nation state leader. I've been considering open source. I'm starting to hear things like, hey, open source is a threat to national security.

We should not be exporting our models because these open models actually give away a ton of nation state secrets or more importantly, the bad guys can use these open models too. And so this is a threat to security. Instead, what we should be doing is locking down maybe development amongst two or three labs that have the infrastructure to get licenses from the government to do training, to do the right safety and certification work.

I've certainly been hearing that a lot. How should I think about that versus what you're telling me, which is actually, no, open is better for mission-critical industries?

Collaboration between labs is going to be critical for humanity's success. And if one state decides to lock things down, the only thing that is going to happen is that another state will take the leadership. Because cutting yourself off from the open flywheel has just too high a cost for you to maintain competitiveness if you do that. This is a debate that has occurred in the United States recently.

And effectively, if there's some export control over weights, this is not going to stop any country in Europe, any country in Asia from continuing its progress. And they will collaborate to actually accelerate that progress. So I think we just need to embrace the fact that this is a horizontal technology, very similar to programming languages. Programming languages, they're all open source, right? So I think AI just needs to be open source in that respect.

We're glad to see this realization that we could accelerate together by being more open about the way we build the technology. And so it's great to see that open source has a lot of good days ahead of it.

It is impossible to control. Software is impossible to control. If you want to control it, then somebody else's will emerge and become the standard, just as Arthur mentioned. And the question is, is open source safer than... Open source enables more transparency, more researchers, more people to scrutinize the work. The reason why every single company in the world is built, every cloud service provider is built on open source is because it is the safest technology of all. Give me an example of a public cloud today that's built on an infrastructure stack that isn't open source. You start from open source, you could customize it. Right. But the benefit of open source is the contribution of so many people and the scrutiny. Very importantly, you can't just put any random stuff into open source, you'll get laughed off the internet. You've got to put good stuff into open source because the scrutiny is intense. And so I think open source provides all of that. Great collaboration to accelerate innovation, escalate excellence, ensure transparency, attract scrutiny, all of that improves safety.

In a sense, you're saying it's partly more secure because, as we've seen with open source databases, storage, networking, compute, you get mass red teaming. The whole world can help you red team your technology versus just a small group of researchers inside your company. Is that roughly right? Yeah, exactly.

By pulling a lot of organizations together to come up with a technology that they can all use and specialize on their own domains, you're forcing the technology to be good for every one of them. And so that means you're removing biases, you're really making sure that the general purpose models that you're building are as good as possible and don't have failures. And I think open source in that respect is also a way to reduce the number of failure points. If as a company today, I decide to rely fully on a single organization and on its safety principles, on its red teaming organization as well, I'm trusting it a little too much. Whereas if I'm building my technology on open source models, I'm trusting the world to make sure that the basis on which I'm building is secure. So that's a reduction of failure points. And that's obviously something that you need to do as an enterprise or as a country.

We're going to transition a little bit now into company building, which is something a lot of people are excited to hear from both of you about. So let's start with you, Jensen. You've remarked that NVIDIA is the smallest big company in the world. What enabled you to operate that way?

Our architecture was designed for several things. It was designed to adapt well in a world of change, either caused by us or affecting us. And the reason for that is because technology changes fast. And if you overcorrect on controllability, then you are underserving a system's ability to become agile and to adapt. And so our company uses words like aligned instead of words like control. I don't know that I've one time used the word control talking about the way that the company works. We care about minimum bureaucracy and we want to make our processes as lightweight as possible. Now all of that is so that we can enhance efficiency, enhance agility, and so on and so forth.

We avoid words like division. When NVIDIA was first started, it was modern to talk about divisions. And I hated the word divide. Why would you create an organization that's fundamentally divided? I hated the word business units. The reason for that is because why should anybody exist as one? Why don't you leverage as much of the company's resources as possible?

I wanted a system that was organized much more like a computing unit, like a computer, to deliver on an output as efficiently as possible. And so the company's organization looks a little bit like a computing stack. And what is this mechanism that we're trying to create? And in what environment are we trying to survive? Is this much more like a peaceful countryside? Or is this much more like a concrete jungle? What kind of environment are you in? Because the type of system you want to create should be consistent with that. And the thing that always strikes me as odd is that every company's org chart looks very similar, but they're all different things. One's a snake, the other one's an elephant, the other one's a cheetah, and everybody is supposed to be somewhat different in that forest, but somehow they all get along. Same exact structure, same exact organization. It doesn't seem to make sense to me.

I agree that it feels like companies have personalities. And despite the fact that they're organized sometimes similarly...

I should say that obviously we have a lot of things to learn. I mean, the company is not even two years old. I guess one challenge we have with Mistral, and I think our competitors have the same challenge, is that this is one of the first times that a software company is actually a deep tech company that is driven by science. Science doesn't have the same time scales as software. You need to operate on a monthly basis. Sometimes you don't know exactly when the thing will be ready. But on the other hand, you have customers asking, when is the next model coming up? When is this capability going to be available? Et cetera. And so you need to manage expectations. And I think for us, the biggest challenge, and I think we're starting to do a good job at it, is to manage the hinge between the product requirements and what the science is able to do. Research and product. Yes, research and product.

And you don't want the research team to be fully dedicated to making the product work. So you need to work, and I think we've started to do it, on making sure that you have several frequencies in your company. You have fast frequencies on the product side, iterating every week. And you have slow frequencies on the science side that are looking at why, profoundly, the product is failing on certain domains and how they could fix it through research, through new data, through new architectures, through new paradigms. And I think that's fairly new. This is not something that you would find in a typical SaaS company because this is inherently a science problem.

I mean, NVIDIA is one of the most successful companies that, over a 30-year timeline, has figured out a way to keep science and research ahead of the rest of the world, whether it was CUDA back in 2012, where that was fundamental systems research, or Cosmos today, which is, you know, definitely state of the art on how simulation should work. We've harmonized exactly what Arthur just said. Is that heuristic right for you? Yeah, we harmonize that inside our company. We have basic research, applied research, and then we have architecture, and then we have product development.

And we have multiple layers of it. And these layers are all essential. And they all have their own time clock. In the case of basic research, the frequency could be quite low.

On the other hand, all the way to the product side, we have a whole industry of customers who are counting on us. And so we have to be very precise. And somewhere between basic research and discovering, hopefully, surprises that nobody expects. Right. On the one hand. On the other hand, to be able to deliver on what everyone expects. Predictably. Okay. These two extremes we manage harmoniously inside our company.

There's so many fascinating things about this market, but there's one in particular that I want to call out. Both of you have customers that are also your competitors. And those competitors are huge and highly capitalized tech giants. NVIDIA sells GPUs to AWS, which is building its own chips called Trainium. And Arthur, you're training models that you sell through AWS and Azure, who have funded labs like Anthropic and OpenAI. So how do you win in an environment like this? And how do you manage those relationships? Because we talked about company building internally, but now I'm curious externally, how do you survive?

Jensen said it well: you give up control, but you work on alignment. And despite the fact that sometimes certain companies can be competitors, you may have aligned interests and you can work on specific agendas that are shared. You have to have your own place. Obviously, these cloud service providers aren't working with Arthur because they already have the same thing. They just want two of the same things.

It's because Arthur and Mistral have a position in the world that is unique to Mistral. And they add value in a particular place that is unique. A lot of the conversation we've had today is about areas that Mistral and the work and their position in the world make them uniquely good at. And we are different. We're not just another ASIC. We can do things for the CSPs and do things with the CSPs that are not possible for them to do themselves. For example, NVIDIA's architecture is in every cloud. And in a lot of ways, we are their first onboarding for amazing future startups. And the reason for that is because by onboarding to NVIDIA, they don't have to make a strategic or business or otherwise commitment to a major cloud. They could go into every cloud and they could even decide to build their own system if they like, because the economics turns out to be better for them at some point, or they would like access to capabilities that we have that are somewhat protective within the clouds.

And so whatever the reasons are, in order to be a good partner to somebody, you still have to have a unique position. You need to have a unique offering. And I think Mistral has a very unique offering. We have a very unique offering. And our position in the world is important to even the people we compete against. And so I think when we are comfortable with that and comfortable in our own skin, then we can be excellent partners to all of the CSPs and we want to see them succeed. I know that it's a weird thing to say when you see them as a competitor, which is the reason we don't see them as a competitor. We see them as a collaborator who happens to compete with us as well. And probably the single most important thing that we do for all the CSPs is bring them business. And that's what a great computing platform does. We bring people business.

I remember when Arthur and I first met, we sat down in London at a late night restaurant and sketched out the plan for his Series A. And we were figuring out why he needed so much capital for the Series A, which in hindsight was remarkably efficient. I think the Mistral Series A we put together was half a billion relative to other folks who had to spend multiple billions to get to the same place. But I asked him, what chips would you like to run on? And you looked at me as if I had asked an absurd question: how could the answer be anything other than NVIDIA, other than H100s? And I think the startup ecosystem that NVIDIA has invested in creates so much business for the clouds. What is the philosophy that led you to invest so deeply in startups and founders so early on, even before anybody knew about them?

Two reasons, I would say. One, the first reason is I rarely call this a GPU company. What we make is a GPU, but I think of NVIDIA as a computing company. If you're a computing company, the most important thing you think about is developers.

Right.

So that's number one. The second thing is we were pioneering a new computing approach that was very alien to the world of general purpose computing. And so this accelerated computing approach was rather alien and counterintuitive and rather awkward for a very long time. And so we're constantly seeking out, looking for the next incredible breakthrough, the next impossible thing to do without accelerated computing. And so it's very natural that I would find and would seek out researchers and great thinkers like Arthur because, you know, I'm looking for the next killer app. And so that's kind of a natural intuition, natural instinct of somebody who is creating something new. And so if there's an amazing computer science thinker that we haven't engaged with, that's my bad. We got to get on it.

That's a perfect segue from a computing perspective. What are the most significant trends you see on the horizon? And in particular, for an audience who might be prime ministers or presidents or ministers of IT in some of the world's fastest growing markets trying to understand where computing is going, how would you guide them?

We're moving towards workloads that are more and more asynchronous. So workloads where you give a task to an AI system and then you wait for it to do 20 minutes of research before returning. So that's definitely changing a bit the way you should be looking at infrastructure because that creates more load. So I guess it's a bull case for data centers and for NVIDIA.

As I've said, I guess, in the beginning of this episode, all of this is not going to happen well if you don't have the right onboarding infrastructure for the agents, if you don't have a proper way for your AI systems to learn about the people they interact with and to learn from the people they interact with. So that aspect of learning from human interaction is going to be extremely important in the coming years. And there's another aspect, which is around personalization, of having, I guess, models and systems consolidate the representation of their users to be as useful as possible. I think we are in the early stage of that. But that's going to change, again, pretty profoundly the interaction we have with machines that will know more about us and know more about our taste and how to be as useful as possible towards us.

As a leader of a country, I want to think about education, about making sure that I have a local talent pool that understands AI enough to create specialized AI systems. And I want to think about infrastructure, both on the physical side, but also on the software side. So what are the right primitives? What is the right partner to work with that is going to provide you with the platform of onboarding? And so those two things are important. If you have this and you have the talent, and if you do deep partnerships, the economy of your state is going to be profoundly changed.

The last 10 years, we've seen extraordinary change in computing. From hand coding to machine learning, from CPUs to GPUs, from software to AI. Across the entire stack, the entire industry has been completely transformed. And we're going through that still. The next 10 years is going to be incredible. Of course, the industry has been wrapped up in talking about scaling laws.

And pre-training is important, of course, and continues to be. Now we have post-training. And post-training is thought experiments and practice and tutoring and coaching and all of the skills that we use as humans to learn. The idea that thinking and agentic and robotic systems are now just around the corner, it's really quite exciting. And so what it means to computing is very profound.

People are surprised that Blackwell is such a great leap over Hopper. And the reason for that is because we built Blackwell for inference and just in time because all of a sudden thinking is such a big computing load.

And so that's one layer: there's a computing layer. The next layer is the type of AIs that we're going to see. There's the agentic AI, the informational digital worker AIs, but we now have physics AI that's making great progress. And then there's physical AI that's making great progress.

Physics AI is, of course, things that obey the physical laws and the atomic laws and the chemical laws and all of the various physical sciences, where we're going to see some great breakthroughs. And I'm very excited about that. That affects industry, that affects science, affects higher education and research. And then physical AI, AI that understands the nature of the physical world, from friction to inertia, cause and effect, object permanence, those kinds of basic things that humans have as common sense but most AIs don't. And so I think that that's going to enable a whole bunch of robotic systems that are going to have great implications in manufacturing and others. The U.S. economy is very heavily weighted on knowledge workers.

And yet many of the other countries are very heavily weighted on manufacturing. And so I think many of the prime ministers and the leaders of countries should realize that the AIs that they need to transform and to revolutionize the industries that are so vital to them, whether energy focused or manufacturing focused, are just around the corner. And they ought to stay very alert to this. I would encourage people not to over-respect the technology. And sometimes when you over-admire a technology, over-respect the technology, you don't end up engaging it. You're afraid of it somehow. Some of the things that we said today about AI closing the technology divide are really something that ought to be recognized. This is of such incredible national interest that you have the responsibility to engage it. Anyhow, exciting times ahead.

That was incredible. Thank you both so much for making time. If they want to go learn more, they want to figure out how to partner with the two companies. Call us. Are you kidding me? You can call us, yes. The two of us. We'll start with listening to this podcast and then giving them a speed dial. We'll put their numbers in the show notes. JensenNVIDIA.com. Job done. You heard it here. We're very responsive. I can attest to that. All right. Thank you so much, guys. All right. Thank you, Andrew. Thank you. All right. Thank you.

All right, that is all for today. If you did make it this far, first of all, thank you. We put a lot of thought into each of these episodes, whether it's guests, the calendar Tetris, the cycles with our amazing editor, Tommy, until the music is just right. So if you like what we've put together, consider dropping us a line at ratethispodcast.com/a16z and let us know what your favorite episode is. It'll make my day and I'm sure Tommy's too. We'll catch you on the flip side.