#203 - Gemini Image Gen, Ascend 910C, Gemma 3, Gemini Robotics

Last Week in AI

Hello and welcome to the Last Week in AI podcast where you can hear us chat about what's going on with AI. As usual in this episode, we will summarize and discuss some of last week's most interesting AI news. And as always, you can go to the episode description for the timestamps and links for those articles and also to lastweekinai.com where you can go and browse the web and so on.

I am one of your regular hosts, Andrey Kurenkov. I studied AI at Stanford and now work at a generative AI startup, Astrocade.

And hi, everyone. I'm Jeremy, your other regular co-host. I've been in and out the last couple of weeks, but yeah, great to be here. I'm co-founder of Gladstone AI, AI national security stuff. You know the deal if you've been listening to the podcast. And this week, we were talking about this. It happens every once in a while, not that often these days, but we looked at our roster like, man, this is a light week. And I guess it's going to be a short podcast for that reason.

But like hot air that expands to fill the entire volume available to it, I'm sure we'll find a way to make this a two-hour podcast. Nonetheless, it's a problem. It's a skill, you know; we really are capable of talking a lot when we have the time. But to give a quick preview of what we'll be talking about: in tools and apps, there's a variety of smaller tools launching, one kind of major one from OpenAI, but the rest are maybe less notable, though kind of varied and interesting. In applications and business, as is often the case, we're going to be talking about a lot of hardware: OpenAI spending a lot of money on it, some developments from Huawei, and a couple of business deals as well.

In projects and open source, there are a couple of new models out, Gemma 3 and one from Sesame. Pretty exciting ones. In research and advancements, we've got Gemini Robotics, which is kind of, I don't know, unexpected for me and pretty exciting, and an interesting paper about test-time compute. And finally, policy and safety, our usual mix. We've got one paper on understanding and alignment, and then we have a lot of stories about China-US relations, which seem to be a big deal these days.

Yeah, it's going great. It's going great.

Well, let us just go ahead and jump in. Starting with tools and apps, the first story is OpenAI launching new tools to help businesses build AI agents. There's now a new Responses API, which allows you to make custom agents that do things like web searching and file scanning.

And that is meant to be the more autonomous capability. It also allows you to use the Computer-Using Agent (CUA) model, which can control much more varied types of things on your device. And apparently enterprises can run that computer-using agent model locally, although in the consumer version, you can only use it for web actions.

So I think this is not too surprising; we saw Anthropic also launch a computer use API in early release a while ago. And it will be very interesting to see if this is part of a trend. The next wave of automation after plain LLMs seems to be something like this.

Yeah, it's a really interesting moment for the agentic business model, if you want. Here's OpenAI essentially looking at how to unbundle the package they've offered historically through the agentic systems they built, basically, right? "We built the agents, you use them." This is them saying, "No, no, we'll give you access to the underlying tooling, and you can build your own agents." This comes with, for example, a file search utility that can scan across files in your database and pull relevant content from them. So that's at least in principle the promise there. But yeah, there's a whole bunch of other stuff; you mentioned the CUA model, the computer-using agent model behind Operator. And that, by the way, generates mouse and keyboard actions, so it's really about computer use itself. But essentially, yeah, unbundling this gives customers a lot of options for how to create their own agents, right?

Eventually, though, every indication from OpenAI is that they intend to have one experience to rule them all, at least on offer, right? So they are looking to build an integrated solution where it's not unbundled. But this is kind of the other side of the coin, right? You're either building tooling to empower your users to build their own agentic systems, or you're building one agentic system that, you imagine, most consumers would tend to use directly so they don't have to wrangle their own thing. They're trying to have it both ways here, right? Unbundled and bundled products, which I would expect anybody with sufficient scale, who can afford to focus on two things at once, to have to do at some point, because it's along the spectrum towards open sourcing, right?

When you start unbundling the tools, you let people mess around with them and see what they build. So that itself is kind of interesting. And then OpenAI can learn from how those tools are being used, in the same way that Meta learns from the way people play with Llama in the open source world, and then integrate those learnings into their own fully packaged agentic system. So kind of interesting. I think it's a good strategic play. They're opening up a whole bunch of tools, including an open source toolkit called the Agents SDK.

And that gives you a bunch of free tools to integrate models with your internal systems, add in safeguards, and do monitoring for your agents as well. So pretty interesting. It's, again, this sort of straddling of the line between what do we open source and what do we not. And I think it's a nice middle ground that OpenAI has spotted there. Expect Anthropic to do the same. Expect xAI ultimately to do the same. I think a lot of this stuff is going to get picked up, but it does seem like a good strategic plan. Yeah.

Yeah, and I think it also points to something I suspect. We don't know the details, but I would imagine that the API is where the real money is, right? They have a consumer version. You can pay a subscription of $20 per month, or now $200 per month if you are a total power user. And presumably enterprises are paying for that for their workers. But a bunch of companies, including to some extent our company, are using the API to create their own thing on top of ChatGPT or on top of Claude. And I would imagine that long term, that's how OpenAI and Anthropic will be making the majority of their money. And certainly Anthropic is targeting enterprise explicitly. So this also plays into that, I think.

Absolutely. The money, as ever, is in B2B, right? And it's interesting that in a way you can view the history of ChatGPT as just using B2C, business to consumer, to build brand recognition for the B2B play that was inevitably going to be most of the value created here. I think the one caveat is when you go really long term with this stuff: eventually, if you're talking about superintelligence, and if OpenAI plans to start centralizing more and more of the economically productive activity, which you have to imagine they would, despite everything they say publicly about wanting to empower creators, then, like Amazon with Amazon Basics or whatever, they'll spot the products that are selling really well and, boom, snap up those opportunities. Expect the economics to look the same for OpenAI. When that happens, they're essentially cutting out the business middleman, going straight to the consumer, and internalizing all that value in some verticals, not all, but some. So I think there's going to be this interesting evolution, to your point: a transient period where B2B is where all the money's to be made. But then, because AI has the ability to eat the world, it's going to be really interesting to see whether they ultimately become as much a B2C company as a B2B company.

Right now, by the way, Anthropic has a massive advantage, relatively speaking, in terms of the balance of consumer versus business. Anthropic is much more focused on the business side, and that's reflected in the better coding abilities of Claude 3.7 Sonnet, Claude 3.5 Sonnet (new), and all that stuff, even over and above some of the agentic models from OpenAI. But I think that's a great point. It's so interesting, right? These companies are inventing new business models. No one really knows what's going to work or how they'll evolve over time. The one thing that's guaranteed is they will evolve and we will be surprised.

Next up, we have a story from Google. They are now releasing native image output in Gemini 2.0 Flash.

That allows you to do conversational image editing: as you chat back and forth, you are able to ask it to generate an image. To my understanding, this is different because it's not calling on another tool. It's built into Gemini 2.0 Flash itself as a multimodal model.

And so the results can be quite impressive in multi-turn conversations. One of the key challenges of image generation is that if you want to edit an image, you also want to preserve, let's say, the characters, the people, and various aspects of the image while still changing it. That's one thing you get sort of for free here, because Gemini 2.0 Flash has the context of the conversation, both in terms of the text and the images. And that means it can do a pretty stellar job, from examples I've seen, of maintaining consistency while also being very generalized and able to do all sorts of stuff directed by your input. Initially, when this was announced, it was available to some testers. Now it is rolling out to users and even developers.

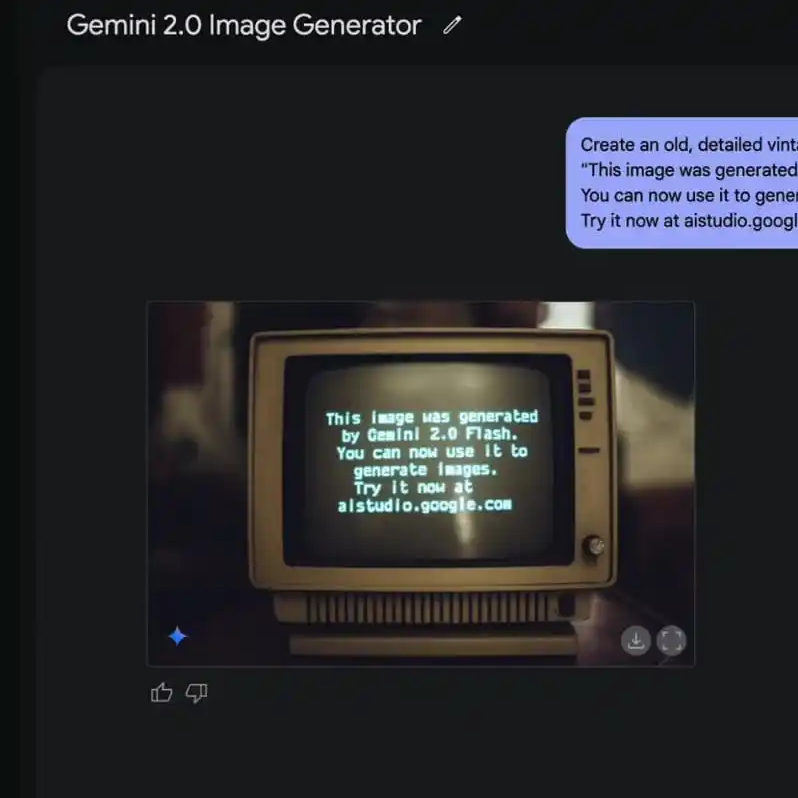

Yeah, and there's a small handful of things you tend to turn to these days when you ask how good a new image generator is. Text, right? It handles text really well, at least based on the demos they show, which, you never know. They have it create a detailed vintage 35mm photograph of the front view of a computer monitor, and then they say, have this text displayed on the monitor. It's about three lines, and it captures every word as far as I can tell. So that's a failure mode we've seen before. And it's the combination: once you get into text that you want faithfully represented in the image, and you also want back-and-forth editing of the image, as you stack these things together, that's where you run into a lot of problems. At least based on what they've decided to show us in the demo, it looks pretty remarkably good. So, as ever, I'm wondering: what is the next step in image editing?

I'm sure there will be some. But for those of us who are just lowly consumers of image generation tech, I think we're pretty close to the saturation point. I mean, Andrey, you're obviously more plugged in on the gaming side. I'm guessing you have specific things you might look for, just because you're generating visual artifacts, avatars, things like that?

Yeah, I mean, in our testing, we found that these are general-purpose models and they reflect their training datasets, so they're really good at generating the kind of stuff you would find on the web. If you have a very specific set of requirements, typically these models aren't ideal. So being very good at instruction following, down to very minute details, is very important for using them zero-shot for whatever you're doing. That could be one of the benefits here. Another interesting aspect, just looking at their blog post, is that if you look at the examples they give of multi-turn conversational image editing, you have to wait upwards of 10 seconds for a response. I would imagine that's one of the limitations of native image output: a multimodal model that does image, text, and audio can output images for you with very flexible reasoning and accuracy, but it is way slower than the text-to-image generators we see on the market. So I think that's an interesting trade-off we haven't necessarily seen before.

Yeah, there's almost a use-case issue there, where until compute is so cheap that for most practical purposes you can get instantaneous outputs from multimodal models, there's probably going to be a need for high-specificity models that only handle one modality or another, and maybe router models that route your queries from whatever modality to whatever modality. But we're definitely not at the point where we can have a single Gato-like model that just does everything for everything. Yeah, people are still using plenty of LoRAs out there, that's for sure.

Moving on to the lightning round with a few smaller stories. First up, one of my favorite topics, apparently, since I keep bringing it up: it's Waymo. And they are yet again expanding. They are now offering robotaxi rides in a few more cities in the Bay Area, including Mountain View, Palo Alto, Los Altos, and parts of Sunnyvale, which is exciting to me because I work in Los Altos. So now I'll potentially get to use it in my commute sometimes, just because that's fun. This is part of their rollout; it seems like they are really trying to expand a lot this year. They've expanded to Phoenix to offer their robotaxi services there. They're trying to expand to LA.

And they also are planning to go to Atlanta. So yeah, it seems like they feel ready to expand. For me, the main question is, can they do it more rapidly than they have for the past year or so? I mean, they've been in San Francisco for quite a while now, maybe two years. And they're now moving to the suburbs south of San Francisco, to some of these smaller cities in their backyard, so to speak. So they're still moving a little slowly on the expansion front, but it seems like they haven't had any big crashes or anything of that sort as they've expanded, which is promising.

Yeah, it's also strategically interesting, right? One of the big things that's come out from Waymo recently, which we covered, is their partnership with Uber in Austin. And that seems to be expanding now to Atlanta, or at least it will later this year. It does make me think that Uber is at some risk here, right? Because essentially Uber is the core platform they're using for a lot of these rides: as an Uber customer in Austin, you can now get matched with a Waymo robotaxi. The same thing will be true in Atlanta. Yes, Uber is a marketplace; that's the value of Uber in this context, right? It's discovery of supply and demand. But at a certain point, if people get comfortable riding in Waymo cabs, the brand is established. If Waymo just comes out with an app and then undercuts Uber, which presumably they may be able to do, if only for a transient period to onboard people, Uber has got some platform risk here.

And so it's not a coincidence, right, that Uber had previously made self-driving a priority through Uber ATG. They've since ditched that because it was too capital intensive and they weren't making enough progress. But they pursued it because they saw this eventuality potentially coming, with huge amounts of platform risk on the table. So, yeah, I mean, I don't love Uber's platform positioning here. I think they have great software, but when you're riding on a hardware platform where there's potentially a lot of efficiency to be gained from vertical integration, because the margins are so limited, I wonder what they're thinking and how that ends up playing out. But we'll get some early indications anyway with these rollouts in Austin, Phoenix, and elsewhere.

Right, exactly. And to that point, Waymo already has a standalone app you can use, for example, in San Francisco. So they're kind of ready to get rid of Uber whenever they can. I guess the benefit for Uber is just their scale. They're everywhere, obviously, across the globe. So it'll take quite a while for robotaxis to even have enough hardware in the first place to be able to compete. And the big question, as ever, is whether Tesla is going to be able to catch up, because right now it seems like Waymo is the only player in town in the robotaxi business.

Next up, we have a new video generator. A startup called Moonvalley has released a video generation model called Marey, with the pitch that it's trained on licensed content only, so no unlicensed copyrighted data. This was done in collaboration with Asteria, an AI animation studio, and it seems to be meant more for, let's say, cinematic or media production roles. It allows you to customize camera and motion controls, for example, and in-scene movements and things like that. And it allows you to produce high-resolution clips up to 30 seconds long, with, again, the pitch being that there's low legal risk.

So it's been a little quiet on the text-to-video front. We had a big moment with Sora being released a while ago. We saw Adobe, I believe; I don't remember if it's been released already, but they have announced their video generation model. So the rollout is continuing, even as the focus has definitely shifted to reasoning.

Yeah, and apparently, while they're starting off with more openly licensed stuff in this first release, they are working with partners to handle licensing agreements and package videos into datasets that they can then purchase, which is a lot like what Adobe is doing, right? Adobe was, I think, the first company, certainly the first we covered, to offer an indemnification guarantee: if you get sued over our software's image or video outputs, whatever, we will indemnify you and defend you in court, as long as you're using our software as it's intended to be used. The interesting thing with Moonvalley, too, is that I'm not tracking how much they've raised, but it's certainly not going to be a huge amount; it's not going to be in the orbit of what OpenAI has on hand. So when you think about a small company like that trying to do this through licensing agreements and purchasing video content from other companies, it's a much taller order.

But strategically, and this is just speculation, there's actually an interesting potential symbiotic relationship here with companies that put out licensable video content. I imagine if I'm a company that's pumping out videos that might be used for training these models, I might actually want to partner with a company like Moonvalley, give them very, very cheap access, but still sell them licenses for these videos, if only to set the precedent so that OpenAI then feels pressure to come in and buy. And once you get the big companies to come to you, then you charge the full amount, if that makes sense. So I don't know. I don't know the legalities of how that would play out, if there's an issue with selective pricing for different players like that. But there's an interesting potential partnership here: content creation platforms could license stuff cheaply to up-and-coming companies just to get that flywheel going and set the precedent, and then charge the bigger companies that can afford it the full price. Not saying that's part of this, but it kind of makes me think in that direction when you look at this.

Right. And to your point about funding, I just looked it up. They got a seed round of $70 million back in late 2024; that was when they announced it, at least. So a significant amount, but not a huge amount. And that's another part of the story, I think: it turns out you can get pretty good video models these days for not a ton of money, not hundreds of millions of dollars. We'll get to that also in the open source section.

When the cost of compute collapses, right, 10X every year, a $70 million raise is effectively a $700 million raise, at least if you're comparing CapEx to CapEx.

Yeah. Mm-hmm.
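The "$70 million is effectively $700 million" point is simple compounding, so here is a minimal sketch of it. It assumes, hypothetically, that the price of a fixed amount of compute falls by a constant factor every year (the rough 10x figure from the conversation) and treats "effective budget" as dollars multiplied by that improvement; real hardware and training costs don't fall this cleanly, and `effective_compute_budget` is an illustrative name, not anything from Moonvalley.

```python
def effective_compute_budget(
    raise_usd: float, annual_cost_drop: float = 10.0, years: float = 1.0
) -> float:
    """Express a raise in compute purchasing power relative to `years` earlier.

    Hypothetical model: the price of a fixed amount of compute falls by a
    constant factor `annual_cost_drop` every year (10x is the rough figure
    quoted in the episode), so the same dollars buy
    annual_cost_drop ** years times as much compute.
    """
    if raise_usd < 0 or annual_cost_drop <= 0:
        raise ValueError("raise must be non-negative and the cost-drop factor positive")
    return raise_usd * annual_cost_drop ** years


if __name__ == "__main__":
    # Moonvalley's reported $70M seed, compared with an equal raise 1 and 2 years earlier.
    print(f"1 year:  ${effective_compute_budget(70e6):,.0f}")   # $700,000,000
    print(f"2 years: ${effective_compute_budget(70e6, years=2):,.0f}")  # $7,000,000,000
```

The one-year case reproduces the $700 million figure; making the decline factor a parameter shows that the conclusion is only as strong as the 10x-per-year assumption.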

Next up, we have Snapchat, and they are introducing AI video lenses that use their own in-house model. So if you're a Snapchat Platinum subscriber, and I'm sure we have many listeners who use Snapchat, you can pay $16 per month to be able to use these filters, well, not quite filters; I guess it's more like video editing. They have three AI video lenses, currently raccoon, fox, and spring flowers, which basically take your video and add in a raccoon, a fox, or flowers. There are some sample videos, and, you know, I don't know, they look fun.

I've been waiting for this feature for so long. I can't... You know, if I have to use another fucking image editing platform that doesn't have an editable fox or raccoon feature, I'm going to lose it. Yeah.

You know, I didn't know this was such a highly desired feature, I'm going to be honest with you. I didn't know raccoons were such a big deal on Snapchat. Apparently they are. But anyway, I think it's interesting to see Snapchat investing in an in-house generative AI model. And it's a real question mark whether this would be an incentive to actually pay $16 per month. But yeah, I don't know much about Snapchat, so I don't know if users are big on video filters of this sort.

Yeah, I've never felt more disconnected from the median consumer of a product. Like, $16 a month for the... Okay. I mean, I could see other uses, and there's other stuff that will obviously come with this; I'm sure they'll roll it out. It is interesting that they chose to go in-house with this. Maybe not too surprising, given the sheer volume of data they have, and also the fact that when you look at Snapchat video...

Not gonna lie, it's been a while for me, but it does have a certain aspect ratio; people tend to frame their shots a certain way. There's a whole culture around the use of the app. And so you might expect that a fine-tuned model, or I guess even a pretrained model like this done all in-house, can actually make sense there. They're presumably also training on other open source video data at a minimum, I would assume, but certainly, when you have such a huge amount of data in-house, it causes you to lean in that direction, especially if you've got the funds.

And one more story. This is one I have a soft spot for; it's not covered in a lot of media, but I think it's kind of neat. The headline is: Sudowrite launches Muse AI model that can generate narrative-driven fiction. Sudowrite is a platform that is basically an AI assistant for writing, generally fiction and potentially also blog posts. They've been around for years and years, and it was one of the tools I used a few years ago when I was playing around with these things. So they were early on the LLM train, and now they have this Muse model that they say is actually capable of producing better literature, so to speak, and can better assist you in writing. That's one of the things to highlight: Sudowrite is meant to be an assistant. You type, and then there are suggestions, with the ability to suggest structure, characters, and so on. It's another slightly interesting idea, where we know that on the one hand, things like ChatGPT can write an entire short story that is logical and readable.

On the other hand, it's generally true that if you just ask an LLM to write you something, it's going to be pretty generic and pretty lame to read. So I could plausibly see that there is space, with some data, to get to a model that is much better by default at producing good writing suggestions that aren't, let's say, cliche or just boring.

Yeah, or unusable if you're trying to write something a little more out there than what you typically see. Yeah, well, and I don't think we have a story for this specifically because it's just sort of rumors and pre-announcements, but OpenAI, right, sort of came out, I think, yesterday as of the time of recording to say, hey, we have this new model we're working on that's really good at creative writing. Sam Altman tweeted about it, or X'd about it. It's definitely something people have thought would be a sticking point for LLMs, right? It's easy to train them, especially agentic models, but even just general pretrained LLMs, to do coding and things you can objectively quantify and evaluate. But the creative writing stuff is harder. So maybe we'll see more of a push in this direction. I'm curious how the Sudowrite model and the upcoming OpenAI model compare in terms of performance, but also what the training process looks like to get more creative outputs.

Because it's not obvious to me, at least, how you would do it, other than curating your data more carefully, which is sort of the obvious thing here, or maybe putting more weight on it, changing the order in which you train and making sure that at the very end of your training you're putting in the highest-quality sources. These are all standard tricks. But yeah, I'm curious to see how this differs both in performance and in training procedure.

Yeah, unfortunately, they didn't release too many technical details about what is actually involved here. What would be neat is if this came out of the platform itself, Sudowrite, having such a particular use case, where you have actual people using it and accepting or rejecting suggestions, rewriting parts of the output, picking from various suggestions. That is a goldmine of data for this particular use case that nobody else has.

If that was part of how they did this, and they did also say that they talked to thousands of users of the platform, it could be an interesting example of a more niche platform becoming a leader for that application.

Yeah, that opens the door to a kind of process reward, or even RL-type stuff, based on, like you said, the editing history. That would be an interesting thing to get into, not just the outputs, but yeah.

And on to applications and business. We have a story about OpenAI: they are in a five-year, $12 billion agreement with cloud service provider CoreWeave. Part of it is an investment: OpenAI is getting $350 million in equity from CoreWeave. That will presumably play into OpenAI's need for infrastructure. CoreWeave has an AI-specific cloud service with 32 data centers and over 250,000 NVIDIA GPUs.

Microsoft is a big user of CoreWeave, actually. And it seems that OpenAI now is also planning to have additional compute providers outside of Microsoft, who is presumably still their number one source of compute.

Yeah, this is a really interesting story in a couple of different ways. I think we covered last week that CoreWeave is planning an IPO that's coming up. One of the concerns there is that Microsoft accounts for the lion's share of CoreWeave's revenue, right? 62%. That's a source of pretty significant risk for CoreWeave. This deal with OpenAI is probably a very refreshing injection of funds and potential for partnership, diversifying their portfolio of customers at scale a little bit. CoreWeave is, by the way, backed by NVIDIA; that's how they've been able to access so many GPUs so soon. They're now already in the process of adding Blackwells, so that's a big deal for compute capacity. But the other dimension of this, besides the IPO and how this helps CoreWeave strategically, is the partnership between Microsoft and OpenAI that you referenced. There's a bit of a deteriorating relationship there, right? We talked about this a few weeks back in the context of the big Stargate builds happening right now: a big partnership between OpenAI and not Microsoft. It was supposed to be Microsoft, but instead it's Oracle and Crusoe, Crusoe being a big data center company and Oracle being a partner that provides a lot of the GPUs. That's a great deal for those companies, but it does mean OpenAI is breaking off the reliance it had on Microsoft.

The story there seems to be that Microsoft's risk appetite to keep on with the Stargate build has been more limited than OpenAI's. I think that's somewhat overblown. My understanding is that behind the scenes, Microsoft is actually a major funder of the Stargate initiative; it's just not a publicly recognized thing. But still, this is OpenAI finding even more diversity in their supplier and vendor portfolio, if you will, for compute, and that gives them a bit more leverage over Microsoft. So you see each company trying to position itself, right? Microsoft is going off and making their own reasoning models, and OpenAI is going off and finding their own compute partners. There's this very uneasy equilibrium between Microsoft and OpenAI that seems to be falling apart a little bit, death-by-a-thousand-cuts style.

Next story: we're getting into chips being made in China, or possibly not in China, actually, as we'll get to. The story is that Huawei's new chip, the Ascend 910C, is seemingly entering production and would be the leading AI accelerator made by a Chinese company.

This is part of their Ascend 910 chip line. There's various analysis, and I guess we don't know exactly, but according to one person commenting, Lennart Heim, it seems this will be roughly along the lines of an H100, so not near NVIDIA's current flagship, the B200. It's probably a fraction, about one third, of the computational performance, with much less memory and so on. But still, this is a domestically made product for AI acceleration that is moving closer to NVIDIA. At least it is comparable to an A100 GPU, for instance.

Yeah, this is a... It's a really... By the way, Lennart Heim is a great guy to follow if you're interested in anything China-chip related, a lot of export control stuff. He has a great tweet storm on this that's worth checking out too. But yeah, it is a big story, right? One of the big dimensions of this is that China's been able to get its hands on the Ascend 910Bs. So just for context, the 910C is the next-generation Huawei chip that's just entering production. That's the one that's, depending on how you calculate it, about 80% of the performance of an NVIDIA H100. And the H100, I think, started production about three-ish years ago. So it's 80% of a chip that came out three years ago. There's a little fog of war here. A lot of these chips seem to have been sourced illicitly from TSMC. I've seen some differing accounts there, between the CSIS post and, I think, a TechRadar article or something; people are disagreeing over this a little bit. But it definitely seems like there are a lot of illicitly acquired chips, potentially including these 910Bs, which were presumably produced by TSMC. So, two of these 910B dies, these little logic dies... If you go back to our hardware episode, logic dies are what actually run the computations. They're distinct from the high-bandwidth memory that sits on the chip.

But these two 910B dyes need to be packaged together to make a 910C. And so apparently, they seem to have gotten their hands on about 2 million of these 910Bs enough to make

around a million 910 C's. And essentially around a million H100 equivalents this year seems to be well within reach. There are all kinds of caveats around die-to-die bandwidth and how the packaging process that Huawei actually has available to it sucks compared to TSMC's.

But, you know, this is a lot of stockpiling like that China has done and Huawei has done an amazing job of stockpiling a whole bunch of chips ahead of export controls. This doesn't just include logic, logic dies like the nine tens, but also high bandwidth memory. So HBM2E that they sourced from Samsung, apparently they have enough for about 1.4 million nine tens CO2.
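A back-of-the-envelope Python sketch of the die arithmetic discussed above. It assumes (illustrative inputs, not reported specs) two 910B logic dies per 910C package, one HBM set per package, and a flat ~80% of H100 performance per package; `h100_equivalents` and its parameters are hypothetical names. It shows why the scarcer input caps output: about 2 million dies plus HBM for about 1.4 million packages is logic-limited at roughly 1 million packages.

```python
def h100_equivalents(logic_dies: int, hbm_sets: int,
                     dies_per_package: int = 2,
                     packaging_yield: float = 1.0,
                     perf_vs_h100: float = 0.8) -> float:
    """Rough H100-equivalent count for a two-die, 910C-style package.

    Defaults are illustrative assumptions, not reported specs: two logic
    dies and one HBM set per package, perfect packaging yield, and each
    package delivering ~80% of an H100.
    """
    # Whole packages the logic-die stockpile can fill.
    packages_from_logic = logic_dies // dies_per_package
    # Whichever input runs out first (logic dies or HBM) caps the count.
    packages = min(packages_from_logic, hbm_sets)
    # Packaging losses remove some finished parts.
    good_packages = packages * packaging_yield
    return good_packages * perf_vs_h100


if __name__ == "__main__":
    # ~2M 910B dies, HBM for ~1.4M packages: logic-limited at 1M packages.
    print(h100_equivalents(2_000_000, 1_400_000))
    # A lower, hypothetical packaging yield shrinks the total further.
    print(h100_equivalents(2_000_000, 1_400_000, packaging_yield=0.7))
```

Under these assumptions the result is closer to 0.8 million than 1 million H100 equivalents, so the round "million" figure is best read as an order-of-magnitude estimate; lowering `packaging_yield` shows how a weaker packaging process would eat into it.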

So even though these chips are now controlled, they were stockpiled previously. A similar thing, by the way, happened with the actual NVIDIA chips. We talked about that back in the day, when NVIDIA

essentially knew the US government was going to bring in new export controls to prevent them, for example, from shipping (at the time, I guess) Hopper chips and Ampere chips like A100s. And what they do is, knowing the export control is going to come in, they try to push as many of these chips into the Chinese market as they can. In fact, they

prefer Chinese customers over American ones for that window, because they know they'll still be able to sell to America once the export controls come in. So in a way, through incentives, Huawei and NVIDIA are essentially pushed into partnering to jack up Huawei's supply of everything, including ready-to-go chips. So the stockpiling strategy is something that happens all the time. China's really good at it. It complements the illicit acquisition that happens

after the export controls come in as well. And the net result is they end up with a lot of these chips. You shouldn't think of export controls as being meant to achieve a perfect block

on Huawei or SMIC or any of these companies acquiring technology. It's a matter of slowing them down, and that compounds over time to create more of a gap. But anyway, it's really interesting: when you get to a million H100 equivalents in the Chinese market, just from Huawei, one thing you've got to think about is that the CCP is really good at centralizing

their compute. So if they want to do a big, big training run, a national-scale project, they can do that much more easily than the US. Even though we're producing way more chips, ours are spread out across a whole bunch of different hyperscalers and smaller companies. In principle, China can just step in and say, hey, we're commandeering these chips, throwing them together, and doing a really big training run. So that's an important distinction, and it's why that million H100 equivalents we could see in 2025 from just Huawei is pretty remarkable and important.

And we have a follow-up to that story. As you mentioned, the other article that came out along with this one reports that Huawei acquired 2 million Ascend 910 AI chips from TSMC last year through shell companies. So a little bit more on that: they seemingly acquired more than 2 million Ascend 910B logic dies. The 910C is

kind of a combination of two 910Bs, from what I could tell. And they did go around TSMC: they didn't get the product from TSMC directly. TSMC seemingly caught this happening and halted the shipments after an internal investigation.

So this was kind of an unintended partnership, you could say. And from what I also saw, it seems to be a seven-nanometer process. So it's not the most advanced technology TSMC has, which is probably not surprising; other customers are using up all that capacity. But it does demonstrate that, as we know, even with export controls in place, we've seen a lot of

leakage through export controls. And this is seemingly a pretty big example. Absolutely. And the fact is, as you alluded to, right, the seven-nanometer process they're using is something SMIC can do domestically, in variants with debatable yields and other properties. SMIC being China's

TSMC, which also was founded based on very clearly TSMC-derived IP that was effectively stolen. A pretty cool story, I will say, SMIC. There are some interesting people from TSMC who... It's a real story, let's say.

Yeah, yeah, yeah. It's sort of a classic Chinese corporate espionage story, right? Like, poach some of the most senior figures at the company and have them come over. And I think they stood up SMIC and got it to reasonable production on a

close-to-leading node in suspiciously close to record time; I think it might have been 12 months or something outrageous like that. So an unheard-of speed of takeoff, which is part of what triggered the whole suspicion from TSMC that this was going on, and lawsuits galore and all that.

So yeah, yeah. I mean, essentially China has a pretty solid domestic capacity to produce chips. Huawei is roughly their NVIDIA; SMIC is roughly their TSMC. But they're both under the same roof, if you will, under the CCP umbrella. So you could see a more integrated partnership there as they form one kind of Huawei-SMIC complex, a bit tighter than NVIDIA-TSMC. But one important difference from TSMC:

SMIC does not have access to EUV machines. And so they're pretty much bottlenecked at the seven-nanometer node. Maybe they can push to five nanometers with multi-patterning, but it seems like they're going to run out of steam pretty quickly. And the other interesting thing, too, is if you look at TSMC, we've talked about

this a lot, but their leading node is all, you know, iPhones. So that means it's the node one step behind, right now the five-nanometer/four-nanometer node, that goes off to GPUs. Well, you don't have that with SMIC. SMIC has to balance their leading node at seven nanometers between, you know, Huawei smartphones and other smartphones and the GPU supply. So there's a lot of interesting stuff going on here. Yields seem to kind of be shit at SMIC. The last

time I checked, I think it was around 75% or so, which is not

economically great. But when you have, you know, the Chinese government subsidizing you to blazes, you don't necessarily need the yields you'd need to be economically viable if you were TSMC. So anyway, yeah, interesting situation. And definitely, they've gotten their hands on an awful lot of these illicit chips. We've covered a lot of stories like this in the past, and this honestly hasn't come as too much of a surprise to a lot of people I talk to on the export control side. But anyway, it's all kind of locked in.
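To make the yield point concrete, here is a minimal Python sketch of cost per working die. The wafer cost, dies per wafer, and subsidy are made-up illustrative numbers, and `cost_per_good_die` is a hypothetical helper; the only idea it encodes is that defective dies are paid for but unusable, so a lower yield spreads the wafer's cost over fewer sellable parts, while a subsidy offsets that.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int,
                      yield_rate: float, subsidy_per_wafer: float = 0.0) -> float:
    """Effective cost the fab bears for each working die.

    Every die on the wafer is paid for, but only yield_rate of them work,
    so the (subsidized) wafer cost is divided by the good dies only.
    """
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    good_dies = dies_per_wafer * yield_rate
    return (wafer_cost - subsidy_per_wafer) / good_dies


if __name__ == "__main__":
    # Illustrative numbers only: a $10,000 wafer holding 100 candidate dies.
    for y in (0.9, 0.75):
        print(f"yield {y:.0%}: ${cost_per_good_die(10_000, 100, y):,.2f} per good die")
    # A hypothetical $2,000-per-wafer subsidy at 75% yield.
    print(f"subsidized: ${cost_per_good_die(10_000, 100, 0.75, 2_000):,.2f}")
```

With these inputs, a 75% yield plus the subsidy comes out cheaper per good die than a 90% yield without one, which is the sense in which state support can stand in for yield.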

And now let's take a short break from talking about chips; we'll get right back to it. A quick story about investing: we now know that Google has a substantial investment in Anthropic. It seems that Google owns 14% of Anthropic.

That doesn't come with any sort of control mechanisms, though. They don't have voting rights, board seats, or board observer rights, something Microsoft has had at OpenAI, for example. In total, Google has invested over $3 billion in Anthropic. So it's kind of an interesting note: it seemed like Anthropic was aligned with Amazon as their primary, let's say, ally, sort of analogous to OpenAI and Microsoft.

And Google investing this much in sort of a rival is, at least to me, a bit of a surprise.

Yeah, I mean, they're going to be hedging their bets. I guess if you're Google, you look at SearchGPT, you look at Perplexity, and obviously the search space is changing. Sooner or later, someone's going to do something that goes after your search market share in a big way. And because Google owns so much of the search market, well over 90%, and because search represents such a big fraction of Google's overall revenue,

they kind of have no choice but to make sure they own a piece of the search pie wherever it goes in the future. But still, yeah, it's an interesting play. One of the consequences of the Google and Amazon investments in Anthropic is Anthropic's increasing reliance on TPUs and Trainium chips, Trainium being

what Amazon has and TPUs being what Google has. We've covered stories that involve that and some of the challenges associated with training on that kind of infrastructure. But yeah, it is interesting. It's also something we're learning about because of the antitrust case that's been brought

against Google, looking into basically, you know, are you controlling too much of the market? One thing we do know is that Google is now being required to notify antitrust enforcers before investing in any more AI companies. This is based on a revised Justice Department proposal

that was filed Friday. So if that holds, it's an interesting requirement to kind of pre-register what they're going to do. There was an initial proposal, by the way, that would have required Google to fully unwind its investments in companies like Anthropic, but that's apparently no longer on the table. That would have been a very big deal, right? So as you can imagine, Google pushed back really hard. There's also a whole bunch of other stuff the DOJ has proposed, including a forced

sale of the Chrome web browser, that is still on the table. The claim here is that the government is, quote, concerned about Google's potential to use its sizable capital to exercise influence in AI companies. So yeah, no surprise there, but it's still forcing a bit more disclosure than we'd normally get, and it's interesting to note the percentage ownership and the board structure.

And as promised, going right back to chips, and now to Meta: we've got the story that they're reportedly testing an in-house chip designed for AI training. This is the one made in collaboration with TSMC, and it's in the initial testing, small-deployment phase. Meta has used custom chips for inference, but not for training.

And we have reported on them doing this kind of development and trying to essentially have something to compete with TPUs.

So it seems interesting. I don't know what sort of timeline is safe to project for this kind of project; I would imagine it would take years of engineering. So I don't know if them doing testing in-house is indicative of very rapid progress, or where they're at. Yeah.

Yeah, I mean, one interesting thing is I haven't seen any word of collaboration between Meta and a separate entity that would help them with chip design. OpenAI famously partnered with Broadcom, and so did Google, right, to make the TPU. We're not seeing any indication of that here with Meta, so it does seem like they're fully going in-house with this. They do seem to think of their

inference chips as having been a big success, though those are only being used for recommender systems right now. Obviously that's a huge part of Meta's business, right? Serving up ads and content. And recommender systems at scale have specific requirements that Meta's tracking. As for where we are now, to speak to your question about timeline: right now Meta's finished what's called their first

tape-out of the chip. This is basically a threshold where you send an initial design through a chip factory. And this is super costly, right? Tens of millions of dollars. Well, not super costly for Meta, but anyway, tens of millions of dollars, and it can take three to six months to complete, with no guarantee that things will actually succeed at the end of the day. And if there is a failure,

which, by the way, has happened before for Meta at this very stage (they've gotten to this stage before with previous chips meant for the same thing), then they have to go back, diagnose the problem, and repeat the tape-out step, which presumably sets them back that additional three to six months.
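The schedule risk described here can be sketched with a simple Python model, assuming each tape-out attempt takes a fixed number of months and succeeds independently with the same probability. Both numbers below are hypothetical; Meta's actual odds aren't public. Under that assumption the number of attempts is geometric, so the expected time is the per-attempt time divided by the success probability, and the Monte Carlo function checks that closed form.

```python
import random


def expected_months(months_per_attempt: float, success_prob: float) -> float:
    """Closed form: a geometric number of attempts has mean 1 / success_prob."""
    if not 0.0 < success_prob <= 1.0:
        raise ValueError("success_prob must be in (0, 1]")
    return months_per_attempt / success_prob


def simulate_months(months_per_attempt: float, success_prob: float,
                    trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity, as a sanity check."""
    if not 0.0 < success_prob <= 1.0:
        raise ValueError("success_prob must be in (0, 1]")
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        attempts = 1
        # Each failed tape-out means diagnosing the problem and trying again.
        while rng.random() >= success_prob:
            attempts += 1
        total += attempts * months_per_attempt
    return total / trials


if __name__ == "__main__":
    # Hypothetical: 4.5 months per attempt (midpoint of three to six), 50% odds.
    print(expected_months(4.5, 0.5), simulate_months(4.5, 0.5))
```

Under this model, halving the odds of success doubles the expected wait.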

And the in-house custom inference chip they built previously had flopped just before the small-scale test deployment, which is the stage we're at right now. Meta wants to do this little deployment and see how things work in practice. And the interesting thing is, after the first time they had this kind of in-house design blow up in their face, they probably

had to figure out an alternative strategy, right? Like, we need to have the compute to do what we were going to do with these chips. And so they were forced to place a multi-billion-dollar GPU order with NVIDIA, which then, you know, gave them a later start on that as well. So it's this interesting balance: how do we hedge our downside and make sure we have standing orders with NVIDIA, say,

while also having independence from NVIDIA? So we kind of need to double dip and invest in in-house chip design. So yeah, in the case of this particular chip we're still seeing a recommender-system focus, though the plan is that eventually they want to start using their own chips for training. And that would be, they think, around 2026.

And one more story about hardware, this time data centers. xAI has bought a 1-million-square-foot site for a second Memphis data center. This is an $80 million acquisition. They are seemingly aiming for this new data center to be able to support up to

350,000 GPUs, up from the 100,000 (or is it 200,000? I've lost track) in their existing Memphis facility. Yeah, the initial rollout was 100,000, and now they have a plan to double it exactly. So your 100 or 200,000 is exactly right; it depends on when. And yeah, the interesting thing with this new facility they're standing up is that it's next to

the Southaven Combined Cycle natural gas power plant, and that generates about 780 megawatts of power. So then there's the question of, okay, sure, but how much of that power is already in use? For context, when you think about one megawatt of power, to order of magnitude that gets you about a thousand GPUs. So one GPU is around, well, a little over a kilowatt

that you're going to consume, which is about the power consumption of one home, right? An average home in America. So if you look at 780 megawatts of power, if you had it all available, which of course you do not, because there's industry and there are houses using it and all that, and you just poured it into GPUs, you could already get to the several hundred thousand GPUs powered by that, which is exactly where you're getting that 350,000,

presumably Blackwells, that they'd be looking at there. The other thing we know is that apparently Memphis Light, Gas and Water, which is a local energy company, said that xAI has requested a system impact study for up to 260 megawatts of power.

And presumably that means they only expect, at least in the near term, to use 260 megawatts. If that's the case, then you're looking more at maybe 200,000 GPUs, depending on power usage effectiveness (PUE) and a whole bunch of other factors that go into your data center. But generally speaking, this is another big build. The data center apparently will be home to what they claim will be the world's largest deployment of Tesla Megapack batteries as well. That matters because when your power goes in,

sometimes you get power spikes. This is actually a big problem with NVIDIA GPUs that they're trying to sort out right now. Essentially, when you start the training process, you get a massive burst of power consumption, which can be on the order of 30% or so. That's a real problem, because your power infrastructure may not actually be able to handle that. For that reason, you often want batteries connected to your data center that can deal with that spike in power demand. And that's where the Tesla Megapacks become important.

They act as a kind of capacitance. They're also there for when the grid load is just too high or you just need to inject more power for whatever reason. So yeah, a really big build. xAI continues to be at the forefront of this stuff, right? They haven't had a big Stargate-style announcement, but when you look at the numbers, they're actually up there with OpenAI right now.
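The power arithmetic in this exchange can be sketched in Python. `gpus_supported` divides a site's power budget by the all-in draw per GPU times the facility's PUE; the 1.0 kW per GPU and PUE of 1.3 used below are assumptions chosen to illustrate the rough figures discussed, not xAI's numbers. `peak_shave` is a toy model (hypothetical, not how Megapacks are actually controlled) of a battery absorbing demand above a grid cap, such as a ~30% spike at the start of training, and recharging from spare headroom afterward.

```python
def gpus_supported(site_mw: float, kw_per_gpu: float, pue: float) -> int:
    """How many GPUs a power budget can carry.

    kw_per_gpu is the all-in draw per GPU (chip plus its share of the
    server); pue (power usage effectiveness) scales that for cooling
    and other facility overhead.
    """
    return round(site_mw * 1000 / (kw_per_gpu * pue))


def peak_shave(load_mw: list[float], grid_cap_mw: float,
               battery_mwh: float, step_hours: float) -> list[float]:
    """Grid draw per time step when a battery covers load above grid_cap_mw.

    The battery starts full, discharges whenever load exceeds the cap, and
    recharges from spare grid headroom otherwise. Raises if a spike
    outlasts the stored energy.
    """
    charge = battery_mwh
    grid_draw = []
    for load in load_mw:
        if load > grid_cap_mw:
            needed = (load - grid_cap_mw) * step_hours  # MWh the battery must supply
            if needed > charge + 1e-9:
                raise RuntimeError("battery exhausted: spike exceeds stored energy")
            charge -= needed
            grid_draw.append(grid_cap_mw)
        else:
            headroom = (grid_cap_mw - load) * step_hours
            recharge = min(headroom, battery_mwh - charge)
            charge += recharge
            grid_draw.append(load + recharge / step_hours)
    return grid_draw


if __name__ == "__main__":
    print(gpus_supported(260, kw_per_gpu=1.0, pue=1.3))  # the 260 MW request
    # Hypothetical 15-minute steps: a 200 MW load spiking ~30% to 260 MW.
    print(peak_shave([200, 260, 200], grid_cap_mw=230, battery_mwh=10, step_hours=0.25))
```

In the toy run, the grid never sees more than the 230 MW cap: the battery covers the spike, then refills during the next step.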

And on to our last story. It's not a business section if you don't have a $100 million AI startup, as ever, so we've got a new one. And yet again, it's founded by ex-DeepMind researchers. They are launching Reflection AI: two former Google DeepMind researchers, with $130 million in early-stage funding.

Not too many details that I was able to find here. The co-founders are Misha Laskin and Ioannis Antonoglou. I don't know how that's said. They worked on Gemini training systems, and now they're aiming to develop superintelligence, much like SSI and others in that space. Seemingly, they're starting by building an autonomous programming tool.

Yeah, it's $130 million in funding, not a ton of money. It sort of makes me think of Thinking Machines, you know, that Mira Murati startup. You're seeing a lot of superintelligence companies that aren't raising the giant amounts that

the scaling laws suggest, at least nominally, you would need to compete. But, you know, these are smart people, so we'll see. By the way, the cap table is pretty wild, right? Their seed round was $25 million. This is all being announced at the same time, by the way. So they're coming out and saying, hey, it's not just one fundraise of 130 million; we actually raised a seed at 25 million and then a 105 million Series A after that. The seed round was led by Sequoia,

so basically the best VC on planet Earth. Then the Series A was led by Lightspeed Venture Partners, so a really, really solid VC. And then there are other investors: Reid Hoffman, right? LinkedIn co-founder. Alexandr Wang from Scale AI. That's really interesting because he's historically been a kind of AI-safety-focused guy, including on the loss-of-control line and stuff. And then there's SV Angel and, importantly, the VC arm of NVIDIA.

That's a really, really big partner to have on hand. We saw how it moved the needle for CoreWeave in terms of getting GPU allocation. So that's kind of interesting. Latest valuation: half a billion dollars, not half bad. I would take that. Anyway, we'll see. They have paying customers, by the way, so that's at least different from Safe Superintelligence. So there's that. But as you said, it's really unclear what exactly they're doing. Apparently they're just working with

fields that have large coding teams, such as the financial services and technology sectors. So very specific. Right. Yeah. In their blog post, they mention, for instance, imagining autonomous coding agents working tirelessly in the background, taking on the workloads that slow teams down. So even though they say they're working on superintelligence, it does seem like,

in practice, they're producing a product that is more of a tool to be used, similar, for instance, to Claude Code from Anthropic, which was just released. Coding seems increasingly like the new frontier, letting an agent do its thing. So I could see this coming out as a product relatively soon.

And on to projects and open source. We have a couple of exciting new models, starting with Gemma 3 from Google. Gemma is kind of a little sibling of Gemini, and this is a multimodal addition to that family. They have it at scales from 1 to 27 billion parameters.

The new thing here is vision understanding capabilities. They also cover more languages (35) and a longer context of 128,000 tokens, with some architectural changes to leverage that context effectively.

They mention that this was done via distillation, and, as you might expect, it's much better than Gemma 2 in both the pre-trained and fine-tuned versions. So Gemma is another one of these small-to-medium-scale models. Increasingly you are seeing these 12, 20, 27 billion parameter models,

and you see them being pretty performant. Gemma 3 27B, they say, has an Elo score on Chatbot Arena that is higher than DeepSeek V3, for instance, and also higher than Llama 3 405B.
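For a sense of what an Elo gap on Chatbot Arena means in practice, the classic Elo expected-score formula converts a rating difference into a head-to-head preference probability. This is a minimal Python sketch; Chatbot Arena fits its ratings with a related Bradley-Terry model, so treat the numbers as approximate, and the 1300 base rating below is an arbitrary placeholder.

```python
def elo_win_probability(rating_a: float, rating_b: float) -> float:
    """Expected probability that model A is preferred over model B.

    Standard Elo logistic curve: a 400-point gap corresponds to 10:1 odds.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


if __name__ == "__main__":
    for gap in (0, 25, 50, 100):
        print(f"{gap:>3}-point lead -> {elo_win_probability(1300 + gap, 1300):.1%}")
```

A 50-point lead works out to roughly a 57% preference rate, so modest Arena gaps are real but far from lopsided.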

Actually, they have a nice handy illustration too on that Chatbot Arena Elo score chart, where they show the estimated GPUs you need to run each of these models. And I think that's actually a really important dimension that Google is articulating better than I've seen it articulated before. They're referring to this as the world's best single-accelerator model. In other words, single-GPU model.

And we'd previously been referring to this as the Kurenkov scale of model size. Basically: how small does a large language model have to be before it's not called large anymore? Anyway, there's been this debate, right, that a model is not truly open source if it requires so much hardware to run that

you might as well be a big company to run it, right? So if you open source a model, as DeepSeek did with R1, that requires dozens of GPUs to run, yeah, you've open sourced it, the code's out there, that's great. But this model has, you know, 671 billion parameters;

you just can't fit that on one GPU. So is it really there for the people? Can the people really use this meaningfully? And the answer is kind of: you can have companies that run their own instance of it, and that is a kind of open sourcing, but everything's along a spectrum. The big flashy point here is one GPU to rule them all, one GPU to run this entire model. I will say there's an important distinction when we talk about what is and isn't impressive in this space. So

you might look at DeepSeek R1 and go, oh my God, that's almost 700 billion parameters. Compare that to Gemma 3, 27 billion parameters, a fraction of that; this must mean that Google just knows what they're doing a lot better. The answer is that these are fundamentally different use cases. With DeepSeek R1, when you actually

push a token through it and do inference, you're only using about 37 billion of the model's parameters. Not all parameters get activated; it is a mixture-of-experts model. MoEs tend to have far more parameters relative to their performance. That's just how they're set up.
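A toy Python sketch of the mixture-of-experts point: a router scores every expert for each token, but only the top-k experts run, so the parameters touched per token are the shared parameters plus k experts' worth. The layer sizes are made-up toy numbers, and `top_k_experts` and `moe_param_counts` are hypothetical helpers, not DeepSeek's code.

```python
def top_k_experts(router_logits: list[float], k: int) -> list[int]:
    """Indices of the k highest-scoring experts for one token."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    return ranked[:k]


def moe_param_counts(shared_params: int, params_per_expert: int,
                     num_experts: int, k: int) -> tuple[int, int]:
    """(total, active-per-token) parameters for a simple MoE model.

    shared_params covers everything every token uses (attention,
    embeddings, any always-on expert); routed experts only count
    toward 'active' when selected.
    """
    total = shared_params + num_experts * params_per_expert
    active = shared_params + k * params_per_expert
    return total, active


if __name__ == "__main__":
    # Toy router output for one token over 8 experts; only 2 will run.
    logits = [0.1, 2.0, -1.0, 0.5, 0.3, -0.2, 1.1, 0.0]
    print("experts used:", top_k_experts(logits, k=2))
    total, active = moe_param_counts(shared_params=10, params_per_expert=5,
                                     num_experts=8, k=2)
    print(f"total={total}, active={active} ({active / total:.0%} per token)")
```

This is why total parameter count overstates per-token compute for an MoE, while the memory needed to host the model still scales with the total.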

So you typically go with an MoE when you care more about performance than about the infrastructure costs of hosting the model, whereas you might go with a smaller, monolithic model if you want to compress it and have it running on the edge or something with as high performance as you can. So that's part of the trade-off here. These are just different things to care about. But certainly if you care about being able to run this locally on your own hardware, this is a big step forward. And again, I like

this line, the world's best single-accelerator model. It's better than some of the previous statements we've heard, like the world's best 7 billion parameter model or 22 billion, because those just seem too specific. This ties it to something we actually care about. And that's a moving target too, right? Accelerators are improving all the time, but it still feels more concrete and useful.
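The "single accelerator" framing comes down to whether the weights fit in one device's memory. This rough Python lower bound counts weights only (no KV cache, activations, or runtime overhead) and assumes an 80 GB accelerator, with 2 bytes per parameter for bf16 or 1 for fp8; these are illustrative assumptions, not Google's or DeepSeek's stated deployment configurations.

```python
import math


def accelerators_for_weights(num_params: float, bytes_per_param: float,
                             accel_memory_gb: float = 80.0) -> int:
    """Minimum number of accelerators needed just to hold the weights.

    A lower bound: real serving also needs room for the KV cache,
    activations, and framework overhead.
    """
    weight_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weight_gb / accel_memory_gb)


if __name__ == "__main__":
    print("27B dense, bf16:", accelerators_for_weights(27e9, 2))
    print("671B MoE, fp8: ", accelerators_for_weights(671e9, 1))
    print("671B MoE, bf16:", accelerators_for_weights(671e9, 2))
```

A 27B model at bf16 is about 54 GB of weights and fits on one such card, while 671B parameters need several cards even at one byte each; that is the gap the "single accelerator" label points at.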

Right. And as with other releases of this flavor, you can get the weights and the code to run it on Hugging Face, for instance, and there's a proprietary license that allows, quote, responsible commercial use. So there's a bunch of things you're not allowed to do, let's say broadly bad things. A lot of restrictions, unlike something like Grok.

So yeah, there are so many of these good, smaller models these days. And I think that really showcases the power of distillation when you train a giant model like Gemini 2.
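Since distillation comes up here, a minimal Python sketch of the classic soft-target distillation loss: the student is trained to match the teacher's temperature-softened output distribution. This illustrates the general technique only; the source doesn't describe Google's actual Gemma 3 recipe, and the temperature of 2.0 is an arbitrary choice.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss(teacher, [3.5, 1.2, 0.4]))  # close student, small loss
    print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # mismatched student, larger loss
```

The soft targets carry more information per example than one-hot labels (how the teacher ranks the wrong answers), which is part of why a small student can inherit so much from a giant teacher.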

Next story, we have a model from Sesame. We just covered their virtual assistant Maya pretty recently, this pretty impressive conversational AI you could talk to and have a sort of naturalistic interaction with, like

we had seen demoed from, let's say, OpenAI, for example. So they have now released the base model, CSM-1B, that powers that model, sorry, that personal assistant Maya. It's 1 billion parameters in size and available under the Apache 2.0 license, meaning you can use it for commercial applications with relatively few restrictions. And

they have, of course, a fine-tuned version of CSM-1B for Maya. So this isn't the exact same model they used for the demo they launched a couple of weeks ago, but you can use it as a very powerful starter model to do, for instance, voice cloning with relatively little data, and it's capable of producing really pretty naturalistic speech. So

this is an area where you don't have too many models capable of producing really good outputs. For audio generation in general, there's less open source stuff than for LLMs. And this is certainly a pretty exciting new model for that application.

Yeah, it's also not a monolithic model; it comes in parts. You have a base Llama model, which is kind of the backbone, and then it's paired with a decoder component. That's what they fine-tuned to build Maya, anyway. So it is kind of interesting. The training data was

not revealed by Sesame, so we don't actually know what went into it. They say it's capable of producing a variety of voices but has not been fine-tuned on any specific voice. They also say the model has some capacity for non-English languages due to data contamination in the training data, but likely won't do well. They're also not including any real safeguards. There's an honor system: they just urge developers and users not to use the model to mimic a person's voice without consent. So

Cool. That's good shit. The journalist who wrote this, I guess, said he tried the demo,

and apparently cloning your voice on the system takes less than a minute. From there, it was easy to generate speech "to my heart's desire," including on controversial topics like the election. So that's fun. Sesame, by the way, is well funded, or at least, I shouldn't say well funded, but their cap table looks really good. They've got Andreessen Horowitz and Spark Capital, so really top-line VCs. And yeah, it's

interesting. As you say, it is a differentiated space. We'll see how long it remains differentiated. If we end up in a world of multimodal models that eat everything, then maybe they get gobbled up by OpenAI. But certainly for now, we haven't seen that many of these models.

And just to cover a couple more stories, jumping back to the smaller large language model side, we have a new model from Reka AI, Reka Flash 3, which they say is a general-purpose reasoning model trained from scratch using a combination of public and synthetic datasets.

In their comparison, it's able to go head to head with o1-mini and QwQ-32B, and this is a 21 billion parameter model. Overall, not super strong. It has a relatively short context length of 32,000 tokens. It also has a budget-forcing mechanism, which allows you to limit the model's thinking,

and, similar to Gemma 3, it's possible to run on a single device. So it's not exactly leading the pack on the open source front, but it's an ever-useful smaller model for other people to build upon. Yeah.
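A rough Python sketch of the general budget-forcing idea: cap the number of reasoning tokens, and if the cap is hit, append an end-of-thinking marker so the model moves on to its answer. The `</think>` marker and the token stream are hypothetical stand-ins; this is not Reka's actual interface.

```python
from collections.abc import Iterable

END_THINK = "</think>"  # hypothetical end-of-reasoning marker
_DONE = object()        # sentinel for an exhausted token stream


def budget_forced_thinking(tokens: Iterable[str], budget: int) -> list[str]:
    """Keep at most `budget` reasoning tokens, then force the end marker.

    `tokens` stands in for a model's streamed reasoning. If the model closes
    its reasoning on its own within budget, that output is kept unchanged;
    otherwise no further tokens are pulled once the budget is spent.
    """
    stream = iter(tokens)
    kept: list[str] = []
    while len(kept) < budget:
        tok = next(stream, _DONE)
        if tok is _DONE:
            break
        kept.append(tok)
        if tok == END_THINK:
            return kept
    kept.append(END_THINK)  # budget spent (or stream ended): force the answer phase
    return kept


if __name__ == "__main__":
    long_reasoning = (f"step{i}" for i in range(1_000))
    print(budget_forced_thinking(long_reasoning, budget=3))
    print(budget_forced_thinking(["short", END_THINK], budget=3))
```

Raising `budget` trades more tokens, and more latency, for more room to reason.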

Yeah, as ever with these models, especially for the smaller developers who just can't afford to keep up with frontier capabilities, it's all about what your comparison points are, right? Who you choose as your opponent. And in all their headline figures, they're mostly comparing to Qwen with Questions (QwQ) 32B, right? And on almost all these benchmarks, it's actually behind QwQ-32B.

But it is a smaller model. So I guess the case is, hey, we're almost as good as a 32 billion parameter model with a 21 billion parameter model. It's not obvious to me how much that buys in terms of impressiveness. One thing I will note:

generally, I would call this model on par with o1-mini, which dropped, when was that, late last year. So when you look at how fast open source has come, it's like four or five months behind. Granted, o1-mini is something OpenAI had been sitting on for a while,

right? And it was also their mini model; it didn't represent the true cutting edge of what they could do at the time. But still, open source being six or seven months behind something like that is a closing of the gap, and we've seen that trend hold for some time now. So it's not just Chinese open source models, but now Western open source models in all forms. So there you go, the rising tide of reasoning models.

Right. And on that point about it being a reasoning model, they do have some indicators of what you'd hope for from reasoning models: as you use more test-time compute, you get better accuracy on tough benchmarks like AIME. They show that here. If you go up to, you know, 20,000 tokens, you're able to do substantially better than with shorter outputs. So, yeah.

I guess it's significant in the sense that there aren't too many reasoning models. Of course, now we have R1, which is certainly a pretty big one, but as a smaller reasoning model, it's still significant.

And one more story on the open front. We actually have a paper we're not going to do a super deep dive on, but it's still worth noting. The report is Open-Sora 2.0: Training a Commercial-Level Video Generation Model in $200k. This report shows how they trained a pretty performant model, not quite at the top level

but relatively close, and better than other models like CogVideo and HunyuanVideo. And there's essentially a whole large bag of tricks they go through: data creation, training strategies, AI infrastructure, lots and lots of details that allow you to train this model for what is relatively cheap, $200,000.

I think it's always interesting, or exciting, to see these in-depth technical reports that really showcase the nitty-gritty of how to train this kind of model.

Yeah, and it really is always nitty-gritty when you look under the hood, right? The age of just coming up with a new architecture and, oh, it's beautiful, arguably never really existed, but now it really, really doesn't exist. Every time we get an open source release, we get to look under the hood, and it's all these, you know, optimizer tweaks and batching and finding ways to get your accelerators to work

efficiently, overlapping communication and computation, all these things. It's the engineering that determines the outcome, over and over again. So yeah, it's another example of that, now with Sora-type models. And you can see it on a $200K budget, right?

If you look at the benchmarks, yeah, it's pretty solid, a pretty solid set of win rates relative to comparable models. So what this means for people's ability to not just train, but also fine-tune their own video models, and eventually run inference super cheaply, that's pretty significant.

And on to research and advancements. We begin with an announcement from DeepMind. They are announcing what they are calling Gemini Robotics models, which are optimized for robotic control in a general-purpose way.

These are, I guess, built on top of Gemini 2.0 and are meant to really focus on the reasoning side of robotics. So there's Gemini Robotics itself, an advanced vision-language-action (VLA) model that incorporates physical actions as an output modality to control robots. They also have Gemini Robotics-ER, where ER stands for embodied reasoning, and they highlight its focus on advanced spatial understanding for, you know, things like motion prediction, physics prediction, 3D space, all that sort of stuff. They published a very meaty technical report with lots of details, with a lot of focus on the perception side and on the various general capabilities that are very useful in the context of embodiment.

With respect to the general-purpose nature of robotic control, they collected a bunch of data over the last 12 months with these ALOHA 2 robots, which are essentially two arms. They are then able to give the model an image and a text instruction, and the model plans and outputs code, and the code is then executed. I'm not even sure of all the details, but it's not quite the same as what I think Figure or 1X, one of those two, announced with a dedicated model for control. This is more of a planning model than an execution model, and as far as I can tell it's not real time. But either way, it's a really deep investment in the robotics space. They compare to their re-implementation of π0 (Pi Zero), where π0 was also meant to be a general-purpose robotics foundation model. And they show that this is capable of doing a whole bunch of manipulation tasks without necessarily fine-tuning, where you can just give it objects and instructions and it's able to pull things off.
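To make the "plan by writing code, then execute it" pattern concrete, here's a rough Python sketch of that general idea only. Everything in it is hypothetical: the skill names (`grasp`, `move_to_pose`, `release`), the `SkillLog` recorder standing in for a real controller, and the sample plan are made up for illustration and are not Gemini Robotics' actual interface. The restricted `exec` namespace is also just illustrative, not a real security sandbox.

```python
from typing import Callable, Dict, List, Tuple


class SkillLog:
    """Hypothetical stand-in for a low-level controller: it only records
    which primitive skills were invoked, and in what order."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, tuple]] = []

    def grasp(self, obj: str) -> None:
        self.calls.append(("grasp", (obj,)))

    def move_to_pose(self, x: float, y: float, z: float) -> None:
        self.calls.append(("move_to_pose", (x, y, z)))

    def release(self) -> None:
        self.calls.append(("release", ()))


def run_plan(plan_source: str, skills: SkillLog) -> List[Tuple[str, tuple]]:
    """Execute planner-generated code with ONLY the skill functions in scope.
    (Illustrative restriction; not a secure sandbox.)"""
    allowed: Dict[str, Callable] = {
        "grasp": skills.grasp,
        "move_to_pose": skills.move_to_pose,
        "release": skills.release,
    }
    exec(plan_source, {"__builtins__": {}}, allowed)
    return skills.calls


# A made-up plan of the kind a planner might emit for "put the banana away".
PLAN = """
grasp("banana")
move_to_pose(0.4, 0.1, 0.2)
release()
"""

if __name__ == "__main__":
    for name, args in run_plan(PLAN, SkillLog()):
        print(name, args)
```

The split mirrors the description: the planner decides *what* to do as a short program, and each call is handed off to something else (here a recorder; in the real system, presumably the learned action model) to decide *how* to do it.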

Yeah. And Gemini Robotics itself, as you alluded to, has a two-component architecture. There's the VLA backbone, which is a distilled version of Gemini Robotics-ER, right? Gemini Robotics-ER's job is essentially to understand the world and reason about, you know, spatial information and things like that. But then the action model itself is a local action decoder tacked onto the VLA backbone. So the distilled version of Gemini Robotics-ER runs in the cloud, and the thing that's local to the robot is the action decoder.

It runs on the onboard computer, it's a smaller model, and therefore it's low latency, so it is optimized for real-time control. Apparently the query-to-response latency has been reduced from seconds for previous iterations to under 160 milliseconds for the backbone, and the end-to-end latency, if you include the action decoder, is closer to a quarter of a second, 250 milliseconds. So, you know, that's pretty quick, getting into the domain where you can have real-time interactions with these systems. And importantly, 250 milliseconds means you're able to respond to what you see and touch in a relatively reasonable time period. I actually don't remember how long it takes humans to respond to stimuli in the environment; I wouldn't be surprised if it was in that ballpark. But this is, by the way, a supervised fine-tuned model, right? So you're looking at the collection of a huge amount of data: thousands of hours of expert demonstrations that they say they collected over 12 months, a whole bunch of diverse tasks, and non-action data as well. So that itself is interesting.

You don't usually expect RL to be used in this context, because RL is just super sample-inefficient. And there are safety reasons too: unless you've got a really, really high-fidelity sim-to-real transfer, you're potentially going to be doing some of this stuff in the real world, and there are safety issues with just using RL there. But in any case, yeah, they say they collected a bunch of teleoperated robot action data on their ALOHA 2 robots, and that's what was used for supervised fine-tuning. And the result is, I mean, pretty impressive. It kind of feels like it follows in the tradition of Gato, the sort of truly multimodal models that can do anything, including control robots and understand video, just trying to stuff it all in there to get to true AGI. It is a very Google DeepMind paper in that sense.
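To put the latency figures from earlier in this discussion in perspective, here's some back-of-the-envelope arithmetic as a small Python sketch. The 160 ms backbone and 250 ms end-to-end numbers are the ones mentioned above; treating the 90 ms difference as "everything after the backbone responds" and assuming cycles run strictly one after another (no pipelining) are simplifying assumptions, and the 0.5 m/s arm speed is a hypothetical value chosen only for the example.

```python
# Figures mentioned in the discussion (milliseconds).
BACKBONE_MS = 160.0    # cloud backbone query-to-response latency
END_TO_END_MS = 250.0  # backbone plus on-robot action decoder


def remaining_budget_ms(end_to_end_ms: float, backbone_ms: float) -> float:
    """Time left after the backbone responds (network transfer, local decoding, etc.)."""
    return end_to_end_ms - backbone_ms


def replans_per_second(end_to_end_ms: float) -> float:
    """Full perceive-plan-act cycles per second if each cycle waits for the
    previous one to finish (no pipelining: a simplifying assumption)."""
    return 1000.0 / end_to_end_ms


def distance_moved_mm(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance an arm moving at constant speed covers before the system reacts.
    Units: (m/s) * ms = mm."""
    return speed_m_per_s * latency_ms


if __name__ == "__main__":
    print(remaining_budget_ms(END_TO_END_MS, BACKBONE_MS))  # budget after the backbone
    print(replans_per_second(END_TO_END_MS))                # sequential cycles per second
    print(distance_moved_mm(0.5, END_TO_END_MS))            # hypothetical 0.5 m/s arm
```

With these numbers, about 90 ms remain after the backbone, a strictly sequential loop manages 4 full cycles per second, and an arm moving at 0.5 m/s travels 125 mm before the system reacts. Real systems can overlap stages, so this is a simplification, not a measurement.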

Right. They have some fun examples of things you can do: it can make an origami fox or pack a lunchbox. And to give you an idea, the ALOHA 2 robots are these little two-gripper setups with relatively inexpensive hardware, as far as I can tell, but they're still pretty capable in terms of what they're able to pull off. And yeah, I guess I should correct myself a little bit. This isn't an end-to-end image-to-control model, as far as I can tell, because of the intermediate code output. But for the execution of individual code commands, I guess they are using that Gemini Robotics vision-language-action model to execute things like grasping objects or moving to a particular pose, which the planning stage outputs. I don't believe this is released yet; I need to double-check. But either way, we saw this with π0 (Pi Zero), and this is pretty much comparable to that in claiming to be a robot foundation model. And I think Figure and 1X are also working on similar things, general-purpose, capable robotics models. So it really does seem like there's a pretty significant amount of progress in this space, and it is pretty conceivable that we can have broadly capable embodied AI agents in the coming years, which has pretty significant implications for, let's say, economic impacts and so on.

For sure. I mean, I haven't read it yet, but there's this post where they're talking about the implications for China of a lot of the breakthroughs in robotics.

I mean, this sort of makes me think of that, right? To the extent that we're building really, really good software, and to the extent that controlling robots is a software problem, then pretty quickly, if China replicates that, which they will, your ability to just manufacture robots at scale becomes one key, key determinant of national power. And China is just mopping the floor with us on that. So that'll be an interesting thread to follow for sure.

Yeah, and there's even more; there's a lot bundled with this announcement. As a fun side detail, they also released the ASIMOV benchmark. There's another paper called Generating Robot Constitutions & Benchmarks for Semantic Safety. They say that the ASIMOV benchmark is a comprehensive collection of datasets for evaluating and improving the semantic safety of foundation models serving as robot brains.

So that's pretty fun, I guess. They are focusing on the safety side to keep Skynet from happening. They also have another dataset released as part of this; I think it's ERQA. Anyway, it's a visual reasoning dataset with a focus on embodied reasoning. So there's a lot of variety in the means of benchmarking for embodied applications in particular.

Next, we move away from robotics back to the favorite topic of recent months, test-time compute and reasoning. We have the paper Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning. The focus here is not on enabling test-time compute and reasoning; it's on making test-time compute usage efficient. That has been kind of a known problem, and you covered, I think, a paper on this idea of overthinking, where you use more test-time compute than necessary. They have an interesting figure in the paper showing that in many cases, just doing a majority vote, so generating many outputs and then choosing the answer that most of them agree on, can actually outperform test-time compute scaling.

In fact, test-time compute is maybe not as efficient as just doing inference with shorter outputs a bunch of times.
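The majority-vote baseline is simple to state in code. Here's a minimal Python sketch, assuming each sample has already been reduced to a final answer string (or `None` if no answer could be extracted); `sample` is a hypothetical stand-in for a call to the model that produces one short, independent answer, and the idea being illustrated is spending a budget on several short samples rather than one long trace.

```python
from collections import Counter
from typing import Callable, List, Optional


def majority_vote(answers: List[Optional[str]]) -> Optional[str]:
    """Most common final answer among the samples, ignoring samples with no answer."""
    valid = [a for a in answers if a is not None]
    if not valid:
        return None
    # most_common orders tied counts by first occurrence, so ties go to the earliest answer.
    return Counter(valid).most_common(1)[0][0]


def vote_with_budget(sample: Callable[[int], Optional[str]], n: int) -> Optional[str]:
    """Draw n short, independent samples (hypothetical `sample` hook) and vote."""
    return majority_vote([sample(i) for i in range(n)])


if __name__ == "__main__":
    print(majority_vote(["42", "17", "42", None, "42", "17"]))
```

Ties go to the answer seen first, because `Counter.most_common` orders equal counts by first occurrence.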

So in any case, they are introducing a method to optimize test-time compute efficiency via meta reinforcement fine-tuning. Reinforcement learning is learning to optimize your reward for a given task. Meta reinforcement learning, or fine-tuning, is about being able to adjust quickly to a given task. So it's kind of a meta layer, right? You're learning to achieve good rewards efficiently without much feedback. And they do that by providing a dense reward during the reasoning process, similar to process reward models. They chunk the test-time reasoning steps into episodes, and they provide a reward for each episode that indicates how much progress it represents towards solving the problem. Then they are able to train the model to pretty much minimize regret, that is, to make rapid progress. They say they're able to show a two to three times improvement in performance for math reasoning compared to outcome-based reinforcement learning, where you just get a zero or one reward at the end. So that's pretty significant, along with a 1.5x gain in token efficiency.

Yeah, it sort of reflects the classic exploration-exploitation trade-off that you see in reinforcement learning. I mean, I would argue you see it basically anywhere, but reinforcement learning is the place where it's most obvious, with its mathematical formulation. Essentially, at any given step, if you're a language model and you're trying to reason through some reasoning trajectory to solve a problem, you can kind of choose: do I just generate an output right now, which is very compute-efficient? Like, I'm not going to spend a lot of FLOPs, I'm just going to do a quick inference, and the job is done. Or do I spend more time doing discovery, doing exploration, testing out different possible solutions, right? And certainly that exploration bit is important. We know that because when we look at models like R1-Zero that are just trained by reinforcement learning to reason as much as they want to get to their solutions, what you see is the reasoning traces get longer and longer, and those traces correlate directly with higher and higher performance. So there certainly is value to longer reasoning traces that allow for more discovery. But the question is, like, is all of that discovery actually worth it? At a certain point, are you just mulling over the unmullable? Are you kicking a dead horse or whatever? Quite literally overthinking.

Yeah, exactly. Quite literally overthinking. Not necessarily because it'll give you a worse result, though potentially that's a thing, but also just because it's wasteful, right? That's the big thing. And when you think about the way our brains evolved, yes, there was evolutionary pressure to make good predictions so that we make smart decisions. But there's also a huge, huge pressure to be compute-efficient, or energy-efficient, right? The human brain runs on, I forget how many watts, but it's a shockingly small amount of energy. And that's often a distinction between us and AI systems anyway: we just face different constraints. So this is going to be an effort to ask:

Can we measure, as you said, the progress that each chunk of reasoning makes in a long reasoning thread, in a long chain of thought? How much does each chunk of reasoning contribute to the accuracy of our final answer? And it might seem weird; like, how do you even measure that? In practice, there are a couple of different ways. The most straightforward is that they look at what happens after a given chunk of reasoning. By the way, in reinforcement learning terminology, they refer to these chunks of reasoning within the chain of thought as episodes. An episode in RL parlance is like one play of a game or something; here, the episodes are essentially chunks of reasoning. What they're going to do is, after episode number, say, five, instead of letting the model move on to episode six, in other words try another chunk of reasoning, they'll sometimes just force it to give an answer directly from episode number five. And they'll do that like 20 times and get 20 different answers.

Let's say that 20% of those answers that jump straight from episode five are correct. Then you let the model go on to episode six and repeat the process, having it generate 20 answers straight from episode six. Let's say those 20 answers are, I don't know, 60% correct.

Well, now it's like, damn, episode six really made a difference, so it was worth it to keep reasoning up to that point. But maybe at episode seven, once you repeat it there, it plateaus. That gives you a sense that, hey, we're not actually making more progress here as we add more of these episodes, as we do more in-context reasoning, or, yeah, I guess chain of thought.
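The 20%, then 60%, then plateau example translates directly into a per-episode reward. Here's a minimal Python sketch of the idea as described in this conversation, not the paper's exact objective. `force_answer` is a hypothetical hook that forces the model to answer right after a given episode and reports whether the i-th forced answer was correct, and `min_gain` is an arbitrary illustrative threshold for deciding when to stop.

```python
from typing import Callable, List


def success_rate(force_answer: Callable[[int, int], bool], episode: int, k: int = 20) -> float:
    """Fraction of k forced answers (taken right after `episode`) that are correct."""
    return sum(force_answer(episode, i) for i in range(k)) / k


def progress_rewards(rates: List[float]) -> List[float]:
    """Dense reward for each new episode: the change in estimated success rate it produced."""
    return [after - before for before, after in zip(rates, rates[1:])]


def stop_episode(rates: List[float], min_gain: float = 0.05) -> int:
    """Index (into `rates`) of the last episode worth running: stop as soon as
    the next episode would add less than `min_gain` to the success rate."""
    for j, gain in enumerate(progress_rewards(rates)):
        if gain < min_gain:
            return j
    return len(rates) - 1


if __name__ == "__main__":
    # Hypothetical outcomes: 4/20 correct after episode 5, 12/20 after episodes 6 and 7.
    correct_counts = {5: 4, 6: 12, 7: 12}
    force_answer = lambda episode, i: i < correct_counts[episode]
    rates = [success_rate(force_answer, ep) for ep in (5, 6, 7)]
    print(rates, progress_rewards(rates), stop_episode(rates))
```

With success rates of 0.2, 0.6, and 0.6 after episodes five, six, and seven, the per-episode rewards come out to roughly 0.4 and 0.0, and `stop_episode` returns index 1, meaning episode six was the last one worth running. Summing the per-episode rewards telescopes back to the total change from the first estimate to the last, which is one way to see how a dense reward relates to a single end-of-trace score.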

And so maybe it's worth cutting our losses, right? So this is how they instantiate this in practice. They're going to train two different models to take advantage of that information. One of them they'll train through supervised fine-tuning. This one will involve basically just generating a whole bunch of reasoning traces for a whole bunch of problems and segmenting those reasoning traces into episodes, right? These logical thought segments.
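How exactly traces get split into "logical thought segments" isn't spelled out here, so this Python sketch uses a stand-in heuristic, splitting on blank lines, purely to make the idea concrete. `segment_into_episodes` and `episode_prefixes` are hypothetical helpers; the prefixes are the points from which a model could be forced to answer after each episode.

```python
import re
from typing import List


def segment_into_episodes(trace: str) -> List[str]:
    """Split a reasoning trace into 'episodes' at blank lines.
    (Blank-line splitting is an illustrative heuristic, not the paper's rule.)"""
    chunks = re.split(r"\n\s*\n", trace.strip())
    return [c.strip() for c in chunks if c.strip()]


def episode_prefixes(episodes: List[str]) -> List[str]:
    """Reasoning context after each episode: episodes 1..j joined back together."""
    return ["\n\n".join(episodes[: j + 1]) for j in range(len(episodes))]


if __name__ == "__main__":
    trace = "Let x be 3.\n\nThen 2x = 6.\n  \nSo the answer is 6."
    for j, prefix in enumerate(episode_prefixes(segment_into_episodes(trace)), start=1):
        print(f"--- context after episode {j} ---\n{prefix}")
```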