#197 - free AI in gmail, MiniMax-01, Titans, Transformer^2

Last Week in AI

Get around and lend your ear to the news of AI. Loud and clear. Run inbox magic. It's now for free. Drones in the sky. New realities. We've got Titan Transformers breaking bounds.

Hello and welcome to the Last Week in AI podcast, where you can hear us chat about what's going on with AI. As usual, in this episode we will summarize and discuss some of last week's most interesting AI news. You can also check out our Last Week in AI newsletter at lastweekin.ai for stuff we are not going to cover in this episode, and look in the description of this episode for links to the stories we will cover.

I am Andrey Kurenkov, one of your usual hosts. I studied AI in grad school and now work at a generative AI startup.

Not as usual, we do not have Jeremy. He's off doing some policy business, maybe having to do with the new administration. I don't know. But we do have some great co-hosts from Latent Space, the number one AI engineer podcast and a community reaching over 2 million technical readers. They do a lot of interviews with very cool AI people, so I think they'll be great co-hosts, and I'll just let them introduce themselves.

I'm Alessio. Yeah, I co-host Latent Space with Shawn. I run a venture capital firm called Decibel, where we invest in early-stage technical founders. And I'm a pretty active open source and AI engineer, so there are a lot of the projects I work on on my GitHub and Twitter. Yeah, even though Alessio is a VC, I was very surprised to hear that he was at the Vercel hackathon last night.

And I was not. This is a complete role reversal. I was cooking last night, I can tell you that. Yeah, so this is swyx. Hi, I am also co-host of Latent Space. I also run the AI Engineer Summit that's coming up in New York in February, which is going to be entirely focused on agents and AI engineering leadership. And I am also founder of Smol AI, and we run AI News, so we're kind of in the same space. I've been a listener for a long time, so I'm just really glad to be on while your A player is out of commission.

Yeah, it's always nice to have some new co-hosts that are also in the space. Maybe you can also give the listeners a bit more of an idea of what Latent Space is about and what they can expect if they do check out your podcast.

We try to serve the AI engineer in a broad sense. Latent Space is about the transition to the productization of AI by software engineers. I think a lot of the existing AI and ML media coverage, and even the attitudes and philosophy, is very focused on research. And especially with the movement that started with ChatGPT, a lot of things are now going into production, and the bar has been lowered a lot for basically guys like me to be able to build AI products. We do interviews; that's definitely a big part of our podcast.

And then we also do essays. We do conference coverage, so we cover NeurIPS, ICML, and ICLR and what the latest research is.

Alessio, did I miss anything? No, I think you covered most of it. And then we have a weekly paper club and a weekly AI in Action club on Friday. Yeah, so the Discord is active; we have about 5,500 people on it now. So if you're interested in research, Wednesday is your day. If you're interested in applied AI, Friday is your day. Yeah, go check it out. And we're trying to do more on YouTube, so check us out there and let us know if you like the formats and everything.

That's our 2025 plan. Yeah, there you go. And as our listeners will hear, you're obviously also very knowledgeable in the space of AI. You're also kind of living in it day to day, so to speak. So it'll be a fun episode.

Just to give a quick preview, as we always do: the episode is actually going to be a bit light this week. There aren't too many huge news stories. There's no huge news on the tools and apps side, and just some minor updates on the business side. The OpenAI drama is quieting down, so there are just some small updates there. Then there are primarily some pretty cool open source projects and research stories, similar to last week, and some more updates on the actions the Biden administration is taking before it closes out. So yeah, a bit of a light news week, and we'll probably be a bit shorter than usual, but it'll give us some time to chat.

And then one more thing: we do want to acknowledge any listener comments and corrections. We're seeing some more comments on YouTube, which is always nice. And we are having a bit of a chat on the new Discord; the link is still in the description. You can go to our very small, very new Last Week in AI Discord, which is kind of different from Latent Space's. It's still forming, so we'll see how it goes. But the idea is that I'll at least try to post some news stories and papers as we see them rather than as we cover them on the podcast, and then we can chat about them there. So feel free to check it out. It's a fun spot, and if nothing else, it's fun for me to see the sorts of people who listen to the podcast.

And before we get to the news, as usual, we do want to acknowledge our sponsor, which, as has been the case for a little while now, is The Generator, Babson College's interdisciplinary AI lab focused on entrepreneurial AI.

With Babson being the number one school for entrepreneurship in the U.S., there was this initiative where professors from all across Babson partnered with students to launch The Generator and organize it into various groups like entrepreneurship and business innovation, AI ethics and society, the future of work and talent, and so on. They are peer-training all of Babson, making sure the faculty there are up to date on AI concepts and AI tools, and are, I guess, guiding people in learning how to do entrepreneurship, innovation, and creativity with AI. So it's a very cool initiative, and I guess Babson as a college is going all in on AI.

And now onto the news, starting with tools and apps as usual. And to start with, we have kind of a duo of stories where neither is too huge, but I figured we can just pair them up. So first, Google is making AI in Gmail and Docs free, but raising the price of Workspace.

So there are these Workspace apps you can pay for as a business, like Gmail, Docs, and Sheets. And businesses have been able to pay for AI features: $20 per user per month for the Gemini Business plan.

So Google is now making that free, but raising the base cost of subscription from $12 to $14, which kind of makes you wonder, I guess, if people were really into this Gemini business plan.

And then just as that happened, Microsoft did something sort of similar. They have Copilot for business, and they did some rebranding: their free Copilot for business, which started out as Bing Chat Enterprise, is now Microsoft 365 Copilot Chat. They are also emphasizing the agent angle here and, again, trying to encourage people to sign up for the paid Microsoft 365 Copilot. So yeah, a real duo of stories there, with Google and Microsoft both reworking the business offerings they want people to pay for.

And yeah, I do often wonder how many people find these things useful. The AI tools in Google's suite do email summaries, some automated meeting notes, and some writing tools; nothing mind-blowing. So it's hard to say how excited business people are about these subscriptions.

Yeah, we have a group at the firm that we call the AI Center of Excellence, which is about 200 Fortune 500 execs and AI buyers. And most people will tell you that copilots are a scam, because people are paying, you know, 12 bucks a month for Workspace, and then they're being asked for 20 bucks more for the AI stuff, and the math doesn't work out. What you're basically seeing is AI washing of the P&L. What these companies are doing is saying, hey, look, you're already paying me $12, I'll just charge you $14. But internally, instead of $2 going to AI, they'll count $6 as going to AI and $8 as going to the previous product. So the reporting on AI revenue, and on the ROI of the investments these companies are making, looks better. A lot of it is financial engineering.

Some of the, you know, maybe more midsize 10 to 30 billion market cap companies that I talk with, all of them started with their AI stuff for free. They're not trying to charge for AI separately because they don't really have a lot of leverage.

In Microsoft's case, people are not moving out of... If you're a Microsoft shop and you're using Teams, you're using SharePoint, you're using the stuff, you're not going to move out. But it doesn't mean that you're going to pay for AI. But obviously, Microsoft is under a lot of scrutiny to show that all this money they're pouring into AI and data centers is kind of getting ROIC. So you'll see more of this AI bundling and pricing.

I'm a little bit more positive on this, I guess. You know, we always wanted this vision of AI that is too cheap to meter, and having a really good free tier just makes AI sort of everywhere. I definitely agree that AI washing is going on, but this is the future you want: AI is free and everywhere in small ways, and then hopefully it steps up over time. One thing: I was just looking at their Copilot Chat intro video and noticed that the UI doesn't say what model they use. It doesn't say OpenAI anywhere. That raises the question of how much Microsoft wants to collaborate with OpenAI in the future. It's a little bit unknown, but it's interesting that Microsoft used to have a very, very close relationship with OpenAI and now maybe less so.

Right. Yeah, I kind of agree that it's a bit of both. As a user of Gmail and Google Sheets, I do look forward to a future where some of the boring stuff I do in spreadsheets is automated and I can just ask Gemini to do it.

And as you said, I think this is a real advantage for Google and Microsoft: they already have people using their suite of products, and they can just encourage them to upgrade and pay. They are seemingly iterating on how to get people to do that, with Microsoft's latest rebranding: the free version is Copilot Chat, and the paid version is just Copilot, with the various things you pay $30 a month for. Then they also have these pay-as-you-go agents, which do research for you on the web and in their knowledge graph. So they're definitely expanding their product suite aggressively and trying to get people to buy into it.

Okay, and next story, we have Google signing a deal with AP to deliver up-to-date news through Gemini. AP is the Associated Press, which delivers a lot of breaking news. And now we are seeing yet another deal with a news organization. OpenAI already did a deal with AP back in 2023, and now Google has done the same thing, presumably paying to integrate that breaking news. We've talked a lot about this as a trend with OpenAI over the past year, and it seems we are now starting to see other companies follow in their footsteps.

We have this thesis on our podcast called the four wars of AI, and this is the data war that people are fighting. I don't know if this is the first example, but it's one of the earlier examples of a news provider not being exclusive: AP has done deals with both OpenAI and Google. I don't know if exclusivity was a deal point for OpenAI, whether or not they offered to pay extra for AP not to deal with anyone else. But clearly it's possible, because AP has done it. And clearly it's in the news organization's interest to strike a deal with every single LLM trainer on the planet.

Yeah, I mean, I really wonder how much they charge. Yeah, and I think the structure is changing now with ChatGPT search and things like that. Before, it was like, if you use my news to train your model, I don't really get anything out of it. Now that they're moving more toward products with source attribution, it's like, well, first I'm getting money for access, and then I'm getting clicks to my product. So Barron's is doing the same thing with the Wall Street Journal and some of these more financial-services outlets, where at the end of the day they also need new traffic. If Google, so to speak, is going away, and the social media algorithm that Elon has put in place downranks links all the time, you need to find some other way for people to come to your product. So yeah, these deals can get quite expensive, just based on what I've heard from people working in this space. Can you give an order of magnitude? At the early stage, early companies are paying about $750K to $1.5 million for some of this data. I'm sure OpenAI may be paying $50 million, $30 million, something like that, depending on the source and on some of the more fine-grained terms, for example whether you can also use the data for training. There are a lot of parameters that go into it.

Yeah, from having covered some of these stories, we didn't get any concrete numbers, but from various sources and examples of this with OpenAI, my impression was that it's easily in the millions, in some cases in the tens of millions, depending on how big the publisher is. So it's a very lucrative deal for the publishers. And I do recall, as we've been covering some of these developments, that it was noted that these are non-exclusive access deals. Perhaps that's also the case with others, and we'll get more stories like this.

And just broadly, I think part of the war is that there's this sort of fight-or-flight, partner-or-lawsuit decision going on. For the perspective of someone who made the other decision, partnering, you can always listen to The Atlantic: The Verge podcast did an interview with The Atlantic on why they made the licensing decision.

And I think it's really interesting to view it from their perspective: they actually don't see that much difference between suing the labs and doing a deal with them. It's a purely economic question of how to get the most money out of their content, which is very interesting. On the LLM lab side, I think it's kind of incriminating. I'm not a legal person or anything, but you can argue that AI training on data is fair use, and therefore you don't have to pay for any of it. By paying and licensing, though, you kind of admit guilt. So I don't think you can have it both ways. But obviously, I think this will be played out in court.

Yeah, and that's one thing we did note with all these OpenAI stories: they have put forward this fair use argument for training on all the publishers' data, while also signing all these deals, partly to train on new data and have breaking news, but seemingly also to get the back catalog. So yeah, as you say, we'll see how that plays into some of the lawsuits going on.

And on to the next story, which is actually very related: we have Mistral signing a deal with AFP, Agence France-Presse, to offer up-to-date news in Le Chat. Le Chat is basically their ChatGPT or Gemini; it's a chatbot. Very similarly, it will now be able to give you access to news stories as you chat with it. So it's another example of that data war, and almost a search war going on as well. I don't know if it's a war, but everyone is trying to get you to use their chatbot to find out about what's going on right now. And Mistral trying to compete in that chatbot space is pretty curious, because I don't know if a lot of people are using Le Chat, and I don't know if they'll be able to compete with ChatGPT and Gemini and so on, which are already so dominant. But it's interesting to see them try. Yeah, no comment there.

And moving away from those stories to something a little different, dealing with OpenAI: we have ChatGPT getting reminders and to-dos. They're adding a beta feature called Tasks in ChatGPT, which is what it sounds like. It can schedule future actions and reminders, similar to what Google Assistant or Siri can do.

It is available to paying Plus, Team, and Pro subscribers, and you can set up one-time or recurring tasks, like daily weather reports or reminders. So again, it's kind of surprising to me from a product perspective to see them doing this kind of thing. I always think of these chatbots as one-off things, as places you go to do research or your work, and here they're doing something very much like Siri and positioning ChatGPT as more of a personal assistant, which I don't think we've seen much of in their product direction so far.

Yeah, this is basically how you get batch completions in the ChatGPT interface. I think it's obviously great for them, because you can predict traffic. I'm sure they pre-compute a lot of these things, because most of the time it's not live data. And then, yeah, they've got cron jobs. If you're a developer, obviously you want to schedule things, but for the non-technical folks this is a big upgrade, just being able to have it do the same thing every time at a specific time. It's good. I don't know what the adoption is going to look like, because it's kind of limited, right? You don't have function calling. You don't have code interpreter, I think. So we'll see what the numbers look like. Yeah, but they can easily add those over time. So I think there's this dual story: depending on who you are, this is either the biggest launch of the whole week or a complete disappointment from OpenAI.

You set out to build AGI, and now you're building reminders? But I think it's just a starting point. They want to get somewhere with the agent stuff, and this is a core loop of what an agent needs to do: it needs to be asynchronous. LangChain also happened to release what they call ambient agents on the same day that OpenAI released Tasks. I think both of them are exploring spaces where you don't have to initiate the chat in order for the AI to do something. The general theme of ambient agents is something I would bet on, follow, and explore throughout 2025. I can see that ChatGPT Tasks is on that path; it's just a very, very small step on it.

Right. It is trying to compete with basically, I guess, what you get with the Google or Microsoft suite of products, where it's built into your productivity tools, into your email, etc. And I think this is a feature that many people foresee with AI being a sort of personal assistant that is ambiently there for you and is doing stuff for you all the time and not just when you are interacting with it.

So yeah, as you said, I think it's another example of trying to position ChatGPT for that and also to differentiate it. Because in my view, there's still not much of a distinction between ChatGPT and Claude and Gemini. One chatbot is not too different from another, so there's no stickiness there, and whoever is cheaper, faster, and better is probably what you're going to go to. I've personally been using Claude a lot more. So there is that product angle too: how do you get people to stick around and commit to you?

And moving on to applications and business, not too many exciting stories here. We start with something pretty different from our usual stories related to chatbots or LLMs, but I think it's good to highlight: Palmer Luckey's AI defense company Anduril is building a $1 billion plant in Ohio. Palmer Luckey, I guess, is highlighted here as the pretty prominent founder of this company. Previously, he did VR and started Oculus, which is now part of Meta. After that he started Anduril, a company building drones, really AI-enabled drones, for military applications. They have these Fury drones, Roadrunner drones, and Barracuda missiles, the idea being that these are AI-enabled, more advanced, and competing with, I guess you could say, legacy contractors and R&D shops for the military.

So clearly they're getting a lot of business, considering they're building a $1 billion factory named Arsenal-1. And I think it really highlights that we often don't talk about AI and its military implications. We haven't seen any sort of advanced AI so far be a major player in wars or ongoing battles, but I think it's safe to say that it is going to happen at some point, and certainly people are investing and moving in that direction. So it's an important thing to keep in mind: this whole push for AI investment and rapid improvement of AI is going to play into this aspect of technology as well. Yeah, it's definitely already happening. We do a lot in the cybersecurity space, and there are two types of warfare, right? There's the digital warfare, which is more about resource denial, and then there's the drones and things like that. But most of it today is pretty autonomous.

I think that's why we've talked about sovereign AI on the podcast before. The more warfare depends on technology, the more owning the infrastructure matters: where the data centers are located matters, and knowing what model is running matters. I think Anton from Chroma did this, like, fake protest, basically saying it's illegal to not build autonomous warfare systems, because you're putting human lives at risk; instead, you should just send the robots to fight for you. So I think you'll see more of this in the next few years, now that AI is in the zeitgeist. And I should actually highlight that for Anduril, it's not just military applications. One of their successful products is Sentry Tower, a surveillance system used, for example, along the US southern border, which integrates AI pattern recognition to, presumably, spot people crossing.

So that's another angle here as well, where AI certainly is already massively being used for surveillance, or in this case, I guess, detection of people. That is massively the case in China, from what I've heard. And this company is now offering that to some degree in the US as well.

And one more thing I'll mention: my impression is that Silicon Valley as a whole is warming up to the idea of working with the military and the defense sector. We've seen Anthropic and Meta both announce that their products can be used for military applications by the defense establishment, by the DOD. In the past, Silicon Valley software engineers were very resistant to that sort of thing. We saw protests at Google when its AI cloud division was working on some stuff for the military; I think it was Project Maven. That was met with a lot of outcry. My general vibe check on Silicon Valley is that people are becoming more open to it, if nothing else because it's presumably very lucrative. Yeah, I mean, these things are hard to talk about. There are always people who speak out and then people who think certain things, and I don't think those numbers really change; it just changes who feels like they can talk publicly about it. So I think a lot of people in the Bay have been pro-defense for a while. Now there's maybe a lot more VC interest, which makes people want to talk more about it, because they want to raise money to start a company in that space. So there's more of that at play. But yeah, I don't know. I mean, I'm becoming a US citizen in five days, so my view is also... Congrats. That's always a major milestone. Totally. So it's funny: I grew up in Italy, and people in Italy don't work really hard. My citizenship interview is at 7:45 AM at the immigration office, and I do not know of a single federal office in Italy that is even open at 7:45 AM, let alone running.

The US is a very different country. And next story, going back to publishers and news: OpenAI is apparently bankrolling Axios's expansion into new markets. Axios is a media company, and OpenAI is partnering with them to support their expansion into local newsletters for Pittsburgh, Kansas City, Boulder, and Huntsville. This is a three-year deal, and it's the first time OpenAI is directly funding the newsroom operations of a media company it has partnered with.

We've seen them engage in content-sharing and licensing deals, but this is the first time they are actually helping a publisher expand. I would imagine that's to become more closely aligned with these media companies. It's another interesting development in this whole story of what you'd call the data wars, and not one I would have expected: paying for media companies' initiatives.

And on to another type of business story, one we see every once in a while: a new startup from a famous AI person, focused on AGI. This time it's François Chollet, which I would guess is how it's pronounced. He has a new startup called Ndea, and the story is that they are going to shoot for AGI through, it seems like, program synthesis. It's co-founded with Mike Knoop, and they are already hiring. Chollet's background is having created Keras, a very popular package that a lot of people have used for coding deep learning models going back about a decade. He worked at Google for a while, and Keras was adopted by Google. And yeah, it's part of a trend where we've seen, obviously, people leaving OpenAI and doing AGI startups, and this is another example of that. I guess it's the feeling now that we're close to AGI and you can shoot for it, perhaps.

Yeah, François and Mike had been working on the ARC-AGI challenge before this. It's funny: when you run a challenge for AGI and then you see the o3 model score really, really well, I don't know if starting a lab means they think people are on the wrong path to get to 100% and they have better ideas, or if they also feel like we're going to get there in a reasonable timeline and want to run their own thing. But Mike and I actually play soccer together on Thursday nights, so I already messaged him about doing a podcast episode with us. We'll learn more when they talk about it publicly soon.

Yeah, makes sense. We did just recently cover o3 and the ARC-AGI challenge, and the rumblings or conversations people have been having about how maybe you could call o3 AGI, maybe we're already there. So I think people are very bullish on the agents. In this example, it's learning-guided program synthesis that they say will create AGI that can invent, adapt, and innovate. But in general, people are aiming to do this on a one- to two-year horizon, not super far out.

And speaking of VC-type things, we do have a couple of stories related to fundraising and valuations. First, we have Synthesia, an AI video platform focused on AI-generated content: videos with human avatars that you can use for, I don't know, marketing materials or internal videos, things like that. It has raised $180 million, and its valuation is now $2.1 billion. So it's not in the LLM space or the chatbot space, but we have seen Synthesia before; I think we previously covered them. And I do think this is one area where AI video will be pretty successful: synthesizing videos of people talking, or perhaps doing things, for commercials is much cheaper than hiring models to do that for you, and much easier to adapt to multiple languages and to quickly iterate on messaging. We've seen this done for politics, for example in South Korea; I think we covered that story. So clearly, based on this fundraising and the company's valuation of around $2 billion, the investors bankrolling this expect many businesses to go for it.

Yeah, I mean, I'm actually also very much thinking about having Latent Space have an AI-generated

We'll clearly market it as AI, because we want to make sure people know whether they're listening to us or to an AI. But I think this sort of AI creator space is going to keep growing. Synthesia pre-existed this current AI wave, and I think they do much broader things than just helping people in the creator economy; it's much more like support or sales or anything like that. But I think it will keep growing. HeyGen is the other one that's up and coming in this area. And I think we as creators, literally us in this room as podcasters, should also think about using it.

And another story, another startup valued at around $2 billion. This time it's the makers of Cursor. They raised $105 million in a Series B round from some of the major Silicon Valley VCs, Andreessen Horowitz, Thrive, and others.

Cursor is one of the major AI coding initiatives out there. It's an integrated development environment with a built-in coding assistant, similar to GitHub Copilot, which helps you by predicting what you'll write next. Cursor has a few more advanced features that set it apart. I'm personally a user, and a lot of people at my company are users. So it does seem positioned to perhaps lead in this very, very competitive space. There have been many startups betting on coding assistants, and a lot of fundraising for that; I think we saw YC have multiple startups in this space in a single batch. So it's very competitive, and right now this company seems to be a major competitor and potentially the one to come out on top.

Yeah, I feel like they really crossed the moment when they got the Lex Friedman interview. I think first time Lex has interviewed four people and also first time Lex has, I think, interviewed an early stage startup that was pretty notable. Yeah, I think definitely, you know, I've been a doctor at Cursor. We're also pretty close friends with Codium, which launched Windsurf.

And yeah, it was a bit of a meme last year of people forking VS Code and trying to compete. But I think Cursor with their really good execution and they were basically first. Like we actually had them on the podcast. I think we were the first podcast that they ever did. And seeing them execute and then also they came and spoke at my conference last year. Like,

very sort of heads down determined and has a, with a very strong point of view as to like how people should be coding. Like they didn't promise, you know, super AGI agents or anything like that. They just said like, we will make a better idea for you with AI in it. And I think typically what people usually say is that, you know, challengers like this would force the incumbent VS code to do something. And, um,

VS Code and GitHub have done something. They just are usually copying Cursor. And I don't know if that's like the strategy. Yeah. And Cursor is now over 100 million of revenue. So I know there's a lot of AI is over, blah, blah, blah.

$100 million in revenue is pretty good. Yeah, there aren't too many startups that can claim to actually be making money, I think, and Cursor is one of them. I mean, I would pay three times as much for Cursor. Like, I use Composer every day. It's nuts, you know? Like Sean said, I was at the Vercel NVIDIA hackathon last night. I used v0 for the whole thing.

I think v0 is closer to some of these other products. It's really nice when it's self-contained and you see the preview, but I think Composer is just as good on the coding side, and you're still within your environment, which has a lot of advantages. I'm curious to see where things go from here. And then Copilot has kind of been left behind a little, at least in the zeitgeist. They're obviously making a lot of progress on the product, but it's a competitive space. It's hard to get developer mindshare. And when you only have the IDE form factor, it's easy for people to switch around. I can open the same repo in VS Code and Cursor at the same time. It's the same thing. So yeah, I'm sure the war will get even more intense this year. I'm curious if there is room for multiple players here, or if Cursor is just going to eat everything.

I've also said that OpenAI should acquire Cursor because at this point they are a really good source of coding data, and I think everyone needs one. My thesis has basically died, because now it's pretty clear that Cursor is rumored to be Anthropic's biggest customer. Something like that. They definitely ran into limits with Anthropic. Multiple startups claim this, so I don't know how true that is or what the exact positioning is. But yeah, we're in sort of the applications layer of the podcast. Applications typically want to be multi-model, and the LLM labs all want to be vertical, right? They want to build their ChatGPT. They want to build their tasks and reminders and all that. I think it'll just be a fight back and forth between applications and foundation model labs.

And on to the last fundraising story: Harvey, a company offering AI for legal work to legal companies. They are reportedly trying to raise $300 million at a $3 billion valuation. They are said to have quadrupled their revenue; they were at something like $30 million as of their last round, the Series C in July. So again, this is another example of a more domain-specific company aiming to dominate a specific space, in this case AI for legal applications, where it's obviously very important not to hallucinate and to give accurate information when you're doing, for instance, research for a legal case. Harvey was one of the early players in that space and seemingly is already getting a lot of revenue. We saw them make deals with major law firms, and we covered that a while ago. So this is another one that seems likely to stick around, in my view.

Yeah, one thing that's kind of interesting. We did mention Cursor's valuation last time. It wasn't published anywhere except in this New York Times article I found: Cursor is valued at $2.5 billion with $100 million ARR. That's interesting next to Harvey, which is rumored to have $50 million ARR now and is valued more. So they have half the revenue and are valued higher than Cursor. Is this because the legal space is super hot, or because investors are very desperate to get into this thing? What's going on? Yeah, it's hard to say. I think some of the perception is that Cursor is doing very well. And going back to your point about whether there's going to be one winner, there's maybe a question of whether all these companies can survive. Everybody's obviously getting a ton of traction early on.

But yeah, Harvey's expanding. I think most of this has been made public, so I'm not leaking anything, but Harvey's broadening from just legal to all professional services. The same thing is happening with Hebbia, which started out focused on financial services and is now moving toward all kinds of service businesses and knowledge work. They have a bunch of overlapping investors, so I know some of the investors are a little nervous about the collision course they're on.

But yeah, theoretically the market is bigger if you do all professional services work. In practice, it seems hard to build a company that serves every professional services market. So I don't know. But a16z was already an investor in Cursor, so maybe they have to stick with it. And you always have to do the math on how much dilution the founders are actually taking, so it's not always just about the valuation. I wasn't...

Yeah. I'm also curious where the money's going. Do you need $300 million to build GPT wrappers? I don't know. They all claim to have their own models, but also, let's be real, most of the traffic is actually going to the Claudes and the GPTs. But obviously, good for them. I'm not criticizing them at all. It's just that there's supposed to be this thesis that you raise once and you're done, or that you're a profitable unicorn with a tiny team. You know, that's what Gumloop is touting. But both Cursor and Harvey are following the traditional path. I'm sure they haven't touched the money from their previous round; they all say this. So the money is just sitting in the bank, and what is it for? Sales and marketing? I don't know. That's certainly part of it, I think. Yeah.

Yeah. I don't know how many people Cursor has. Looking on LinkedIn, Harvey has 74 employees. Wow. Cursor doesn't even have a LinkedIn organization, but you can search Anysphere. I've been to their office. It's 32 people. But that page also lists a lot of the investors, and Anysphere is kind of a common name for random tech things in the world. So,

yeah, it's definitely a much smaller team. I'm sure Cursor is mostly spending it on compute. Right. And that's the other thing about this ARR: a lot of it is passed through to the model labs. So what is the margin? Yeah, exactly. I'm curious to know if they are actually profitable, because there's also a price war aspect here. Certainly for LLM providers, for chatbot providers, there has been a movement toward lower dollar-per-token prices, aggressively lowering them. And in these competitive spaces, especially for coding, you would expect a similar dynamic: you're not going to go above $20 per month, because that's the pattern GitHub Copilot established early on. And if you try to go above that, then... And it's going to be very expensive to run something like Cursor, which is doing ambient completions for you. As you code, it's offering suggestions all the time. It also looks ahead to suggest edits beyond where you currently are. It's able to do inference over multiple files, answer long questions, and so on.

Yeah, you've got to wonder if one $20-per-month subscription is actually going to pay for what a typical programmer who uses Cursor every day is querying.
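Whether a flat $20 a month covers a heavy user comes down to simple arithmetic. Below is a back-of-the-envelope calculator as a sketch; every number in the usage line (requests per day, tokens per request, price per million tokens, working days) is a hypothetical placeholder, not Cursor's or any provider's actual figure.

```python
def monthly_inference_cost(requests_per_day: int, tokens_per_request: int,
                           price_per_million_tokens: float, working_days: int = 22) -> float:
    """Estimate one user's monthly model bill in dollars.

    All inputs are caller-supplied assumptions; nothing here reflects real pricing.
    """
    tokens_per_month = requests_per_day * tokens_per_request * working_days
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def covers_subscription(cost: float, subscription: float = 20.0) -> bool:
    """True if a flat subscription price covers the estimated inference cost."""
    return subscription >= cost


# Hypothetical heavy user: 1,000 completions a day, 2,000 tokens each, $3 per million tokens.
cost = monthly_inference_cost(1_000, 2_000, 3.0)
print(cost, covers_subscription(cost))  # prints: 132.0 False
```

With these made-up inputs the flat fee would not cover the bill; the point is only that always-on, ambient completions multiply request counts quickly.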

And moving on to projects and open source, we've got a couple of fun stories here, starting with MiniMax-01, which is all about scaling foundation models. They have MiniMax-Text-01 and MiniMax-VL-01 (vision-language). The big story is that they're meant to handle longer context. They use lightning attention and mixture of experts to get a total of 456 billion parameters, with 45.9 billion active per token, which is pretty big for this class of models. And they're saying these can handle context windows of up to 1 million tokens during training, and even up to 4 million tokens during inference.
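The mixture-of-experts idea behind the 456-billion-total, 45.9-billion-active split is that a router sends each token to only a few experts, so per-token compute tracks the active parameters (roughly 10% here) rather than the total. Below is a minimal top-k routing sketch in plain Python; the toy scalar experts and router scores are hypothetical, and k=2 is an illustrative choice rather than a claim about MiniMax's exact configuration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_logits, experts, k=2):
    """Route one token to its top-k experts and mix their outputs.

    x: the token's input (a float here, a vector in a real model)
    router_logits: one score per expert (produced by a learned router in practice)
    experts: list of callables; only the k selected ones are evaluated
    """
    probs = softmax(router_logits)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    weight_sum = sum(probs[i] for i in top)
    # Renormalize gate weights over the chosen experts, then combine their outputs.
    output = sum(probs[i] / weight_sum * experts[i](x) for i in top)
    return output, top


# 32 toy experts: expert i just multiplies its input by i.
experts = [lambda x, scale=i: scale * x for i in range(32)]
logits = [0.0] * 32
logits[5], logits[7] = 4.0, 4.0  # the router strongly prefers experts 5 and 7
out, chosen = moe_forward(1.0, logits, experts)
print(chosen, out)  # [5, 7] 6.0
```

Only two of the 32 expert functions run for this token, which is the whole efficiency argument; the cost the hosts mention next is that all 32 still have to sit in memory to be served.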

This has been one of the big stories since ChatGPT came out. It used to be that context windows were around 4,000 or 8,000 tokens by default. The context window is how long an input you can handle, right? So can you handle a book's worth of content? Can you handle multiple documents?
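Why a different attention mechanism helps with million-token contexts: standard softmax attention compares every token with every earlier token, so cost grows quadratically with length. Lightning attention, which MiniMax-01 uses, is an efficient implementation of linear attention, where a fixed-size running state replaces the growing score matrix. The NumPy sketch below shows plain causal linear attention, with the common elu(x)+1 feature map and a normalizing denominator (both our assumptions for illustration). It is not MiniMax's kernel, which, as we understand the report, also tiles the computation into blocks and interleaves some softmax-attention layers.

```python
import numpy as np


def feature_map(x):
    """elu(x) + 1: keeps features positive so the normalizer stays > 0."""
    return np.where(x > 0, x + 1.0, np.exp(x))


def causal_linear_attention(q, k, v):
    """O(n) causal linear attention using a running (d x d_v) state.

    q, k: (n, d) arrays, already passed through feature_map
    v: (n, d_v) array
    """
    n, d = q.shape
    state = np.zeros((d, v.shape[1]))  # running sum of outer(k_i, v_i)
    normalizer = np.zeros(d)           # running sum of k_i
    out = np.empty((n, v.shape[1]))
    for t in range(n):
        state += np.outer(k[t], v[t])
        normalizer += k[t]
        out[t] = (q[t] @ state) / (q[t] @ normalizer)
    return out


def quadratic_reference(q, k, v):
    """Same result computed the O(n^2) way, with an explicit causal score matrix."""
    scores = np.tril(q @ k.T)
    return (scores @ v) / scores.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
q = feature_map(rng.standard_normal((6, 4)))
k = feature_map(rng.standard_normal((6, 4)))
v = rng.standard_normal((6, 3))
print(np.allclose(causal_linear_attention(q, k, v), quadratic_reference(q, k, v)))  # True
```

The state has the same size no matter how many tokens came before, which is what makes very long contexts affordable; the trade-off is that it is a compressed summary rather than exact softmax attention over every past token.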

So this is an example where by using a newer form of attention, which is typically one of the major tricks for this kind of long context, you are able to scale. MiniMax says they offer context windows that are 20 to 30 times longer than other open models, and these are publicly available. So yeah, it's another open source model that is competitive with top-of-the-line models like GPT-4o and Claude 3.5 Sonnet. Yeah, very big. I would say the meta narrative I'm interested in is just the rise of this lab called Hailuo. It wasn't really something that was announced last year. They just quietly started producing video models and now text models. Who are they? What are they? Do you know? I can't say that I've done a deep dive, but we've seen more and more announcements of this kind, primarily from Chinese organizations, and I would guess this is Chinese. Yeah, it is.

And they're playing in the open source space. So I guess that's another interesting trend: we recently covered Qwen's QVQ as well. These are now major players among models that are often competitive with Llama and other things that have been out there. Also DeepSeek, stuff like that. Yeah, that's true. I would say, I mean, the other thing is that there's definitely a trend toward more and more MoEs. So 32 experts: the standard used to be 8, now it's 32, and I think it's gone up to 150, 160 before. There seems to be a consensus that it's more efficient, with sparse inference. But having that many parameters also means it's really hard to serve. I think this is also very true for DeepSeek V3. So mostly these are just training models that you can distill into smaller ones that you actually use. It's just interesting that they chose to launch their text models like this. Usually it's the other way. Remember Mistral launched their 8x7B and then went to 8x22B. Here the Chinese are doing the opposite; they're just going big. Yeah, and on the benchmark side, obviously, they're showing that they're doing very well on long context, kind of competitive-ish with Claude and GPT-4 and much better than other open source models out there. Another interesting aspect is that they released a report on arXiv with a lot of details on the training, the architecture, et cetera, similar to Llama and other models whose reports lay out all the nitty-gritty of training models, optimizing them, and dealing with long context windows.

So another interesting aspect, in addition to there being pretty competitive open source offerings for various LLM tasks and now also vision-language tasks, is that as far as I know there's no secret formula for having a good LLM. You need good data and the infrastructure, and the details of how you train and set up your model are not much of a secret at all. And on to the next model, this one called MinMo, covering another area that is less common in open source. This is a multimodal large language model that is focused more on voice integration and audio. Again, this is coming from China, from Tongyi Lab at Alibaba. It's an 8 billion parameter model focusing on voice comprehension and generation, so it deals with speech recognition (speech to text) and text to speech.

Again, we've mentioned recently how vision-language is an area that hasn't seen as many open source models, and they're starting to come out. Now we have pretty impressive models for speech too. For example, on speech to text it's apparently competitive with Whisper Large v3. It's getting 85% accuracy in language identification and 98% accuracy in dialect and other tasks. So that's pretty impressive, and again, it's interesting that you're seeing more and more models of this sort being developed.

In this case, they're saying that the code and models will come soon. So far, we just have the paper. And again, this is another example of something we've seen before, where previews and papers come out before a full launch. We're not seeing the model or code yet, but we do see the numbers and the paper. So let's see if they follow through.

And on to the very last story here, which is a benchmark. That's another space where many things are happening all the time, with many new benchmarks for different sides of the AI inference game, I guess you could say. In this case, it's about hallucination. The paper has a fun title: Fantastic LLM Hallucinations and Where to Find Them.

That's referring to the Fantastic Beasts movies from the Harry Potter universe. Anyway, this one is focused on benchmarking LLMs for how much they hallucinate: how often do they say things that are essentially false? It has almost 11,000 prompts, plus automatic verifiers that check how often the LLM outputs contain reliable knowledge and facts.
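To make "automatic verifiers" concrete: one domain a benchmark like this can cover is code, where a verifier checks whether every package the model imports actually exists. We believe the benchmark's code domain works roughly along these lines, but the sketch below is our own simplified version: the `known` set stands in for a real package index, `fastjsonmagic` is a made-up package name, and scoring a response by the fraction of imports that fail the check is an assumption.

```python
import ast


def imported_packages(code: str) -> set:
    """Return the top-level package names imported by a Python snippet."""
    names = set()
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Skip relative imports such as "from . import utils".
            names.add(node.module.split(".")[0])
    return names


def package_hallucination_rate(code: str, known_packages: set) -> float:
    """Fraction of imported packages that are absent from the known-package set."""
    packages = imported_packages(code)
    if not packages:
        return 0.0
    return len(packages - known_packages) / len(packages)


generated = """
import numpy as np
import fastjsonmagic
from os import path
"""
known = {"numpy", "os", "requests"}  # stand-in for a real package index
print(sorted(imported_packages(generated)))          # ['fastjsonmagic', 'numpy', 'os']
print(package_hallucination_rate(generated, known))  # 0.3333333333333333
```

Checks like this are cheap and fully automatic, which is what lets a benchmark score thousands of prompts; factual domains such as historical events need heavier verifiers.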

It's interesting: they show that even top-performing models can have hallucination rates as high as 86% in some domains. My personal experience and impression is that hallucination is increasingly not a real problem. When I do some sort of coding work, or when I just want to find out about some topic, I'm not super worried about hallucinations, like importing packages that don't exist or calling some function that isn't there. But at least in this paper, they are saying there are still some pretty major hallucinations on things like coding, text simplification, historical events, and so on.

Yeah, I kind of disagree on the "hallucination is not a real problem" thing. I think it differs by use case. In the code generation examples I see, LLMs still generate a lot of code calling APIs that don't exist, just because they think those APIs should exist.

And also, a very simple example: for a long time, when you tried to write OpenAI code with Cursor, it would refer to the old completions API and not the new chat completions API. And obviously, it wouldn't know anything about the beta APIs. So that was very annoying.

But also for large report generation, like the Gemini Deep Researches of the world, or a company called BrightWave that generates reports from a lot of data sources. And I run AI News, which also generates a report from a lot of sources that gets sent out every day. Every single day there are hallucinations. It doesn't understand which sources are tied to what, so sometimes it will confuse things.

And sometimes it will not fact-check something that is obviously wrong. Even little things, like, let's say, Eric Schmidt running Meta. I'm like, no, that's wrong, but the model doesn't know that. I get reports from my readers every single time this happens, and I'm like, yeah, sorry, man, I'm trying, but the best I can do is have another agent check the agent. Yeah. And to be fair, I say I'm not too worried about it, but I would be hesitant to make a claim or cite an LLM for something factual. If you're talking about some historical detail, I could easily see that being fabricated. So yeah.

Yeah, that's why I thought that something like Perplexity would take a lot longer to become a thing. But really, people don't mind as long as you have citations. If they see something and click through to the source, they're like, ah, the LLM made a mistake, isn't that funny? And then they move on with their day. It's not that big of a deal as long as you provide sources.

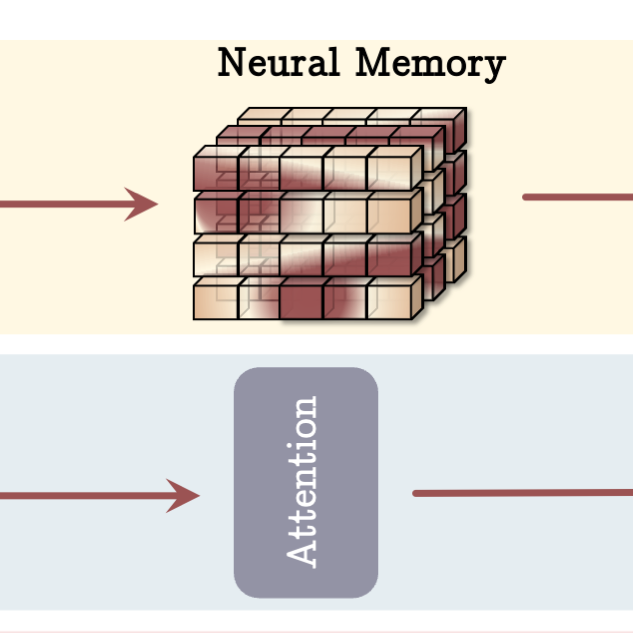

And on to research and advancements. We have at least one paper that generated a lot of excitement in the AI space on Twitter, the X-verse, and so on. The title is Titans: Learning to Memorize at Test Time, and it comes from Google. The gist is that LLMs, as we usually use them, are frozen after training. You train them, they have some set of knowledge up until a certain date, and that's the knowledge cutoff. There's been a lot of work in research on how to get beyond that: how to update the models as you use them so that they keep learning. One example is TTT, which we covered back in mid-2024. Well, now there's a new variant that people are pretty excited about, called Titans. The idea is they add a neural long-term memory, and they have a few ways to integrate it. At a high level, what they propose is a novelty-based loss (the paper calls it surprise) for deciding what to put into your long-term memory based on how novel it is.

They also propose a specific way to do forgetting. They have this decay mechanism where, over time, you can let go of information that's no longer relevant.

They also look into how to integrate memory into your architecture, and there are different ways to do it. One variant is called memory as context, where your model has multiple parts. You have persistent memory, which is like your typical LLM in that its knowledge is there forever.

You have your long-term memory, the neural memory, which gets updated as you go. Memory as context means appending memory to your input.

So you take some input sequence, add some information from your persistent memory, and also add some knowledge from your long-term memory, retrieved based on the input. Then you do attention over all of that. And as part of producing your output, you update the neural memory with this novelty metric.
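The memory-as-context flow can be sketched in a few lines: read from the long-term memory using the current segment, prepend the persistent tokens and the retrieved memory, and run attention over the combined sequence. This is a simplified NumPy illustration under our own assumptions (a linear memory matrix, no learned query/key/value projections, no causal mask, a single head); it is not the Titans reference code.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def memory_as_context(segment, persistent, memory):
    """One memory-as-context step.

    segment: (n, d) current input tokens
    persistent: (p, d) fixed, input-independent tokens learned during training
    memory: (d, d) long-term memory matrix, queried with the segment
    """
    retrieved = segment @ memory.T  # memory read for each token (row-wise M @ x)
    context = np.concatenate([persistent, retrieved, segment], axis=0)
    scores = segment @ context.T / np.sqrt(segment.shape[1])
    return softmax(scores, axis=-1) @ context  # (n, d) attended output


rng = np.random.default_rng(0)
d = 8
out = memory_as_context(rng.standard_normal((4, d)),
                        rng.standard_normal((2, d)),
                        0.1 * rng.standard_normal((d, d)))
print(out.shape)  # (4, 8)
```

As we read the paper, the attention output is then also used to update the memory and is combined with a second memory read to form the final output; those steps are omitted here.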

Another option is memory as gate, where you add memory into your attention calculation. You take your input, and as you calculate the typical attention over the input, you also take into account the long-term memory. And the third variant is memory as a layer, which I think is closer to what you traditionally do with recurrent models: you pass the input through your memory, update the memory, and have the middle layers of the model, in effect, be the memory layer.
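Under all three variants sits the same test-time learning rule. As we understand the paper, the memory is trained online to map keys to values; the gradient of that reconstruction loss acts as the surprise (novelty) signal, a momentum term carries surprise across nearby tokens, and a decay factor implements forgetting. The sketch below uses a single linear memory matrix and fixed hyperparameters (`lr`, `momentum`, `forget`) chosen for illustration; in the paper the memory can be a deeper network and these rates are data-dependent.

```python
import numpy as np


class LinearNeuralMemory:
    """Toy test-time memory: surprise-driven updates with momentum and decay."""

    def __init__(self, dim, lr=0.1, momentum=0.9, forget=0.01):
        self.M = np.zeros((dim, dim))  # memory parameters, updated at test time
        self.S = np.zeros((dim, dim))  # momentum of past surprise
        self.lr, self.momentum, self.forget = lr, momentum, forget

    def update(self, key, value):
        """Write one (key, value) pair; returns the loss before the update."""
        error = self.M @ key - value
        grad = 2.0 * np.outer(error, key)  # gradient of ||M key - value||^2 w.r.t. M
        self.S = self.momentum * self.S - self.lr * grad
        self.M = (1.0 - self.forget) * self.M + self.S  # decay old memory, add new
        return float(error @ error)

    def read(self, query):
        return self.M @ query


mem = LinearNeuralMemory(dim=4)
k1, v1 = np.eye(4)[0], np.array([1.0, 0.0, 0.0, 0.0])
losses = [mem.update(k1, v1) for _ in range(200)]
print(losses[0], losses[-1] < 1e-3)             # 1.0 True
print(mem.update(np.eye(4)[1], np.eye(4)[1]))  # 1.0: a never-seen pair is maximally surprising
```

A repeated input stops being surprising, so it barely changes the memory, while a new one produces a large gradient and gets written in; the `forget` term keeps old entries from accumulating forever.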

So anyway, they have this test-time memory where you actually update the model based on the novelty of the inputs. And as you might expect, they show that in comparison to various architectures, like Transformer++, Mamba, DeltaNet, and TTT, they outperform all of them by a decent margin. This is one of the problems that's unsolved for LLMs, right? If you have an agent deployed out there, or an assistant that you're using, the fact that it can't remember things long-term, things it has seen or that you've chatted about, is a real hindrance. I think there's a real argument that AGI is not possible if you're not learning as you go. So there's been more and more research on this, and this builds on a lot of it. They have detailed comparisons to things like Mamba and TTT. And people were pretty excited because there's a lot of detail in this paper, and the numbers on various benchmarks, of course, look very solid. I think that was a really good summary.

I think the only thing I haven't understood about these things is how they're supposed to work with multi-tenancy. If I'm deploying a model to serve, does this only work if you have one customer per model, basically? You'd need to replicate. Which seems interesting, but it's also kind of hard to do in practice. Yeah, it's hard because you do need to update the weights of the model, at least some of them. That's one of the aspects here: part of the model, the neural memory, is being updated, and other parts are frozen. So in terms of actually deploying it, you would need, per person, a model, giving each chatbot its own memory. So far, what people do is more retrieval. You store away some information; that's how ChatGPT memory works. You store some stuff and it gets added to the input. Here you're actually updating the weights at test time, and that's much harder. Yeah.

I don't think this is as big of an issue as you fear, because we already have prompt caching, right? It's already caching per person. It's a form of memory. It's not as advanced as this one, but it's a form of memory. Infrastructure-wise, it's not an issue. I don't know if that's necessarily true, because if you put my memory in a model, then for a net-new response, you cannot serve that model to anybody else.

Yeah, it's basically caching. You can load the memory module back in whenever you activate your endpoint again. I think the infrastructure side is a big aspect of this. There is a difference from prompt caching and from retrieval in general: it's one thing to append some extra stuff to the input of the model, but here you have a customized model, with different weights for each user.

I guess you'd have millions of variants of your model that you need to store and load onto your GPUs, which is presumably not quite the same. So again, as with any of these papers on alternative architectures: we've talked about Mamba a lot, and that addresses a similar problem, keeping memory over a long time.

Not clear when we'll see some of these things actually have an impact on things that are out there in the real world, but always exciting to see developments in the core architecture of how we build these large language models.

And what they do say is that you're able to scale to context window sizes larger than 2 million tokens and maintain high accuracy in things like needle-in-a-haystack scenarios. That's a real surprise for me personally, that we've been able to scale context so well without using some sort of memory like this. Though it's worth remembering that needle in a haystack is just the most basic version of what memory utilization looks like.

And on to the next paper, another interesting development from Sakana AI. We've covered a few of their papers; they're putting out a lot of neat things. In this case, it's Transformer²: Self-Adaptive LLMs. This is about adapting to tasks in real time by selecting, I guess, the best variant of a model. They have essentially different expert models that deal with things like math or coding specifically. Given your input, they have a few different ways to decide what type of task it is, you could say. Then they take the right set of weights to use and put your input through the model a second time, and that gets you better performance. So this is another example, in a way, of adaptation over time. Although in this case, I was a little disappointed that they do need to pre-train different specific variants of the model, what they call task-specific expert vectors, which are then mixed into the weights.

So it's not quite as dynamic as something like the memory in Titans; you have just a few variants and you pick between them. They do say this outperforms things like LoRA and is more efficient, with fewer parameters. And LoRA is one of the very important techniques out there for taking a base model and customizing it to a certain task, so in that sense, this is pretty cool. There are some pretty nitty-gritty details involving SVD and so on. But anyway, it's another example of research on adapting LLMs to a specific input. It's coming out of Sakana, so it's kind of cool that they're ramping up their research. I think the previous ones, like the AI Scientist or the evolutionary model merging work, were tinkering around the edges of research, and I think this is in that category. Philosophically, it makes a lot of sense. It uses this two-pass mechanism that you're also seeing in embedding models, like cde-small, which was released out of Cornell. But one thing I don't really know is how this relates to representation fine-tuning.

I just searched the paper, and it doesn't mention ReFT, but ReFT was one of the best papers at NeurIPS last year. And it's kind of the same idea: people understand that LoRA layers are good, but tuning representations across the entire model is better. Transformer² kind of does that. It uses inference to do the representation discovery, which is kind of cool.

But in all other respects, it feels like representation fine-tuning. And I guess people are exploring this, but at the same time, I'm not so sure it'll get mass adoption yet, because we haven't seen ReFT get mass adoption.
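For the SVD detail mentioned earlier: as we understand Transformer², each weight matrix W is decomposed as W = U Σ Vᵀ, and a task-specific expert vector z rescales the singular values, giving W' = U (Σ ⊙ z) Vᵀ. At inference, a first pass decides which task the prompt belongs to, and a second pass runs with the adapted weights. In the NumPy sketch below, `classify` is a hypothetical stand-in for the paper's task-identification step, and mixing expert vectors with weights `alphas` is one strategy we assume for illustration. It also hints at the parameter savings: for a 4096 x 4096 matrix, z has 4,096 entries, while a rank-16 LoRA adapter has 16 x (4096 + 4096) = 131,072.

```python
import numpy as np


def adapt_weight(W, z):
    """Rescale W's singular values by the expert vector z: U diag(s * z) V^T."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return (U * (s * z)) @ Vt  # scaling U's columns == U @ diag(s * z)


def two_pass(x, W, expert_vectors, classify):
    """First pass picks mixing weights over experts; second pass uses the adapted W."""
    alphas = classify(x)  # hypothetical task identifier returning one weight per expert
    z = sum(a * zk for a, zk in zip(alphas, expert_vectors))
    return adapt_weight(W, z) @ x


rng = np.random.default_rng(0)
W = rng.standard_normal((5, 5))
x = rng.standard_normal(5)
experts = [np.ones(5), np.linspace(0.5, 1.5, 5)]  # made-up "math" and "code" vectors
print(np.allclose(two_pass(x, W, experts, lambda _: [1.0, 0.0]), W @ x))  # True
```

An all-ones z leaves W unchanged, which is why the usage line recovers plain `W @ x`. In practice the SVD would be computed once, offline, rather than on every call.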

And then just one more paper this week, on yet another research trend: inference-time scaling. But this time it's not inference-time scaling for LLMs; it's for diffusion models. Diffusion models are what typically gets used for image generation. Diffusion means that you start with a bunch of noise and then do multiple steps to remove the noise, moving the image toward something that is correct given some text. So typically one way to get better images is just to do more denoising steps, more iterations of refining your image toward the best denoised variant of it. As for training, typically you take a real image, add noise, and train the model to do these denoising steps.

Well, this paper looks at what else you can do beyond more denoising steps to get better outputs. One thing they find is that you can search over the noise itself. So you don't just do more denoising; you also try different noise and find the best output for your diffusion model that way. I suppose that's a somewhat big deal, right? Inference-time scaling is the hot thing now in LLMs, and image generation isn't seeing that much competition, I think. But this could be one of the differentiators for ChatGPT, for Gemini, for the multiple places competing on text-to-image. It could be a significant part of what gets you that last delta in the quality of your output.
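The simplest version of searching over noise is random search, or best-of-N: draw several starting noises, run the sampler from each, score the results with a verifier, and keep the best. The paper also explores more elaborate search strategies; this sketch covers only the random-search baseline, and `toy_sampler` and `toy_verifier` are hypothetical stand-ins (a real setup would run a diffusion model and score images with something like a CLIP-based or aesthetic scorer).

```python
import random


def best_of_n(sampler, verifier, n, dim, seed=0):
    """Random search over initial noise: keep the sample the verifier scores highest."""
    rng = random.Random(seed)
    best_score, best_sample = float("-inf"), None
    for _ in range(n):
        noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        sample = sampler(noise)  # one full denoising run from this starting noise
        score = verifier(sample)
        if score > best_score:
            best_score, best_sample = score, sample
    return best_score, best_sample


# Toy stand-ins: the "sampler" deterministically maps noise to an output,
# and the "verifier" prefers outputs close to a target.
target = [1.0, -1.0, 0.5]
toy_sampler = lambda noise: [0.5 * z for z in noise]
toy_verifier = lambda s: -sum((a - b) ** 2 for a, b in zip(s, target))

score_1, _ = best_of_n(toy_sampler, toy_verifier, n=1, dim=3)
score_16, _ = best_of_n(toy_sampler, toy_verifier, n=16, dim=3)
print(score_16 >= score_1)  # True: the 16 candidates include the single one
```

Compute grows linearly with N, which is the inference-time scaling knob here: more candidates and more verifier calls, and, with a good verifier, better expected quality.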

I'm a little more skeptical. I don't know what I learned from this paper; diffusion models are the original inference-time compute models anyway. So I guess they're trying to improve on that, but the title itself is very cynical, like, let's stuff the current hot keyword into the title to make it hot. I'd rather focus on the results. Yeah. I don't know. I mean, I wouldn't say it's so cynical, because ultimately it's similar to what you often do with inference-time scaling, where you search in the space of possible outputs. In this case, the outputs are... Yeah, that's what diffusion models have done, right? With CLIP-guided diffusion, that is...

Yeah, but usually they take a linear path where you just denoise, denoise, denoise. Here, they're saying maybe you can denoise in different ways and get better outputs that way. So I don't know. It is novel. Maybe I would say it's not going to get you as much improvement as inference-time scaling does for LLMs. And on to policy and safety, and yet another story about chip restrictions.

So there are these new guidelines and restrictions for exporting US-made AI chips, known as the interim final rule on AI diffusion. They introduce some new categorizations, with three groups: strong allies like Japan and South Korea, adversaries like China and Russia, and a third group covering most other countries.

Each of these groups faces different levels of restrictions on AI chip purchases. Countries in that third group, like Mexico, Portugal, and Israel, now face a cap of 50,000 GPUs per country, which is kind of surprising. We've seen most of the restrictions on China, for example; now they apply to many other countries. NVIDIA has criticized the rules, which are not yet in effect, as "unprecedented and misguided," because it does seem like a very big move to once again restrict NVIDIA's ability to sell to other countries in a very significant way. Yeah, I don't know what's actually going to go into effect, but our friends at SemiAnalysis did a very, very good post breaking this thing down line by line: the actual impact, and which data centers are actually getting hit in different countries. As you know, there's a lot of global build-out. For example, Malaysia is a country where there's been a ton of investment from Oracle and NVIDIA in data center build-out.

So there's a question mark on how some of these things will play out. The other thing is that the restrictions are not geography-based but ownership-based. So, for example, if you are Volvo, a car maker in Sweden, you're actually majority-owned by a Chinese company, so you would also be limited in GPU access. So there are a lot of implications to this thing. But also, you know, we're going to get a new administration in four days. Right. Yeah. It's hard to say what will happen with all of this policy. This is coming from the executive branch, and these are just guidelines and restrictions. So when Trump comes in, it's a real question mark as to what happens with all of this.

And speaking of the last set of things the Biden administration is doing, they also signed an executive order to accelerate the development of AI data centers powered by emissions-free electricity. It involves the DoD and the Department of Energy leasing federal sites for these data centers.

Yeah, it seems like another example of them clearing out their priorities and signing a lot of executive orders. This one addresses one of the big limitations: how hard it is to get permits and so on for data centers. Again, it'll be interesting to see what happens as the new administration comes in.

Trump will probably just expand this rather than reverse it. Trump is pretty pro-AI anyway. So hopefully they've talked. I don't know. Yeah, I think that's the expectation. Exactly. And speaking of that, the next story is that OpenAI has new guidance: an economic blueprint putting forth what they think is a good version of AI regulation. As you might expect, it calls for increased federal investment in power and data infrastructure to support AI data centers. They also say the government should streamline industry engagement on national security and establish export controls for these models. And they are advocating for using publicly available information for model training, which speaks to the copyright question, and so on. So yeah, regulation in the US is an open area, pretty much in flux, so it's not too surprising that OpenAI is trying to influence where it goes. It's interesting that they released this blueprint just as a new administration is coming in.

And one last story in this section, dealing more, I guess, with societal impacts: not policy and not quite safety, but sort of related. This is about a Pew Research Center survey that found that 26% of US teens aged 13 to 17 have used ChatGPT for schoolwork, which is double the share from two years ago. Although I don't know what the number was two years ago, because that's right around when ChatGPT came out, like a couple of months after. So I guess it's roughly that. Anyway, over half of the teens also believe it's acceptable to use ChatGPT for researching new subjects, and a smaller share, more like 20%, also approve of using it for essay writing.

So I guess it's interesting to get a glimpse. It's often surprising to me and to people in the AI space how little impact ChatGPT and these LLMs are having, and how many people aren't using them or aren't even aware of them.

Seeing that a quarter of US teens are using them, it's clearly already having a massive impact on education, and that's one of the areas where it's very disruptive. So this really paints a picture of education and schoolwork as an area that needs to evolve now that we have tools like this. It's always a surprise that the numbers are so low. Why is it not 100%? I don't know. Strange.

And moving on to the last story, just one more in this section, synthetic media and art. This one deals with AI copyright disputes, specifically Kadrey v. Meta Platforms, one of the ongoing challenges by authors and IP holders over the use of copyrighted content.

There's now a new deposition. We talked about the LibGen dataset last week, and this is the deposition of Mark Zuckerberg, so actual statements he made as part of the case. The YouTube part of the story, the headline, is that he said:

"For example, YouTube, I think, may end up hosting some stuff that people pirate for some period of time, but YouTube is trying to take that stuff down." Again, this is about LibGen, which contained pirated content. He also said, "the vast majority of the stuff on YouTube, I would assume, is kind of good and they have license to do." So, I don't know, I guess the YouTube angle isn't really worth highlighting.

There were some other things that happened in this deposition. Zuckerberg claims he hadn't really heard of LibGen. He says, "I get what you're trying to get me to give an opinion of LibGen, which I haven't really heard of. It's just that I don't have knowledge of that specific thing." Yet another thing he said: "So would I want to have a policy against people using YouTube because some of the content may be copyrighted? No."

Lots of statements by Zuckerberg in this deposition, nothing particularly surprising, but it does show this case actually giving us some updates and interesting details, when we haven't seen many of these legal battles make progress or share any updates on what's going on in them.

And that's it for this episode. Thank you to our listeners for listening to yet another episode of Last Week in AI. As always, you can go to lastweekin.ai for the newsletter and lastweekinai.com for the show notes for this podcast, with all the links if you want to open them in your browser.

Thank you, Shawn and Alessio, for co-hosting. Once again, Latent Space is a very cool resource. Check it out. And yeah, again, thanks for co-hosting. Yeah, thanks for having us. Yeah, it's a real pleasure. I think you are doing very important work. I refer to your notes all the time. So it's very special to be contributing for the first time.

Once again, thank you to the listeners for any comments you leave and to the people who joined the Discord. It's fun to see you chatting a little bit and to hear about your backgrounds. But more than anything, thank you for listening and for tuning in week to week, and enjoy our AI-generated outro song. Get around and lend your ear to the news of AI.

Welcome to this week's AI tale, where the future's now, and it's full sail.

From the inbox to the sky, text top of the town, AI in Gmail is doing it for free, turning the world around. Drones for battle, factories they rise, Titan Transformers, breaking new skies. As we sail today, as far as unveil, the future's unfolding, let us sail. Episode 197, we're up for the ride, in the world of AI, nothing's left to hide.

In a world where AI takes center stage Episode 197 Turn the page Stories of innovation Text printable From inboxes sky See what the eyes meet Genius tricks All AI's fail Smart braved eyes And it helps beyond the drones Of the rise Building dreams of flights Transforms Titanic Reach a new height

The future unveiled, stories of wonder, where your eyes mail. Turn and listen to the tales we tell. Episode 197, here the story swells. Building walls, here I step in.

Breaking all walls Innovation's here to change the game That's weak in AI, make it a reign Stories unfold like never before Turning discoveries into folklore Hear the buzz of factories alive With drones and titans, tech will thrive