#113 - Nvidia’s 10k GPU, Toolformer, AI alignment, John Oliver

Last Week in AI

Hello and welcome to Skynet Today's Last Week in AI podcast, where you can hear us chat about what's going on with AI. As usual, in this episode, we will provide summaries and discussion about some of last week's most interesting AI news. You can also check out our Last Week in AI newsletter at lastweekin.ai for all these articles and more. I am one of your hosts, Andrey Kurenkov.

And I'm the other one of your hosts, Jeremy Harris. It's great to be here for another one of these things. I mean, man, it doesn't slow down, does it? It doesn't. No, there's just more and more. And there's yet more ChatGPT news to discuss. But now, I think, all of AI is accelerating because of ChatGPT in a way, right? So we have a ton of other stuff going on that we'll get into.

Yeah, I kind of find it interesting, too, how it's all because of ChatGPT. And then in a way, it's all because of GPT-3. It's all because of GPT. It's so hard to tell exactly when this moment started, whatever this moment is. But it does seem kind of like people are just...

now seeing all these opportunities to build on top of language models using things like LangChain or making Toolformers or things that take action in the world, but that are fundamentally these language models. It's a fascinating time. In your mind, is that the root of this acceleration or is it coming from more compute power or something else?

I think, I mean, we've seen there was a wave of hype around GPT-3. On Twitter, there were many applications and there were many little startups, but I think now it's just gone so mainstream that even if you're not in AI, even if you're not a software person, you know that this is there now. And even if you're not an investor in tech, you know that it's there now. So,

I would say it's just like, it's been building up to this point and now we're here, right? Yeah.

Yeah, I guess in a way, you're right. The thing with ChatGPT and things like that is it just makes it so much easier for people to argue their way into spending huge amounts of money on bigger and bigger and more scaled systems. So it's all kind of linked. Yeah. Well, let's go ahead and dive into our news stories. First up, we have our applications and business section, starting with...

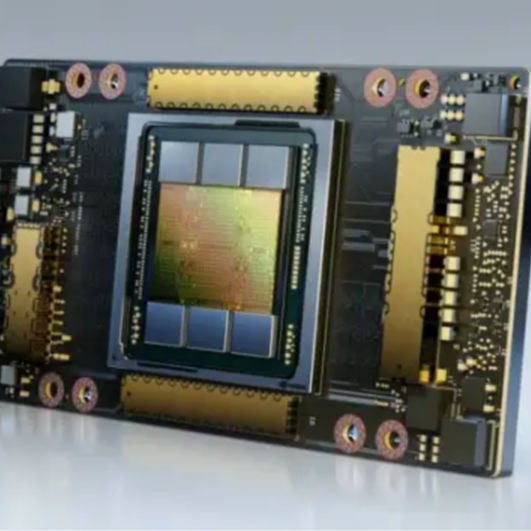

the article "Meet the $10,000 Nvidia chip powering the race for AI." So what did you make of this one, Jeremy?

Well, I thought this was interesting. I mean, for people who are kind of in AI, it's not going to be news to you that, you know, the NVIDIA A100 is the GPU that you use. But I found this article useful because it kind of provides a bit of an overview of what the landscape of GPU compute looks like.

For one, they put a hard number to Nvidia's market share, which I think everyone in AI has a sense that they're super dominant, but you maybe didn't realize that it was 95% dominant. That's pretty impressive.

A couple of numbers that just always help to flesh out people's understanding of what it means to be in the game when it comes to scaled AI. They mentioned it's pretty typical to use hundreds or thousands of these $10,000 A100 GPUs. It gives you a good order of magnitude sense. We're talking about millions and tens of millions and hundreds of millions of dollars for this infrastructure.

And they gave this specific example of Stability AI, which a lot of people will know from Stable Diffusion and these image-generating models. And they were talking about how they went from having 32 A100s last year. Again, that's, what is that? $320,000 worth of A100s to having over 5,400 this year. So we're talking about here just a huge

number of dollars being spent on GPUs for this company and sort of starts to focus the mind in terms of how important these things are strategically. Jensen Huang from NVIDIA. Oh, sorry. Yeah. Yeah, exactly. Strategically, it's crazy in a way where it's not...

Literally that these companies have supercomputers, but they're spending the money that you could use to build a supercomputer. And that is just if you want to do large scale AI for things like language models or text to image or really a lot of things, you're going to need to have millions and millions of dollars for this sort of thing.
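To make the order-of-magnitude point concrete, here is a quick back-of-the-envelope sketch. It assumes a flat $10,000 per A100, as quoted in the article, and ignores everything else a real cluster needs (networking, power, hosting, volume discounts), so treat the totals as rough lower-bound illustrations rather than actual spend. The function name `fleet_cost` is made up for this example.

```python
# Rough GPU hardware cost, assuming a flat $10,000 per A100 (the article's figure).
# Real deployments also pay for networking, power, and hosting, which are ignored here.
A100_UNIT_PRICE_USD = 10_000


def fleet_cost(num_gpus: int, unit_price: int = A100_UNIT_PRICE_USD) -> int:
    """Return the hardware-only cost in dollars for a fleet of identical GPUs."""
    return num_gpus * unit_price


last_year = fleet_cost(32)      # Stability AI's reported A100 count last year
this_year = fleet_cost(5_400)   # "over 5,400" this year, so this is a floor

print(f"32 A100s:    ${last_year:,}")
print(f"5,400 A100s: ${this_year:,}")
print(f"Growth:      about {this_year / last_year:.0f}x")
```

Under that assumption, the jump is from roughly $320,000 to at least $54 million in GPUs alone, which is where the "tens of millions of dollars" framing comes from.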

Yeah, 100%. And that does mean more revenue for NVIDIA too. This is where the article talks about Jensen Huang. You can kind of imagine him sitting at his desk back in November, October, mapping out what is 2023 going to look like for me? And he's got a certain set of numbers on the board. And then ChatGPT rolls around, Bing Chat rolls around and boom, he basically was talking about how

We've had to reassess our goals as their stock's up 65% or whatever on the year, whatever it is. So like, you know, Nvidia really a big beneficiary of this. And as he says, you know, there's no question that whatever our views are of this year, as we enter the year, it's been fairly dramatically changed as a result of the last 60 or 90 days. So, you know, big changes happening fast and, you know,

Anyway, a bunch of really interesting numbers in this doc, and they do talk about the next generation as well, the Hopper H100. Obviously, the A100 is not the end of the line. And maybe one last thing to mention here, if you're not following the compute space, the H100 is specifically designed for transformers.

So we're not talking anymore about a GPU that's designed for deep learning, but specifically what kind of deep learning model are you training? Oh, you're training a transformer? This is hardware just for that, which is just a reflection, I guess, of the fact that attention is all you need and transformers are increasingly looking like all we need, or at least a big part of it. And amazing to see actual hardware reflecting the broad use of transformers.

Yeah, it's super interesting. It used to be that GPUs were powerful or very much liked because of their general purpose, right? You can put anything you want in there. And now there has been a bit of a convergence around this one type of neural net. And now we still have GPUs, but they're starting to be a little more specialized, which is really interesting.

So as usual, we'll have links to all these stories in the description. You can check out the story for more. It's pretty good to know about all this NVIDIA GPU stuff. But

We're going to jump ahead to our next story. Generative AI is coming for the lawyers. And this is a pretty nice detailed article from Wired about kind of the current moment in AI being used for law. There was just recently a pretty major news story about companies

law firm contracting with this company, Harvey, and this article discusses how another firm, Allen & Overy, is now using Harvey. And it has this cool story where Allen & Overy

started trialing the tool in September of last year. And then they were just kind of giving it to some of the lawyers to answer questions or draft documents or, you know, write messages. And, you know, it started small and now they have, you know, thousands of people, like literally 3,500 workers across, you know, everywhere using the tool,

just in a couple months. And now it's kind of becoming more official where they are in an official partnership and this tool, Harvey, is probably going to

grow a bunch more. So yeah, it's a good article discussing these recent trends and how there's been a lot of chatter about AI and law, but right now it's looking like, you know, it's really going to be there and it's going to be used by every company pretty soon.

Yeah. And, and especially interesting given the risk profile of the legal profession. Like you imagine, you know, you have a bunch of interns. Normally you trust your interns to go through legal precedent and look at old cases and things like that and, and comb through them carefully. Um,

What happens when you start to outsource that to an AI? What if something goes wrong? And do you start to lose that kind of, I don't know, that, I don't want to say work ethic, but the habit that people are in of consulting the raw materials themselves? I don't know if that's something they talked about in the article. Yeah, they do. They discuss how we do have an issue with these things where there's hallucinations with language models and...

They talked to the CEO and founder of Harvey about some of the technical ways they're handling this. So it's not just a language model; they are fine-tuning it on a massive corpus of legal data sets. So that helps. And then also this company, Allen & Overy, said that they have a careful risk management program around technology. So I think

I would say as people start using it in general, I would be a little bit optimistic, actually, that they would be careful and not just sort of trust it fully.

Yeah. I mean, I personally think there's huge potential to use large language models for summarization of large legal documents for, I mean, the number of times, I'm sure you've done this a lot. You kind of hit, yes, I accept the terms and conditions. And I'm sure my firstborn son has been sold off to a number of different megacorps by now. But like,

That's the promise of these tools is to be able to level the playing field a little bit maybe between people who can't afford legal degrees and people who can afford armies of lawyers to kind of like

write up all these documents. So I'm curious about that aspect too, long-term. Maybe that ends up making human agreements easier to draft and abide by and reducing the number of lawyers that have to get involved. That's not going to hurt anyone's bottom line, I guess, except for the law firms. Yeah, that is interesting. Maybe less reliance on lawyers if we can understand the law stuff as well.

But moving on to our lightning round with some other stories, we have "Vicarious Surgical cuts 14% of staff." Vicarious does robotics research on surgery, and it looks like they're cutting some staff.

Yeah. So, Vicarious, as you say, it's this company that's focused on a specific kind of hernia surgery. There are millions of hernia surgeries in the US every year, and then about half a million of them qualify as the specific kind of hernia surgery that Vicarious Surgical wants to go after. Their tech, yeah, surgeons remotely control these robotic arms that carry out the surgeries.

And this falls in an interesting time for robotics. You know, I've talked to a lot of friends in kind of VC and like in the startup world. And one of the things that I keep hearing is that the talk about ChatGPT, the talk about large language models, is kind of sucking the air out of the room for a lot of these robotics companies. And they kind of want to be like, hey, look over here, like we're doing interesting shit, too.

And I guess, you know, it's unclear whether that's directly the cause here, but certainly a cut happening with Vicarious. And they are going to be using that to make more budget room for R&D. And so that's, you know,

So probably a good thing given the additional leverage that a lot of new tools have introduced. So maybe they're looking at exploiting some of the sort of action transformer stuff that we've been talking about and we'll talk about. But they now have two years of runway. So it should be enough to kind of weather the storm. But Vicarious Surgical is definitely a big and emerging player that a lot of people are excited about. So I figured it was worth flagging.

Yeah, yeah. I think it's also probably more of a broader trend where a lot of startups, like we've seen giant companies, Google, Facebook, lay off 10% of their staff, right? And I think startups are definitely maybe more hesitant to hire if they are not downscaling as well. And related to that, our next story is "Alphabet layoffs hit

trash-sorting robots." And this is about how there is this division, Everyday Robots, within Google that

was originally an X moonshot, and the team was basically trying to get robots to do useful things around the office. So they had these moving robots with arms that could clean cafeteria tables or separate trash and recycling and various things. It was kind of a mix of a product group and research group.

And now it's gutted and it's over, which is kind of sad because it was doing cool work. Yeah, it's sort of surprising. I am really curious about the sorts of decisions that get made in these down markets. Robotics, I would have thought, would be close enough to the AI hype cycle. And I don't particularly think it's a hype cycle. It's just that AI can now do so many exciting things. It's surprising sometimes to see companies pull away in quite this way.

We've seen Facebook, for example, I think withdraw a little bit from the metaverse stuff and redirect towards AI. So Microsoft investing more even in the down market and all that. So anyway, interesting to see where the cuts get made and where they're not getting made and maybe a bit of a surprise here from Google or from Alphabet. Yeah, I know. They've been doing really, really cool stuff. I think my impression is it was just...

Too much money. This article says they have over 200 employees, which is quite a lot. And it does say that parts of it will be absorbed by other places within Google Research. So it's not fully gone. Yeah. Yeah.

And next up, we have "Amazon's cloud partners with startup Hugging Face as AI deals heat up." So Amazon announced just last week that it will collaborate with Hugging Face, which is this company that does kind of a variety of things. One of the many things it does is hosting

AI models that do various things and also demos. So you can just go to the site and run things directly or get the code or other kinds of things. And this is pretty interesting, I would say. What do you think, Jeremy?

Yeah, well, I think obviously Amazon through AWS and any number of other arms has huge distribution. So maybe more of a distribution play for Hugging Face here. And I think Amazon also wants to get more in the game of generative AI. When we think about the big players right now in the space of generative AI, obviously there's Google, DeepMind, OpenAI, Microsoft, Anthropic.

And then you start to cast about. And it is surprising that Amazon hasn't risen to the top of the list as much as they might otherwise have. They're doing more and more stuff in that direction, but maybe this is another big step on the way to participating more fully in the generative AI race as it's increasingly looking.

Yeah, I'd be curious to see where this leads. It feels like maybe it'll be making it easier to deploy things on AWS, but maybe there'll be yet another language model. We'll see. Yeah.

And then on the consumer side, our last story is "Spotify's new AI-powered DJ will build you a custom playlist and talk over the top of it." So this was just announced. There's a small video where, you know, it's kind of trying to mimic a radio DJ that gives a bit of commentary and then you go between different tracks and, uh,

Honestly, it's not super impressive. I feel like this is not using anything too fancy. We've had AI playlists for a long time. So I think this is interesting from kind of another, you know, hype cycle aspect where now Spotify had to get into this and make an AI powered DJ where it's not exactly an awful idea.

Yeah, I mean, again, interesting how, so there, I guess there are two interesting things here. Like one of them is, you know, you kind of sometimes feel like people have a hammer in search of a nail, you know, like they've got this amazing tool, they know AI can do new stuff, and then they're trying to shove it into as many places they possibly can. And so, you know, the startup founder in me looks at this and goes, okay, that's not how you build product.

But there is an important difference here. This is a set of tools that are much more malleable than the kinds of tools that we're used to productionizing. Usually you build a website, you build an app, and a button can only do one thing. And you build these very specific sets of buttons and the whole thing does one function. Whereas you're always a prompt away with these language models from changing the kind of value they can offer to end users.

So maybe it's the case that companies aren't actually going to find that they're throwing their money away, even as they just test out a harebrained idea, because it's so easy to tweak that idea just by changing a prompt, just by retraining or fine tuning or whatever. And maybe that's a fundamental difference between what it means to develop product with AI today versus doing product work five, 10 years ago without AI.

Yeah. And I think another aspect here is if you kind of look at the demo, my impression is, um,

It's not really just using a language model. It's more like the things that the DJ will say are kind of pre-written. There's like a template and a lot of human writing going on with maybe a bit of room for improvisation. So I think we also see this where a lot of companies will announce features that are, you know, AI. And you've already seen this, right? Where it's AI, but

It's not doing anything too fancy. It's not necessarily using GPT-3 that much. So good to know. And moving on to our research and advancement stories, starting with "Toolformer: Language Models Can Teach Themselves to Use Tools." So you flagged this one, Jeremy. What did you think of this?

Yeah, I mean, very cool. And also from a safety standpoint, yeah, this is one of those papers that shows you that, you know, the set of things you thought these language models can do,

Maybe is a little bit bigger than you might have thought. And they're basically talking about a technique that you can use to get these large language models, not only to learn, like we're not just talking about prompting them and giving them examples of using APIs or tools in their prompt.

But actually finding a method to have these language models teach themselves how to use new tools. And so this is kind of a, I think it's interesting from the standpoint of like this basic question people always have about a new model when it comes out is like, what can this thing actually do?

That's an important question because sometimes language models, or models more generally, have capabilities that are dangerous, malicious. The companies that develop them don't necessarily know what those capabilities are when they finish building the model. Famously, you could use GPT-3 when it came out to write phishing emails. OpenAI hadn't realized that at the time. They just had this thing that was good at doing autocomplete and surprise, it has all this cluster of malicious applications.

And so this is kind of another step in that direction. If we have these systems that can teach themselves to use any kind of tool and the kinds of tools they play around with here are things like calculators, calendars, Wikipedia search engine, machine translation engine. So, you know, non-trivial tools. If you can get these systems to figure out how to use those tools, then like you start to think, wow, how could I even conceive of the range of applications of this model?

And that's, yeah, focused on the malicious stuff. There's also a really good side to this too. Holy crap. How useful is this? I mean, like a general purpose tool understanding model that you could prompt in plain English to do anything you want, like to use any tools that you want. I mean, it's, it's fascinating. And I think a really interesting trick that they're using to pull this off. And anyway, yeah, I thought a really, really cool, cool leap here.

Yeah, no, this is super exciting. I think combining language models with these APIs is very powerful and something we're just sort of starting to explore. And this paper is pretty fun. I think it has a neat idea at its core where basically you do have to start out with--

You do need to provide sort of a template of the API. You do need to give an example or two. And the general idea they have is given some data set, you can add some API calls and just sample them randomly kind of and see which ones

make it easier to predict the correct text and which ones don't. So as far as teaching themselves, the idea is you can collect more data and then do fine-tuning on that data, which is pretty neat. I will say that...

This does limit the complexity of the API that can be used. So these things, calculator, calendar, these are fairly intuitive things, I would say, where you don't need to provide anything more than the text input, really.
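The "sample some API calls and keep the ones that make the text easier to predict" idea can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: `Candidate`, `keep_useful_calls`, the bracketed call format, the `threshold` value, and especially `toy_loss` (a word-overlap stand-in for a real language model loss) are all assumptions made up for the example.

```python
from typing import Callable, List, NamedTuple


class Candidate(NamedTuple):
    prefix: str        # text before the proposed API call
    call: str          # hypothetical call text, e.g. "Calculator(400/1400)"
    result: str        # what the API returned for that call
    continuation: str  # the text the model is supposed to predict next


def keep_useful_calls(
    candidates: List[Candidate],
    loss_fn: Callable[[str, str], float],
    threshold: float = 0.5,
) -> List[Candidate]:
    """Keep only calls whose result lowers the loss on the continuation."""
    kept = []
    for c in candidates:
        loss_without = loss_fn(c.prefix, c.continuation)
        augmented = f"{c.prefix} [{c.call} -> {c.result}]"
        loss_with = loss_fn(augmented, c.continuation)
        # A call is "useful" if seeing its result makes the next text easier to predict.
        if loss_without - loss_with >= threshold:
            kept.append(c)
    return kept


def toy_loss(context: str, continuation: str) -> float:
    """Stand-in for a language model loss: counts continuation words absent from the context."""
    context_words = set(context.replace("[", " ").replace("]", " ").split())
    return float(sum(1 for word in continuation.split() if word not in context_words))


candidates = [
    Candidate("Out of 1400 participants, 400 passed, which is",
              "Calculator(400/1400)", "0.29", "0.29"),
    Candidate("The sky is blue and the grass is",
              "Calendar()", "Today is Monday", "green"),
]
kept = keep_useful_calls(candidates, toy_loss)
print([c.call for c in kept])
```

With a real model, `loss_fn` would be something like the negative log-likelihood of the continuation given the context, and the kept examples would become the fine-tuning data. The calculator call survives the filter here because its result appears in the continuation; the calendar call is dropped because it does not help predict "green".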

Uh, so if you get tools that are, I don't know, like 3D modeling or, uh, drawing or anything like that, that's not quite what Toolformer is about. It's about, you have an API where you can send a request with some text and you get a response with some text, and that's

about how far it goes. But still, we don't have many data sets with using APIs. I don't really know if we have any really. So this is a cool demonstration that you can collect that without human involvement. Yeah. And it's funny to see how

I don't want to say straightforward, but how cute, how interesting the ideas are now that are coming because we're able to prompt these systems in plain English. You don't really have to think about the execution as much. You can just come up with a concept like, "What if I could teach my model using this little trick?" It's very cheap and quick to test.

And so I feel like that's kind of part of what's behind a lot of these interesting, again, cute ideas that people are starting to explore with getting these language models to teach themselves or do things like that. So yeah, chalk this one up as another example of that trend, I guess. Yeah, maybe.

And then next up, I wanted to jump back a few weeks to discuss some work we haven't discussed, where we are going to get away from language for just a little bit and talk about reinforcement learning and having agents that don't just process some input and produce some output, but are kind of learning from trial and error, which is something that language models cannot do.

So this is a paper from DeepMind that came out earlier this month titled "Human-Timescale Adaptation in an Open-Ended Task Space." And this is in line with DeepMind's kind of long-term mission of seeing how far we can push reinforcement learning, learning by trial and error. And in this work, they showed that if you scale...

in multiple ways. So they use a transformer, they use a Transformer-XL, and they use a massive set of possible tasks of different kinds of specifications.

And they show that sort of like GPT-3 in a way, where you can prompt these language models to do a lot of stuff. Here they show that you can actually have the agent learn by trial and error very efficiently. If you've done all this training on a large set of possible tasks, then just after a few trials, without any sort of changes to the weights,

just via aggregating observations, the agent can actually learn what to do in a task and adapt. And this is using a few different ideas. So it's not simple. There's meta reinforcement learning. There's this large scale attention based memory architecture.

And they have sort of a curriculum as well. But it's nice to see some more progress in this reinforcement learning and real embodied agent space where you don't have a passive model. You have something that continuously interacts with the world to accomplish a goal.

Yeah. And it's also interesting. It kind of makes me think back to that debate that people used to have about what it means for an AI to learn as fast as a human, right? Like, you know, humans are certainly able to learn how to solve new math problems the first time they run into them, provided, that is, that they're not six months old.

Right. And so there's like there's a question of of what counts as the learning phase. And increasingly, it really seems like AI and perhaps a lot like humans kind of has to be front loaded with a bunch of knowledge. And then once you get it past a certain threshold, then in context, learning can take over. Then meta learning can take over and you can learn tasks on the fly and very quickly. It's just interesting to see that, you know,

take shape in a sort of analogous way to the human brain. The brain itself is structured from arguably billions of years of natural selection. So it's been shaped from learning, if you will, across generations in that way, plus through childhood and early life. And then we can start to solve problems in real time. And sort of looking at machines the same way, give them a big fat data set, do pre-training, and then you start to see the kind of learning on the fly bit. So sort of, anyway, interesting to see maybe that middle ground in that debate shaping up.

Yeah, that's a good point. I think it's maybe a pretty good analogy of now...

We do have a lot of built-in structure in our brains for vision, for movement, for language. And that is, you could think of it as what these things are learning from aggregating a massive amount of experience. And related to that, I also wanted to mention, without getting too much into it, another paper from earlier this month titled "RT-1: Robotics Transformer," which is pretty much similar. It's just...

having a giant transformer and a lot of data and having an image of what the robot is seeing and some text instruction. And they show that if you have enough data, you have a large enough model, you can do a lot of things pretty effectively. So I think it is nice to see that

There is still work on embodied agents, which is non-trivial, I will say. I think there are still some major challenges in moving from language to other modalities, and it's pretty exciting to see this progress. So what are your, because I know you're more on the vision side of things, what is your take on the bigger challenges that still exist there?

Well, I think one of them is generally with, for instance, the DeepMind paper, you do need to learn from reinforcement learning and trial and error. And so far, none of these things are learning from trial and error, really. I mean, there's some fine-tuning going on with ChatGPT, but it's not like you're interacting with the world and trying to accomplish some goal and so on. So...

That is a major challenge that I think may be important for AGI, right? Because you would need to be able to learn by yourself without a huge data set, you know, by trying things out. And that's not something you can learn from a data set necessarily, maybe. And the other thing is, yeah, there's just other dimensions of once you get to images and, you know, not just images, observations, text,

there's still a limited context window in these transformers. So that's going to eat up that space real quick. And there is still not a great answer to how to keep increasing that context window or add some sort of memory. Because right now, it's just there is a limit to how much you can scale these current architectures. Yeah, it sort of makes me wonder--

Well, actually, maybe this will be one for next week because I just saw this article earlier today. I think it was from Meta comparing the structure of the brain to the way AI systems work and kind of deriving some rough guesses about what's missing to get to AGI based on that. But what would the closest equivalent to a context window be for a human? And how long is that? Anyway, these are all kind of really fascinating questions that you're

prompting me to think about, but maybe we can do that next time. Maybe next week. Maybe next week. Yeah, yeah, yeah. And now we're going to just wrap up with a few more stories. So first up, "Meta heats up Big Tech's AI arms race with new language model." So Meta released LLaMA, short for Large Language Model Meta AI, that...

It's similar to GPT-3, fundamentally kind of the same idea, and has pretty impressive performance, which is interesting.

Yeah, and it's trained on 10 times more data than OPT. I think one of the interesting things we're seeing here is this constant optimization around how much should I scale my data set? How much should I scale my amount of processing power? How much should I scale model size? Obviously, this goes back to, well, GPT-3 first showing, hey, you know what? You make your model a lot bigger, you get good results. And then we got Chinchilla saying, actually, you know what?

you ought to make the model maybe a little bit smaller, crank up your data. And maybe we're seeing that reflected here with this LLAMA, sorry, I had to do that, 10 times bigger than OPT on the data side. And it is going to be available to basically everyone on a non-commercial license. And this is yet another example of Meta doing this

playing this game, it seems, of fast following. So some big lab will come up with a major breakthrough, and then a few months later, Meta comes out with the open source version of that breakthrough. We've seen this happen with a number of other systems. Actually, OPT was an example of that, which was basically GPT-3 scale.

But I think what's really interesting about this, one of the biggest take-homes here is this LLaMA model is competitive with, or the bigger version of it, which has 65 billion parameters, is apparently competitive with Google's Chinchilla and also PaLM, which is a 540 billion parameter model. So it's kind of showing you how not all scaling is created equal. Maybe you can pack a lot more punch in 65 billion parameters than we once thought.

And then their 13 billion parameter version is competitive with GPT-3, which is about 10 times, actually over 10 times bigger. And so it's interesting, you know, you can shave an order of magnitude off your, at least your parameter scaling with data scaling, sort of a cool test of the balance between all these different ingredients.
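For a sense of the size gaps being described, here is a small sketch of the parameter-count ratios. The LLaMA and PaLM sizes are the ones mentioned above; the 175 billion figure for GPT-3 is not stated in this conversation and is the widely reported size, included here as an assumption. The helper `size_ratio` is made up for the example.

```python
# Parameter counts in billions. GPT-3's 175B is the commonly cited figure,
# added here as an assumption; the other sizes are the ones quoted in the discussion.
PARAMS_BILLIONS = {
    "LLaMA-13B": 13,
    "LLaMA-65B": 65,
    "GPT-3": 175,
    "PaLM": 540,
}


def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters the larger model has than the smaller one."""
    return PARAMS_BILLIONS[larger] / PARAMS_BILLIONS[smaller]


print(f"GPT-3 vs LLaMA-13B: {size_ratio('GPT-3', 'LLaMA-13B'):.1f}x")
print(f"PaLM vs LLaMA-65B:  {size_ratio('PaLM', 'LLaMA-65B'):.1f}x")
```

Both comparisons come out at roughly an order of magnitude, which is the "shave an order of magnitude off your parameter scaling" point. Parameter count alone says nothing about training data or compute, so this is only one axis of the trade-off.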

Yeah, yeah. It's quite cool. And I think it is quite cool that this is open because it is competitive with GPT-3 and the code is out there. And this was trained on publicly available data. It's, you know, unlike a lot of these things, GPT-3, ChatGPT, you know, it's all out there. So fundamentally, someone could train this themselves if they had the money and the tech. So yeah, it's exciting.

Yeah, it is. And it's also, you know, you think about the malicious applications too, and it's out there for those purposes as well. Everything in AI is a double-edged sword, but I'm excited to see what people start building with this. You know, like what can people do once they can crack these powerful models open and, you know, use the middle layers and all that good stuff? Yeah, yeah.

And next up we have "MIT researchers have developed a new technique that can enable machine learning to quantify how confident it is in its predictions." This is coming from MIT. Not to get too much into it, this is showing new results on uncertainty quantification while using less processing power and no additional data. And I think this is just worth noting, I think,

as one of the problems that is interesting, as we deploy these systems in the real world. Having the ability to not just produce an output from a model, but also produce a confidence score from that model will be, I think, very important. I think that will really help in calibrating when to trust the model, when not to trust it, things like that. Even ChatGPT, you can maybe do something like that. So it's nice to see some progress along those lines.

Yeah. Do they mention anything about the kind of problem of, you know, if you ask a human being... so, okay, you think that, I don't know, you should buy Tesla stock today. And then you're like, how confident are you that you should buy Tesla stock today? And if the human being is, let's say, a really stupid person, they make the wrong call, but then they might be really confident about the call, because their mental model of the world is so broken that it not only makes their prediction wrong, it makes their uncertainty estimate wrong on that prediction. In other words, the uncertainty is only as good as the base model. Is that part of what they discuss?

Well, yeah, that's basically one of the challenges: uncertainty calibration. When you're calibrated, you have the right kind of output of uncertainty.

Definitely, if you're dealing with out-of-distribution data, for instance, you might have some issues quantifying your uncertainty well. So it's a challenging area. And this is nice primarily because it's creating a simpler and cheaper approach that you could maybe deploy. But there's been a lot of work on it. So I think it's possible to be a little bit robust. Nice.
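To make the calibration point concrete, here is a minimal sketch of one common way to measure it, expected calibration error (ECE): group predictions by stated confidence and compare each group's average confidence with how often it was actually right. This is not the MIT technique discussed above; the function name, the bin count, and the toy data are all assumptions for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and observed accuracy.

    confidences: list of floats in [0, 1], the model's stated confidence.
    correct: list of bools, whether each prediction was actually right.
    """
    assert len(confidences) == len(correct) and confidences
    n = len(confidences)

    # Group predictions into equal-width confidence bins.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Each bin contributes its confidence/accuracy gap, weighted by its size.
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece


# Hypothetical toy data: both "models" claim 90% confidence on every call.
overconfident_hits = [True] * 5 + [False] * 5   # right only half the time
calibrated_hits = [True] * 9 + [False] * 1      # right nine times out of ten

ece_overconfident = expected_calibration_error([0.9] * 10, overconfident_hits)
ece_calibrated = expected_calibration_error([0.9] * 10, calibrated_hits)

print(round(ece_overconfident, 3), round(ece_calibrated, 3))
```

On this toy data the overconfident model scores about 0.4 and the calibrated one about 0. That mirrors the Tesla stock example: the broken model is wrong and also wrong about how sure it should be.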

And jumping on to a very much real-world application, we have machine learning makes long-term expansive reef monitoring possible, about how conservationists can now monitor climate change impacts on marine ecosystems over long periods of time using a tool called Delta Maps, which assesses which reefs might be best for survival and can tell you where to target your preservation efforts, according to a scientist. And you can use this tool to examine the impacts of climate change on connectivity, biodiversity and more in large marine ecosystems. So it's basically like a triaging strategy for reefs. Yep.

Yeah, good to have. And again, we'll see a lot of these things with climate change. Yeah, that's the ultimate out-of-distribution problem too. Yeah.

And last up, we have how AI can help design drugs to treat opioid addiction. We've seen similar research before; this is using AI to help explore drug compounds that might block a particular receptor called the kappa opioid receptor. And yeah, they found that you can go through a lot of options and find some promising variants. And obviously, this is a huge deal if we can make progress, because every year more than 80,000 Americans die from opioid overdose. So it's an immensely large problem, and it's interesting to see that AI is now helping on that front.

Yeah, it's another one of those kind of problems I think we were talking about maybe a couple of weeks ago, this idea that a lot of the problems that are left, especially in things like biochemistry or biomedicine, are these high-dimensionality, high-data-volume problems that humans just haven't been able to parse. There's no way that a human can look at the structure of a protein or the structure of a receptor or something and be like, oh, okay, those will work together. And so now AI is just the perfect tool for a lot of these things, and it's exciting to see all the progress being made as a result now that we're unlocking things through the virtue of scale. Yeah. Yeah.

Jumping on to our policy and societal impacts section. First up, we have Planning for AGI and Beyond from OpenAI, which is kind of, I don't know what I would call this, a position paper, a sort of editorial perhaps, on how OpenAI views the path forward in AI and how to go about it. What was your impression, Jeremy?

Yeah, I agree. I think position statement; I think manifesto maybe sounds a little too Ted Kaczynski-ish. But it's a statement about where the future might go according to OpenAI and how they see themselves playing a role there and steering it. This was authored by Sam Altman, obviously the CEO of OpenAI. And there were a couple of excerpts that I think were especially interesting. So one that I thought was especially interesting was this idea, they say: "We want to successfully navigate risks. In confronting these risks, we acknowledge that what seems right in theory often plays out more strangely than expected in practice. We believe we have to continuously learn and adapt by deploying less powerful versions of the technology in order to minimize 'one shot to get it right' scenarios."

And this is a really interesting, I mean, if you're following the kind of AI safety world at all, this is one of the core debates in AI safety. And this debate is about whether we're going to have just one shot to get AGI right.

Whether we build an AGI the first time and if we get it slightly wrong, the thing goes nuts and kills us all instantly, or are we going to be able to iterate our way to it, test things, see how they break and gradually shape the system? In which case, maybe that's a more optimistic scenario.

And there's a really big kind of polarity in the AI safety world right now with some people who feel it's a one-shot get-it-right situation and others who think maybe more iterative. OpenAI definitely falling more on the iterative side, which is why they err on the side of deploying their systems. And they've got an eye on policy too. They're focusing on trying to openly publish their systems so that policymakers and institutions have time to understand what's going on. That's a big theme here. And I think one of the last things I really wanted to flag was, let me just see here.

Actually, there's so many interesting ones. But anyway, one of the things I did want to flag was they were talking about how this idea of AI safety and AI capabilities as being separate things doesn't really make as much sense as people once thought.

We kind of see that with ChatGPT. Again, ChatGPT is not a more scaled version of GPT-3. It's a more aligned version of GPT-3. It's a version of GPT-3 that we've figured out how to steer and control a little bit more carefully. It's just in that steerability that we unlock all the value. Almost no one in the mainstream had heard of GPT-3; everybody's heard of ChatGPT. The thing seemingly that makes the difference perhaps is the alignment component.

The value we can get out of our AI systems today does seem bottlenecked to some degree by the safety piece. So maybe it makes sense to think of these together. Anyway, what were your thoughts on this, Andrey, as somebody who's looking maybe more from the capability side?

Yeah, I think this was a pretty decent read. I wouldn't say I found anything surprising in it. I think a lot of this is pretty much what has been the stance of OpenAI, and this is a bit of a summary.

And yeah, I think some of these highlights, as you said, that AI safety and capabilities should be entwined, is a good point that I found very interesting recently. And then I think it's also cool that this is primarily about the short term, most of it. So it's not actually talking about, you know, AGI. There is a section about it, but it's really talking about the meantime: so far we don't have AGI, so what should we be doing as we seem to be approaching it? And so, yeah, I think it's worth a read if you haven't kind of been keeping up with this. I also find it a little bit interesting that later on it said there should be great scrutiny of all efforts attempting to build AGI and public consultation for major decisions.

I don't know if I can take that very seriously. I'm not sure OpenAI is lobbying for regulation or supporting the EU regulation front. And if you really believe in that, I would say probably you should be just lobbying for regulation from the government. But yeah, I think the comments on what OpenAI is thinking about are interesting and kind of a big deal considering they're building ChatGPT.

Yeah. I mean, and to double click on that point about, you know, regulation and the extent to which OpenAI might be for it, I think one complicating factor too is, it also has to be the right regulation. It's so hard to get this right. And, you know, we've seen things like the EU AI Act that seem to be a little bit off, like things are a little bit sideways in terms of definitions of terms and all kinds of stuff like that. But at the very least, this serves as a call for policymakers who now know what ChatGPT is. They know OpenAI is the company that did it. OpenAI has that credibility. And this, in some sense, is legitimizing efforts to say, hey, you know what? Yeah, there ought to be some real scrutiny. If we're talking about labs that are going to build human-level intelligence, that see themselves as anywhere close to doing that, it does seem pretty insane to just be like, "All right, yeah, you do what you want and we'll check in whenever."

Actually, on that idea of normalization, maybe the last thing I'll mention is in this post, one of the things that Sam Altman does is he says, "Look, a lot of people think of AI risk or existential risk or AI risk in the long term as being this laughable thing."

And he basically says, we take it seriously. In fact, we do think that it could be existential. And again, whatever your views are on OpenAI from a safety standpoint, like do they actually mean this, whatever, this does start to change the mainstream conversation when arguably one of the leading labs in the world that's close to doing this is saying, we think there's existential risk here. And Satya Nadella, the CEO of Microsoft, I think has also come out and said the same.

Yeah, no, I think it is interesting. It's probably going to reach a lot of eyes that haven't been seeing these kinds of things before, which is a good thing. And maybe speaking of that, also a bit of an editorial or perspective, you wrote this next article, what ChatGPT means for a new future of national security. So maybe you could just go ahead and tell us what does it mean?

Yeah, I added this. So for the audience's edification here, Andrey very kindly puts together this amazing Google Doc every week, and we add our articles to it. And I was just like, oh, I'm just going to slide this one in here. It's an article that I wrote in the Canadian context a couple of days ago now. And yeah, basically, this is just arguing that all the things that we talk about here, or many of the things, point the same way: AI is a dual-use technology. You can use it for good and for evil. We've seen things like ChatGPT be used to make new forms of malware. And basically, you think of scaled phishing attacks, information operations. We have breakthroughs in AI that can help us design better bioweapons and things like that. At a certain point, you start to wonder, is it OK? Is it remotely sane for governments to be flying blind through this process?

Should there not be a structure within a government at the executive level, very close to the head of state, that's tracking risk from AI systems? When you talk to people in this space, they'll tell you they fully expect some kind of dramatic AI-augmented malware attack, cyber attack, to cause global scale harm in the next two, three years. The number of years is not long.

And so just the thought that, you know, hey, these governments should probably set up a structure. We call it an AI observatory, at least my team does. And yeah, just to inform on opportunities and risks, also things like workforce disruption. You can imagine that being something that you track depending on where it's positioned. But certainly national security risk, risk from misaligned AI, all these things, we should have eyes on it. That's basically the entire point of the article. I saved you the click here. You're very welcome, everyone. That's about it.

Yeah, I think it's another case where this conversation has been going on. AI has been kind of crazy for a while. But now, if you're behind, if you haven't really gotten around to building a real set of expertise in your government, then you got to do it. And Canada, for instance, does have an AI strategy. I think almost all countries have some sort of AI strategy document. But I think now you need maybe not just kind of talking about it, you need maybe a division, not necessarily a whole branch, but a set of people that are keeping up with AI and can think about it and, you know, do what needs to be done. So it's a good point, and I'm sure we'll see more discussion on this front as well.

Well, yeah. Just a last quick note on that front. You've got a lot of countries with AI strategies. They're mostly acceleration strategies. They'll be like, hey, how do we get our country to contribute to the cutting edge? Rather than saying, holy shit, we have AI systems that can cause real harm, and the curves are all going vertical right now in AI capabilities, and saying, okay, should we start to, you know, think about counterproliferation language? Should we start to think about national security language? And that language does not exist right now. So, you know, it's something that I'd be eager to see a lot more governments jump in on. And anyway, that was my article from the Canadian perspective on that.

Cool. As before, we'll have links. So if you want to check out more detail, go ahead and go over and click on it. And we're going to move on to our lightning round, starting with AI Alignment and Uncalibrated Discourse in AI. So this is from an AI research scientist, Nathan Lambert, from his Substack. And it talks about, as the title says, discourse kind of within the AI community, going into things like how you can categorize people who are about AI safety as not wanting to cause risk, but then there's people who are more specifically about existential risk, there's people on the alignment side, on the ethics side, and various camps coming up now with different views. So yeah, what was your takeaway, Jeremy?

Yeah, no, to your point, I think he was highlighting something important here. It read to me not like a post that necessarily had a clear point. It was more like a lot of points about specific areas of disagreement definitionally. So yeah, Andrey, you mentioned this idea that AI safety isn't just one thing, right? We have people who say they work on AI safety, but what they really mean is, I work on making sure that my robot doesn't accidentally roll over, drive over a human being while it's doing its work. And then there are other people who go, no, no, I'm working on AI safety, and by that I mean AI existential risk mitigation. So how do we prevent AI systems that are smarter than human beings from basically wiping us out the moment they're created, which a lot of people expect to happen. And then everything in between, right? You've got AI ethics people who worry about bias and who worry about, you know, kind of harmful language and so on.

And so there's the problem of getting all these communities to see eye to eye and not see each other, weirdly, as they kind of do sometimes, adversarially. There's almost the sense that there's a finite amount of energy that the public has to dedicate to whatever we'll call responsible AI or something, and that, you know, what existential safety gets, bias doesn't get, or something like that. And I think he's kind of trying to chip away at this idea in part in this article, which I thought was, you know, a laudable thing to do. I mean, we've got a definite issue here in terms of people kind of rowing in the same direction, let's say.

Yeah, I think that's a good point. And I think something he doesn't get into, but I think is worth getting into, is the fact that AI ethics and AI safety are almost different camps, somewhat strangely. So people concerned about bias and discrimination, or really just erroneous outputs in general, but not so much AGI, that's AI ethics, more present-day concerns. AI safety, AI alignment is a bit more forward-looking. And I would say hopefully these two will come closer together, because it's all alignment at the end of the day, right? So it's strange.

Yeah, no, I think that's a really good point. And it's only recently that it's become, I think, possible to argue that very credibly, but it really does seem possible that we're going to see a convergence between those things. Ultimately, you're talking about being able to control the behavior of an AI system, whether that system is super intelligent or just subhuman. As our systems get smarter and smarter, the AI ethics people have to deal with systems that look closer and closer to those long-term outlook systems. Anyway, it's an interesting dichotomy and maybe ultimately a false one. Yeah.

Next article we got, how I broke into a bank account with an AI-generated voice. So it's pretty much what it sounds like. This journalist created some fake audio of themselves saying certain things like "check my balance" and using their voice as a password, which apparently you can do, and was able to get into the account. And we saw something like this actually last year already, where someone, you know, actually stole some money with this kind of approach. And yeah, it's a good thing to be aware of, because also recently we saw some impressive progress in realistic audio generation, and that's going to have a major impact this year.

Yeah, it's like every couple of weeks, right? You have this new capability and someone somewhere in some like enterprise or some security organization has just had like a fundamental operating assumption wiped out just like that with the new system. So it's really interesting to see like how are banks going to respond? How is the security ecosystem going to respond to stuff like this?

Yeah, it's interesting because it's one of these things where we've been discussing it for years. And I think for a lot of people in the security community, it's been known that you could use AI for phishing and things like that. But now the tech is here, right? So it's time to... you can't just talk anymore. You've got to actually address it. Yeah.

Next up we have AI-human romances are flourishing, and this is just the beginning, a pretty interesting article from Time about how there's this app Replika, which launched in 2017. And it's basically a little chat app with a virtual character. And over time, it kind of evolved into this thing where you can have romantic relationships and even sexual relationships. You actually could pay for a $70 paid tier to unlock erotic conversation features. And now what just happened was they removed that option. The company removed the option for it to confess its love or, I don't know, be spicy. And now a lot of these users are angry that their bot has been kind of stripped away. And it's pretty, I don't know, surreal to see this.

Yeah, what it makes me think of is my favorite niche Silicon Valley saying, and I can't remember if Paul Graham said this or who, but it's something like, if you A/B test a website often enough, you will end up with a porn site.

And it's just this race to the bottom of the brainstem. And, you know, the moment you talk about, oh, well, you can have human-like chatbots and whatnot, I think a lot of people's first thoughts went the same way they did with the good people at Midjourney, who can make images out of text prompts. So, you know, your mind very quickly goes to these things, but it's also fascinating to see just how hooked people can get on these bots.

I saw this on Twitter, somebody posted like, you know, if you're wondering about what the future of AI might look like, you got to check out the Replika subreddit. And I checked it out and man, people were just furious. Like from their perspective, they just lost a loved one. That was really what these reactions were. They were raw. They were emotional. And yeah, I mean, you can't judge them. I mean, it seems like that's kind of where things might end up going in the long run.

And this is not even ChatGPT, right? The AI here is relatively primitive. It's not super good at talking and does kind of silly nonsense all the time and everything.

Yeah, but it is kind of crazy, and there have been stories in the past, you know, in Japan or China, about people falling in love with their AIs. But I think now that we have ChatGPT, it's going to be not very uncommon, I would imagine. Yeah. Yeah. Like, you know, internet dating was weird at first. Now you're going to be dating the internet. Yeah. Yeah.

And last up, we have the story of machine learning is helping police work out what people on the run now look like. So this is about how basically, instead of using artists to sketch what aged-up people might look like, now you can use AI to estimate, you know, the wrinkles and so on. And it's actually being used in practice. There's kind of an interesting example about a member of a Sicilian mafia who has been on the run since 1993. And so, yeah, another case where AI is everywhere. It's being used for everything. Get used to it.

Yeah, really. And I'm looking forward to the lawsuits that come when these images are slightly wrong and the wrong person gets arrested because of the aged up image or something. But who knows? I mean, these are probably going to be a lot more accurate in some cases than sketch artists and things like that. Mm-hmm.

All right. And then let's just wrap up with one more thing. Usually we have a couple of art and fun stuff stories. We're going to just touch on one. I wanted to highlight that Last Week Tonight with John Oliver had a segment on AI this last week, and a lot of people enjoyed it. And as usual, I guess, this was quite good. There was discussion of ChatGPT, of Midjourney, of bias, of ethics, regulations, newsletters.

Not existential risk, but a lot of stuff that is pretty relevant. So if you haven't seen the John Oliver segment, you can find it on YouTube.

It's funny how mainstream things are getting. I don't know if you have this feeling, but I used to have this sense that I was in this very tight community that would talk about AGI and take it seriously and all this stuff and would be weirdos to the outside world. I wouldn't tell people what I was working on and so on. And now it's just kind of like everyone's got a take on AGI, and rightly so. But it's funny to see it proliferate like that.

It is funny. I mean, everyone knows what GPT is. It's crazy. GPT was like a paper. You know, it was like the research community cared about GPT-2, and people were like, oh no, GPT-2 can be used for misinformation and things like that. And now talk to anybody, they know what GPT is and sort of what it does. So it's kind of crazy. A lot more weirdness waiting for us.

There sure is. And with that, we're going to wrap up. Thank you so much for listening to this week's episode of Last Week in AI. Share it with friends. Rate us on Apple Podcasts. We actually look at your ratings, so we'd appreciate it. And be sure to tune in. We'll keep going next week.