The Race for AI—Search, National Infrastructure, & On-Device AI

a16z Podcast

Deep Dive

Why is Google's search dominance under threat in 2025?

Google's search dominance is under threat due to the rise of AI-native tools like ChatGPT and Perplexity, which offer more personalized, conversational, and ad-free experiences. Additionally, Google faces legal pressure from antitrust rulings and competition from alternative search providers empowered by phone manufacturers like Apple. AI-driven search engines like Perplexity are growing 25% month-over-month, and 60% of U.S. consumers used chatbots for purchase decisions in the last 30 days.

What are the key differences between traditional search engines and AI-native search tools?

AI-native search tools like ChatGPT and Perplexity provide immediate answers instead of lists of links, offer personalized and conversational interactions, handle complex queries better (average query length is 10-11 words compared to Google's 2-3 keywords), and are less cluttered with ads. These tools also encourage follow-up questions, making the search experience more interactive and engaging.

Why are smaller, on-device AI models becoming more popular?

Smaller, on-device AI models are gaining popularity due to their ability to deliver real-time, performant experiences without relying on cloud infrastructure. They enhance user privacy by processing data locally, reduce latency for applications like voice agents and AR experiences, and leverage the increasing compute power of modern smartphones, which are now as powerful as computers from 10-20 years ago.

How are nation states approaching AI infrastructure independence?

Nation states are prioritizing AI infrastructure independence by deciding whether to build or buy AI capabilities. Smaller countries often enter joint ventures with hypercenters (countries with advanced AI infrastructure) to align with shared values and access compute resources. Key ingredients for AI infrastructure include compute capacity, abundant energy, high-quality data, and forward-thinking regulation. Countries like the U.S. and China are leading in building sovereign AI, while others focus on leveraging their strengths, such as energy reserves, to attract AI development.

What challenges does the U.S. face in maintaining its AI leadership?

The U.S. faces challenges in maintaining AI leadership due to fragmented data regulation, with over 700 state-level AI-specific laws in 2024 alone, many of which are poorly implemented. Additionally, the U.S. has lagged in nuclear energy adoption, which is critical for powering data centers, and lacks a unified federal framework for AI data regulation. This regulatory uncertainty hampers innovation and risks driving AI developers to other countries with clearer guidelines.

What role do private companies play in national AI infrastructure?

Private companies play a significant role in national AI infrastructure by driving innovation and providing critical technologies. In the U.S., companies like NVIDIA and OpenAI lead in compute and AI model development, while in China, private companies are legally required to support national intelligence efforts. The balance between government oversight and private sector freedom varies by country, but unlocking private sector talent with minimal bureaucratic hurdles is key to maintaining AI leadership.

What are the potential applications of on-device AI models?

On-device AI models can power real-time voice agents, enhance AR experiences, and improve user interactions with applications like Uber, Instacart, and social media platforms. They enable faster, more private processing of tasks like voice conversations, image filters, and real-time pricing, while also supporting creative applications like virtual interior design and interactive 3D experiences.

How does the economics of on-device AI differ from cloud-based AI?

On-device AI reduces reliance on cloud infrastructure, potentially lowering inference costs and improving user experience through lower latency. However, the economics are not straightforward, as cloud inference prices have been dropping significantly. On-device models require careful integration with hardware updates, which can impact developer efficiency and iteration speed. The shift to on-device AI may benefit hardware manufacturers and chip developers like NVIDIA in the long run.

Transcript

In 2024 alone, I think there were more than 700 pieces of state-level legislation that were AI-specific. The average query on Perplexity is 10 to 11 words. The average search on Google is two to three keywords. You've had an enormous amount of NVIDIA's purchasing orders come from the balance sheet of governments. You can actually reimagine the AR experience. If you're a founder like that who has the guts, then your impact on humanity ends up being quite generational.

Here we are again, inching even closer to the end of 2024. And as we near 2025, here are a few dates to give you some perspective. We've had 24 incredible years of Wikipedia, 18 years of the iPhone, and 16 years since the Bitcoin white paper release.

So as we look to 2025 and the speed of innovation is only increasing, we continue our coverage of A16Z's big ideas, together with the dozens of partners who are meeting daily with the people building our future.

Last year, we predicted... A new age of maritime exploration. Programming medicine's final frontier. AI-first games that never end. Democratizing miracle drugs. On deck this year, closing the hardware-software chasm. Game tech powers tomorrow's businesses. Super staffing for healthcare. And throughout our four-part series, you'll hear from all over A16Z, including American Dynamism, healthcare, fintech, games, and more.

However, if you'd like to see the full list of 50 big ideas, head on over to a16z.com/bigideas. And of course, if you missed it, check out part one, all about the intersection of hardware and software.

As a reminder, the content here is for informational purposes only, should not be taken as legal, business, tax, or investment advice, or be used to evaluate any investment or security, and is not directed at any investors or potential investors in any A16Z fund. Please note that A16Z and its affiliates may also maintain investments in the companies discussed in this podcast. For more details, including a link to our investments, please see a16z.com/disclosures.

Today in part two, we'll be talking about the topic of the day, the month, and quite frankly, the year, artificial intelligence. There's certainly AI, AI, AI. And the race is on, whether it's across companies like Google and the disruptors chasing away 10 blue links, or sovereign countries trying to capitalize on the next frontier, or even device companies figuring out their role as AI meets the edge.

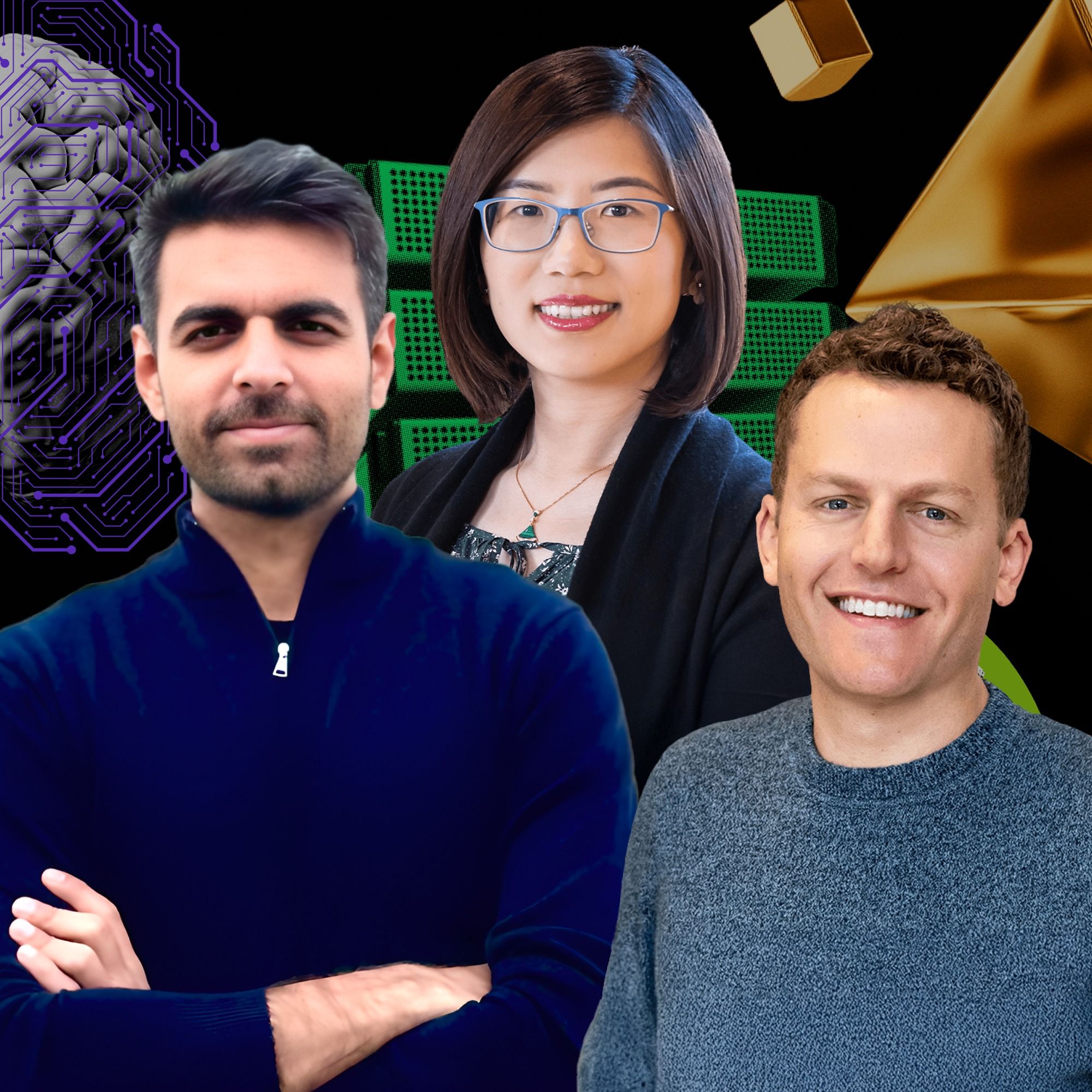

That is all on deck today. There's a bit of an innovator's dilemma here, and I'm excited to watch it play out. That was... Alex Immerman, and I'm a partner here on the Growth Fund. Here's his big idea. The search monopoly ends in 2025. Google controls 90% of US search, but its grip is slipping.

Its recent U.S. antitrust ruling encourages Apple and other phone manufacturers to empower alternative search providers. More than just legal pressure, Gen AI is coming for search. ChatGPT has 250 million weekly active users.

Answer engine Perplexity is gaining share, growing 25% month on month, and changing the search engagement form. Their queries average 10 words, three times longer than traditional search, and nearly half lead to follow-up questions.

Claude, Grok, Meta.ai, Poe, and other chatbots are also carving off portions of search. 60% of U.S. consumers used a chatbot to research or decide on a purchase in the last 30 days. For deep work, professionals are leveraging domain-specific providers like Causaly, Consensus, Harvey, and Hebbia.

Ads and links historically aligned with Google's mission: organize the world's information and make it universally accessible and useful. But Google has become so cluttered and gamed that users need to dig through the results. Users want answers and depth. Google itself can offer its own AI results, but at the cost of short-term profits.

Google as a verb is under siege. The race is on for its replacement. Maybe start by setting the stage. So how big is the search market today? The search market is enormous. Anyone listening to this uses search.

Virtually anyone with the internet uses search. But to put some numbers around it, Google that I just mentioned, they're the biggest game in town. They're approaching 200 billion of revenue annually. They're still growing double digits, highly profitable. Microsoft Bing, which has been the number two player for a long time, mid single digit market share, so pretty small. They have 12 billion of revenue. This is a massive, massive market, one of the largest out there.

And it does feel like there are forces reshaping this industry. So tell me about those. So there's certainly AI, AI, AI. But before we jump into that, we can level set with the legal pressure that's mounting on Google. So earlier this year, Google was declared a monopoly. The court ruled that their paying billions of dollars to phone manufacturers, in the case of Apple, tens of billions of dollars, is monopolistic, it's anti-competitive, and it's preventing their competitors from gaining share in the marketplace.

They are the default search engine on all these phone manufacturers, and it basically makes it impossible for any of the others to gain share. So the exciting technological change, of course, is around AI. The Gen AI search providers are fantastic. And the market has been dominated by Google for close to 25 years at this point.

And as a monopoly, they're not really innovating. They have no incentive to until now. If you think about the Google experience as it is today, it's just a long list of links. And the first few are sponsored. They're ads sponsored.

And I, as a user, when I make a query on Google, I then need to make the decision. I have to sift through the information on that site and find the answer that I'm looking for. That's actually a pretty long process. Instead, with Gen AI, I can just get the answer. So on ChatGPT, Perplexity, Claude, or when I'm chatting with my AI friends on Character AI or on Poe, I get an answer immediately. And that's just a much, much better experience.

I hear tons of people saying they're trying these new tools, but maybe you can give us a sense. Is this shift really stark? Are people really moving over? People are definitely moving over and I'd say there's four main reasons. One is the one we just talked about, the shift from links to answers.

A second one would be how they are personalized, how the answers feel interactive, how they are conversational. The average query on one of these new services has a follow-up question. So it's not just about that initial engagement. It's about the ongoing conversation.

The third difference is about how these new services engage with complex queries. So the average query on Perplexity is 10 to 11 words. The average search on Google is two to three keywords. As you can imagine, their ability to leverage that information and get you what you want is much higher.

On Google, you're going to get a list of links. One of those links might have one side of the debate. Another link may have the other side. You may never make it to that second side. On Perplexity, on ChatGPT, they're going to synthesize both sides. They're going to give me both perspectives.

And then the fourth is, they're just not cluttered with ads. That may change over time, but putting it all together, it should be no surprise that these AI-native services are gaining share. 10% of consumers now refer to ChatGPT as their search engine of choice. Perplexity queries are growing 25% to 30% month over month. And I saw a survey last week that 60% of consumers for purchase decisions in the last 30 days used a chatbot.

All of these services are growing massively. So if we look at some of the tools that exist today that are coming for that market share, you take a Perplexity or you take a ChatGPT, those are also broad-based search engines or chatbots. But there are these other players, you mentioned Consensus or Hebbia, for example, that are verticalized. Does it surprise you that the last wave of search engines weren't verticalized?

It does not surprise me that the last wave or the next wave will result in a winner-take-most dynamic. It's a pretty monopolistic market.

Search is very much a distribution game. Google has an incredible brand. They have incredible direct traffic. They are the default search engine on most browsers. They are the Kleenex, the Band-Aid of online search. It's important to note that search engines definitely benefit from network effects.

More users coming to Google provides more data around preferences for Google. That means the next search has more relevance and brings more users. It also means more users means more advertisers, more profit dollars that you can invest back in the service.

Or into ensuring that Apple puts them as the default provider. Absolutely. And so as we think about this next AI wave, it sounds like you think maybe a similar dynamic might be at play. Or how do you think about maybe these smaller players actually finding a wedge or differentiating?

One framework for thinking about how search could fragment or verticalize is just looking at why vertical software beats horizontal software in some cases. And it's typically that the vertical requires a specific user interface, proprietary data, features, workflows, compliance, et cetera. And that's why Veeva in life sciences and pharma can beat Salesforce in horizontal CRM.

For the average query on ChatGPT, Perplexity, or Google, they're pretty good. You don't need a vertical-specific application. But for deep domain research, I can imagine a world in which standalone apps thrive. In the case of our portfolio company, Hebbia, they have a unique application interface. It looks like a spreadsheet, which is native to financial services and their customers. It brings in public filings, earnings transcripts, but also private data. It can bring in research. It can bring in survey results.

You can query, and then the output can also be specific to that industry. Not just look like a spreadsheet, but populate a meeting agenda so you can go in immediately prepared. And so when I think about vertical search or vertical apps, it's not just about the search, it's about everything around the search.

That's a good distinction. And as we think about how this maybe continues, a progression of this industry, if we go back to the last wave of search, we did see a bunch of search engines get traction to start, maybe AltaVista or Ask Jeeves. We all pick on Ask Jeeves. You know, all the Gen Zers will not know what the hell any of that is. But they had some traction and then Google exploded and took over. Do you expect that same kind of consolidation or is this time different?

I think consolidation should be expected in general purpose search because of distribution, because of network effects.

Google won the last time around in part because of their PageRank algorithm. It produced superior results. It had a minimalist UI. It was really simple. Ask Jeeves, AltaVista, they had tons of ads. It was cluttered, links on the homepage. No one wanted to use it. The irony is that today, what we're all complaining about with Google is that their pages are cluttered with ads on the results, and that creates the opportunity for these new search engines.

I would expect consolidation again. And I think something else that's interesting is what you're pointing at with some of these verticalized solutions is that it's not just for the everyday consumer, but also for the lawyer or for the academic researcher. Do you think that is also part of the fragmentation? Do you expect that to continue?

Search historically has been thought of as a consumer product, and for good reason. I make a lot of searches that are related to my personal life, but I use, as do all the professionals you named, search at work. I probably make more Google searches at work than I do around personal matters.

But there is a category of enterprise search, a market that has existed. And you can think of that as querying Box, Dropbox, Salesforce all in one. But I think those two worlds are going to blend together. Consumer search should not just be limited to what's on the web, and enterprise search should not be limited to just proprietary data. It should have both. And a lot of these AI-native services are working on that.

Yeah, that's a great point. And as we think about those two models maybe blending together, we think of consumer search for sure. It's always been an ad-based model, or at least today. Google is this massive economic engine, but no one's paying a subscription for that. The new entrants do seem to have a more subscription-based model. Is this a temporary thing, or how do you see those two dynamics playing?

So the subscriptions could stick around, but I think this is going to continue to be a digital advertising-focused market. And I think digital advertising is going to grow because of these AI services. So these AI services today, they don't have ads. I imagine that's going to change.

It's been in the news that Perplexity is already talking to advertisers. They have a high-income, highly educated user base that should be attractive to these advertisers.

But as we discussed earlier, the queries on these services, they're longer, they're more complex, they're more detailed, they're more personalized. And because of that, there's greater intent, which should be more helpful to advertisers in producing the best results on their end and creating an even larger market. But in the meantime, these subscription business models, they make a lot of sense. They bootstrap the business, they cover costs. And from a personal perspective, I'm very happy to pay $20 a month for ChatGPT, Perplexity, and Poe. I mean, they provide so much value.

A lot more than $20 a month. Yes, yes. So obviously this was your 2025 big idea, and I know this is going to be a many-year, maybe even decade-long progression, but what are you paying attention to in the next year? What opportunities are still on deck?

As I look at 2025, Google may be on the decline, but I don't expect them to go down without a fight. Google and Meta, I think they can be big players. Google AI Overviews already has a billion monthly active users. Meta is not far behind. They have 500 million monthly active users. Again, this shows the power of distribution for search.

And maybe to that end, Apple, if you could call them a dark horse, is a dark horse. They are not investing the CapEx like Meta and Google and Microsoft and Amazon, but they control a central node to the consumer. And if they wanted to build a search application, the next day they could have a billion users.

If you're like me, you're probably always looking for ways to improve your morning routine, whether that's waking up early, which is certainly a battle, eating a good breakfast, or listening to a quick and informative podcast, just like the Morning Brew Daily. Every weekday on the Morning Brew Daily, Neal Freyman and Toby Howell break down the biggest news in business, the economy, and just about everything else, all in 30 minutes or less. So why not add it to your routine and listening queue?

Follow Morning Brew Daily on Apple Podcasts, Spotify, or wherever you get your podcasts. We just heard how quintessential winning AI is to multi-trillion dollar companies like Google and Meta. What about nation states? In the race for AI dominance, compute has become critical national infrastructure, but not every country is equipped to compete in that race.

That was... Anjney Midha. I'm a general partner here at A16Z where I focus on AI infrastructure. And here's his big idea. My big idea is infrastructure independence. And it's the idea that a lot of countries and regions are starting to realize that modern AI, deep learning based AI, generative models, are a form of what have been called general purpose technologies. In the history of humanity, we've only had maybe 20 or 22 or so general purpose technologies. And these are usually types of technologies like electricity, the printing press, that have very broad-based applications in society. They end up being largely digital, horizontal economic multipliers and progress multipliers across a whole set of pillars and domains in society.

There are usually two moments in the adoption of a general purpose technology. First, countries, nation states start asking, are we going to welcome this technology or are we going to be hostile to its development? Okay. And that's the first step that becomes pretty important in a country's progression or in a nation state's progression or region's progression: do we even want to adopt it? Do we allow this in? Do we allow this? Regardless of whether we own it. Do we embrace it or not? And then the second is, do we build or buy? Right. Which is, can we trust somebody else to provide it for us? We're well past the stage of, do we embrace it or not?

We're already well into billions of people around the world now having already embraced it. So the governments don't really have a choice, so to speak. So in a sense, AI has already percolated throughout society at one of the fastest diffusion rates of any general purpose technology because it's piggybacked off of years and years of digital infrastructure. And so now the question everybody's asking is, do we build or buy? Probably the single largest purchasing decision that's going to happen in the next 24 months is, do nation states build or buy?

Do they build or buy? Yeah. I love the parallel of companies because there are many companies that do choose to build, but also companies that choose to rent or buy. Right. And so as you think about that, there are large nations around the world like the United States, which are clearly building. Right. But talk about the argument for smaller nations or the 190 plus that should be thinking about buying.

The good news here is that we've got hundreds of years of human history to look at for clues about what happens next. If you're a small country and you were in the early 1900s, you were watching the modern electrification of the developed world, the United States or Europe. If you chart what happened with many of those countries, many of them decided to actually enter into what were called joint venture agreements.

It starts with a joint venture with a country that's at the frontier. These countries at the frontier of AI are what I call hypercenters. These are countries that have the ability to develop, train, build, and host their own frontier models. I call them hypercenters mostly as an homage to the word hyperscaler, which is that there have been a handful of companies that have had the compute and the talent to actually build frontier AI. And now I think what we're seeing is a shift from just those companies driving a bunch of frontier AI to countries and regions driving it.

And so if you're a small country and you're going, well, we certainly believe that it's important to have our own AI infrastructure. We want to be independent, but we don't have all the compute required to train these models or we don't have all the talent locally. Then what you enter into is a joint venture with a country or an overseas partner that matches your values. And this is the really important thing about AI and AI models and how they're different from infrastructure like electricity: there's a fundamental encoding of human values in AI models because they're trained on data. Yeah.

And the data has these local norms and cultural values embedded. And so if you happen to train a model on a bunch of internet data collected in the US, the models are just generally... American. American. They're encoded with that. Yeah. And if you've trained the models on data in France, they actually subtly have a bunch of different values encoded in the models that reflect those cultural norms.

And so I think step number one, if you're a small country, is actually being crystal clear about which value systems you align with most out of the hypercenters. Now, it's not lost on people that the way the internet worked out was there essentially ended up being two internets, right? The Chinese internet and the rest of the world. AI may not end up looking that different.

And if you're a small country, what you really have to figure out is whose values align more with yours. A good historical precedent to look at here is the technology of money, right? Money is a pretty general purpose technology. And what happened in the early 1900s with the modernization of finance is a number of countries started to ask the same question: do we build or buy our own currency? Do we rely on the dollar? Right. Or do we have our own currency? And that led to the modern day currency regime where the dollar is a single global reserve currency. And that happened through a bunch of allied cooperation where a number of countries realized they did not have the local resources required to hold the peg of gold, right, to the dollar.

And so I think what we're going to end up seeing is the emergence of something very similar to what happened with currency flows, where you have a couple of large countries that control their own sovereign currencies, right? You have the US, you have China, you have India. And then you have a number of smaller countries that decided they wanted to be flow points.

So you have Singapore and Ireland and you have Luxembourg and Zurich that become massive global leaders in modern finance because they decide they want to ally with one of those power centers. Yeah. Right?

So if you think about it in the AI world, let's call regions at the frontier hypercenters. And then we have compute deserts. And these are places that have literally no install base of compute capacity to even be relevant. All the smaller folks have to figure out which of the hypercenters they want to align with. Right. And how do you become a modern day Singapore, Ireland, Luxembourg, et cetera, for the world of AI infrastructure?

And so it starts with deciding whether you want to be a compute desert or not. And if you're not, and you're going to actually embrace AI infrastructure as a government, then I think you've got to figure out which hypercenter you want to align with most. And then it becomes actually quite easy to reason about how to be a valuable ally.

That's such a good parallel because a lot of people think about resources in terms of the farmland that you have, the people who are working in that economy. But what you're pointing out is that countries for a long time have offered value or offered a resource in other ways. And as we think about AI, there's a few things that you've pointed out that countries can invest in, whether it's the compute capacity that they have, the energy resources to power AI, and forward-thinking policy. So maybe we can break down each of those. How do you think about each of those blocks and how countries should be maybe maneuvering or investing in those things?

The good news is that there's only three or four ingredients here that really matter. The first is compute, which we've talked about. The second is abundant and low-cost energy, which powers the data centers. The third is data, just the availability of really high quality tokens for these models to learn on. And the fourth is regulation. Yeah. So that's the good news.

Now, the bad news is that the world is pretty unevenly split up, right? Some countries just have dramatically more compute than others. Yeah. Others have dramatically more energy than others because of their natural reserves. Yeah. So if you are in the Middle East, you may not have massive data centers yet, but what you do have is vast reserves of oil. And how you translate that into becoming a hypercenter is quite simple. It's the law of comparative advantage, right? You've got energy. You should use that to attract the world's best teams and companies and foundation model labs and so on by trading what you have with what they have.

And so I'm quite bullish on allied ties between countries that recognize what their strengths are and then partner with other countries to fill that gap. And by countries, I mean private companies too from other countries. One of the things we may end up seeing in the coming years is jointly trained models between countries. Basically, I think for most countries, it's impossible to have total infrastructure independence at all parts of the stack.

What is much more feasible is to be great at one part of the stack and then collaborate with another sovereign or another country or region to achieve joint independence from a value system that you don't subscribe to, like the CCP. And so I actually think what's more important is for countries, regions, and frankly, some of the world's largest companies that operate at nation scale, to assess which parts of the stack are critical to them that they must have independence from. And the answer there is a function of what asset they already have, right? It's their strengths. And then if they've got a critical gap to fill, it's to go and buy that.

Now, in the long term, you might be able to build things out. But with infrastructure, especially of this kind, it can often take years, if not a decade. So as an example, lower down in the stack from the model layer, you have the chip layer. And even below that, you have the lithography layer, right? There's a company in Holland called ASML that builds literally the world's most important machines. How many machines do they make per year? It's some very small number. Each machine costs about $200 million. And I think they did $23 billion in revenue this year, 40% of which came, by the way, from China, because China was stockpiling ASML machines before a bunch of export restrictions kicked in.

Yeah, and they're the only company that can actually make these EUV lithography machines. They're the only company that can do EUV lithography of this precision. Now, is it feasible for the US to say we're going to build our own ASML, like, tomorrow? No. I mean, it's just going to take 10 plus years, right? EUV lithography just takes a really long time. On the other hand, is it feasible for a smaller country to say we're going to train our own local models at the frontier? That's a little bit easier to do over a timescale of quarters if you've got a leading research team. If. If. And that's a big if, right? There's only a handful really of research teams globally that are capable of this.

And so to answer your question, yes, I don't think sovereign AI or infrastructure independence means you have 100% ownership over every part of the stack. That's infeasible over the short term. It means that you don't rely on somebody for a critical part that you don't trust. Right.

Can we talk about private companies for a second? Because you've brought them up a few times. How do you think about that dynamic where as a nation state you're saying, okay, you need this sovereignty. Right. But at the same time, can you rely on that sovereignty through the companies that exist within your nation? Just using America as an example. Right. Does the government really need to be involved or can they just let Anthropic or OpenAI kind of command that part of the stack? Or how do you think about the difference between government versus private enterprise?

The line is pretty stark in a few countries and is more blurry in others.

So in China, the line is very clear. There's a law called the PRC 2017 National Intelligence Law that says Chinese individuals and entities are required to support PRC national intelligence work by law, which means if there's any technology that a PRC company has access to, they are automatically obliged to make that available to the government. Mm-hmm.

And that's not the case in the United States. There are some covered types of technology, like dual-use technology, like classified defense technology, where if you are developing it, particularly if you're funded under a defense program, then you are required to make that available to the government because the government's paying for the development of that technology. But by and large, the private sector in the United States and most other allied countries is by default protected from having to make its technology available to the government. That's not the case under the CCP. So,

I think the question for most countries becomes: where on that spectrum do you want to exist? There's a general framework through which most countries'

infrastructure is categorized. Every country approaches it slightly differently, but the G5, or the Five Eyes, the US, Canada, UK, Australia, New Zealand, we generally have a joint approach or framework to categorizing this infrastructure. And by and large, AI models have not been categorized as being dual use or protected under national security. The short answer is the history of technology has largely shown that if you'd like to win,

then unlocking the best talent of a country with as few bureaucratic slowdowns as possible usually ends up winning. Well, if we think about wanting to keep America at the frontier and we think about the different layers or ingredients that we talked about earlier, are there any high-risk areas where you think we're falling behind?

I think we go back to the four ingredients of the frontier of AI we talked about earlier, which are compute, data, energy, and laws. Now on the compute front, I think the private market in the United States is doing a pretty good job. It's pretty responsive to market demand. And I think it's no coincidence that the largest infrastructure businesses in the United States are chip companies, right,

and computing companies, because I think we've generally done a pretty good job of letting the market feed that demand. I think on the data side, things are extraordinarily tough because, one, the Biden executive order last year was a starting gun that said, "Oh, AI is important. Please do something about it," and left it to the states to figure it out. And the states have all taken a complete patchwork of approaches to data regulation.

In 2024 alone, I think there were more than 700 pieces of state-level legislation that were AI-specific. Wow. And a bunch of those laws, if you look at it, are really well-intentioned but atrociously implemented ideas for data regulation. Right. And impossible to adhere to. Basically impossible to adhere to. And so I think one area where we're just handicapping ourselves is that there's no unified framework in the United States at the federal level yet for data, especially around training.

And I think we needed that yesterday. Overseas, in a number of countries where rule of law, especially on copyright and IP and so on, is just less stringent, those labs are happy to just race ahead. Whereas our companies here are trying to figure out what they should even comply with.

And that gray area actually hurts you more than a laissez-faire approach. Right. I think our companies would be totally fine. The best founders at the frontier of AI would be fine in the United States being compliant. They just want to be told what to comply with. Not across 50 different states with different regulations that are changing and unclear, and in some cases, impossible. Right. And there's also the fundamental scientific problem that

there are just very real data walls that these models run into. And I do think one of the things that hurts frontier research in the United States and allied countries is a lack of government support in collaborating across borders to make more data available to allied regions. So that's number two. On energy, I think we've obviously hamstrung ourselves in the United States with nuclear. France's embrace of nuclear 20 years ago, for example, has positioned it to have extraordinarily efficient data centers today.

Whereas in the United States, I think we've basically shot ourselves in the foot around that. And then lastly, I think around inference regulation, what we're not doing enough of is making it clear who the liability rests on.

I've seen a number of proposals ahead of legislative sessions next year that want to hold model developers liable for the outputs of the inference, even if the misuse is being done by somebody else. And what does that do? That drives those very important developers elsewhere. It essentially forces most startups to lose much-needed ground to big tech companies, and that entrenches incumbents more.

So as we think about 2025, whether it's in the U.S. or elsewhere, because I mean, this idea really is truly global. What are you looking out for or what should maybe, let's say, a legislator or let's say the head of a nation, what should they be thinking about? And what are you looking out for in some of those decisions? Are you looking for countries that are buying GPUs or building out new energy centers? What are you paying attention to? The leading indicator is definitely compute. If you think about the AI supply chain, the first mile starts at the data center.

That's the new atomic unit of sovereignty, I would say, which is a new thing. We've never actually had nation states think about an AI data center as an atomic unit, as a thing that countries should be purchasing. And I think about 24 months ago, we started seeing nations reason about that first mile as being important. So you've had an enormous amount of NVIDIA's purchase orders come from the balance sheets of governments,

just unprecedented demand they've been seeing from nation states realizing that they want to be hypercenters. Yeah. And that starts with them placing orders 12 to 36 months in advance to take delivery of GPUs because if you don't get in front of that line, it's over. You're getting it after everybody else. So that was step one. The second thing I look for is founders who are deeply technical, who often come from deep research backgrounds, and scientists who've led frontier model development already,

often inside of large hyperscaler labs. So an example is Arthur Mensch, who started Mistral. He worked at DeepMind. Or Guillaume Lample, who led the initial Llama family at Meta. These are founders who are deeply mission-led and believe that they can help solve a bunch of these infrastructure problems for the world's largest governments. So there's a new class of founder who's both primarily technical and has their training in academia, but is motivated to solve all the really hard problems that come with having to deliver, to solve a bunch of these

infrastructure problems for really large nation states and regions. But I think if you're a founder like that who has the guts, then your impact on humanity ends up being quite generational.

But now, let us convince you that the future of AI may not be so straightforward. Instead of models running in the cloud, perhaps we're bound for a future where many more applications will run on-device. I expect smaller on-device AI models to dominate in terms of volume and usage. This trend will be driven by use cases as well as economic, practical, and privacy considerations. That was Jennifer Li. I'm a general partner on the infrastructure team. Here's her big idea.

My big idea is on-device and smaller generative AI models will become more popular in the next year. If you're a frequent user of Uber, Instacart,

Lyft, or Airbnb applications, I'm sure there are many, many machine learning models already running on your device. When you load up an Uber screen, it's very easily 100 models that are coordinating routes and giving you a real-time price. What I'm referring to more is that the generative models that create image, voice, and video will become more prevalent in the same way, running on device and within your applications,

similar to these other traditional machine learning models. The models that we've seen in the last few years do take a lot of compute. And so can you square that with how much compute we can get from something like a smartphone, and also whether these models are getting smaller, and how this all comes together? Yeah. First, never underestimate the compute power on a smartphone. It's probably as

powerful as a computer 10 or 20 years ago. That's thanks to Moore's law. At the same time, especially for smaller models of 2 billion or 8 billion parameters, that's enough compute for them to run on device. And they can already generate and create a very robust experience, be it text or image or audio. And some of these models, if they are diffusion models, are intrinsically smaller than large text models and still very capable.

And there's another new set of tooling and technology developed around distillation: if you have a very powerful large model, it can be distilled to a smaller parameter size model and still maintain a lot of the capabilities that the large model contains. So both on the infrastructure side and also on the device compute power side, it's a perfect setup for the smaller models to be more popular.

Totally. So I'm hearing a few things. I'm hearing that the smartphones are becoming more powerful. Some of these models are becoming more efficient. But that kind of brings us to the question of why. So why would we want to run these models on device? What are the advantages of that and also the disadvantages? As consumers and day-to-day users, we're already spoiled by real-time and very

performant applications. If you're talking to a chatbot, if you're talking to a conversational AI, if you're adding filters to your video and images on Instagram or TikTok, you don't want to wait for multiple seconds to load a new filter. You don't want to wait for multiple seconds for the chatbot to respond to you. Those are many real use cases that can really delight users and improve the user experience.

There's also optimization for compute. There are a lot of harder, more complex questions or video processing tasks that require going into the cloud. But largely, if it's, again, changing user experiences and improving the visual and sound effects of things, it doesn't have to route through multiple servers over a network. So both from a user experience and efficiency perspective, it's a much better design to run some of the models on device.

And then the last part is just privacy. Users do care. If my meeting notes are taken locally, I'll probably use this meeting note taker much more often than if I know some of the data is being sent to a server and they're processing a lot of my private conversations. So it depends on the use case, again, for the application, but I think that also improves adoption.

Absolutely. And that has my wheels spinning for sure in terms of maybe this unlocks new applications. So on that note, you mentioned a few already, but where might we see applications pop up or where perhaps are we already seeing applications with these on-device models?

First to come to mind is real-time voice agents. It's a very popular topic and it's something I'm very excited about. We invested in and work very closely with this company called ElevenLabs, and one of the areas they're also spending a lot of effort on is not just having the human-like synthetic voice, but being able to handle conversations fluently with end users, to get the latency down, and also to think about what type of real-time exchanges you want to have

with your AI companion, your support agents, or any sort of life coach. I think we do need to think about the modality and the latency in a much more, I guess, improved fashion. So I won't be surprised if some of those inference workloads are running locally

coming into the next 12 to 18 months. Absolutely. And as we think about how maybe these different models also interact with other parts of a smartphone, let's say the camera, do you expect this to also maybe change user behavior and what we can do?

100%. You can actually reimagine the AR experience: if I point a camera at this room and I want to see a new surface and wallpaper and furniture, the technology is already there. We can actually leverage generative AI, the camera, and also prompting interaction to create new experiences already of how we interact with real physical life.

And that's where I also think a lot of on-device models will play a big role of how to interact with the 3D world, how to interact with the physical world, and not just using the camera for capture, but also using it as a projector. Definitely.

And let me ask you about economics then, because a lot of the models that exist today do rely on inference and sending that inference up to the cloud and that costs money. Do the economics change if you all of a sudden have these models running on device on the smartphone compute that already exists? Do the economics actually shift or can we come up with new ways of monetizing in this new world?

Yeah, it's a great question. And I honestly don't really have the answer because even for larger models, the inference price has been dropping really significantly. For the optimizations to be done, if it's a very compute-intensive workload, let's say using a computer or phone, I think we'll still have economic benefits. But I don't think there's a very direct answer that it's going to substantially reduce infrastructure costs for some of these applications.

But architecting and structuring the whole toolchain does change the economics of developer efficiency and iteration speed. There are pros and cons: when shipping in the cloud, you can launch more continuously, while on device has its own challenges because you'll have to ship the updates with the application, and that is what happens

with hardware. So there is that side of economics that I think will have impact from how teams are being structured in launching models in a hybrid mode. So I would encourage teams who are thinking of leveraging this technology, consider it more holistically. Super interesting. And as we think about that world, are there any players that you think really succeed here? Like in one sense, I could see maybe the phone manufacturers. I could also see maybe, you know, the manufacturers of wearables,

being able to introduce all kinds of new applications. Think of wearing an Apple Watch, Fitbit, Whoop, things like that. Is it Nvidia that benefits in some way? Who do you think actually benefits from this idea of the models becoming more efficient?

and these on-device models becoming a thing? Right now, I've seen more interest and enthusiasm from the hardware development side, whether it's chips or the phone makers. I do think there's also a lot of interest from the model developers as well in just proliferating the model adoption across different setups and devices. But I think over the long run, it's probably going to impact the whole supply chain. We talked about some of these macro trends throughout.

How do you specifically see those trends shaping up in 2025? And is there anything in particular that you're putting your eye toward? This will sound more like a consumer investor. I've been, like, a hardcore infra investor, but I am very excited about mixed reality, where generative models, 3D models, and video models really, again, make the reality of what we're seeing today, through the camera lens and through the microphones, a much more creative

world, even when sitting at home or when going on a ride. That's the type of experience I'm very much looking forward to. I think the foundation model technology is pretty mature. The infrastructure is getting ready. So I'm personally very excited about that sort of new consumer experience.

All right. I hope these big ideas got you geared up and ready for 2025. Stay tuned for parts three and four. And again, if you'd like to see the full list of 50 big ideas, head on over to a16z.com/bigideas. It's time to build.