In the Arena: How LMSys changed LLM Benchmarking Forever

Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0

Chapters

- Chatbot Arena was inspired by Stanford's Alpaca project.

- The project aimed to address the challenge of evaluating open-source chat models.

- Initial success came from offering free LLM inference and a side-by-side UI for model comparison.

Transcript

Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO in Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Hey, and today we are very happy and excited to welcome Anastasios and Wei-Lin from LMSys. Welcome, guys.

Hey, Anastasios, I actually saw you, I think, at last year's NeurIPS. You were presenting a paper which I didn't really understand, but it was some theory paper about how your method was dominating over other sort of search methods. I don't remember what it was, but I remember that you were very confident.

Oh, wow, you remember? We never actually connected. Yes, that's definitely true. Nice to see you again.

Yeah. I was frantically looking for the name of your paper, and I couldn't find it. Basically, I had to cut it because I didn't understand it.

Is this Conformal PID Control? Man, blast from the past.

It's always interesting how these academic conferences are sort of six months behind what, you know, people are actually doing. But Conformal PID Control, I would recommend people check it out. I have the recording, and I just never published it because I was like, I don't understand this enough to explain it.

Elo scores, though. Elo scores are very easy to understand. You guys are responsible for the biggest revolution in language model benchmarking in the last few years. Maybe you guys want to introduce yourselves and tell a little bit of the brief history of LMSys.

I'm Wei-Lin. I'm a fifth-year PhD student at UC Berkeley, working on Chatbot Arena these days, doing crowdsourced AI benchmarking.

I'm Anastasios. I'm a sixth-year PhD student here at Berkeley. I did most of my PhD on theoretical statistics and sort of foundations of model evaluation and testing, and now I'm working 150 percent on this Chatbot Arena stuff. It's great.

And what was the origin of it? How did you come up with the idea? How did you get people to buy in? And then maybe, what were one or two of the pivotal moments early on?

Chatbot Arena was started last year in April, around then. Before that, we were basically experimenting in the lab with how to fine-tune an open-source chatbot based on the Llama 1 model that Meta had released at that time.

Llama 1 was a base model, and people didn't really know how to fine-tune it, so we were doing some exploration. We were inspired by Stanford's Alpaca.

So we basically grabbed a dataset from the internet called ShareGPT, which is dialogue data between users and ChatGPT, and it turned out to be pretty high-quality data. So we fine-tuned on it and released the model, called Vicuna, and people were very excited about it because it demonstrated that an open-weight model can reach conversational capability similar to ChatGPT. We released the model along with a demo website for it, which people were also excited about.

But during development, the biggest challenge for us at the time was: how do we even evaluate this? How do we even argue that the model we trained is better than others? And what was the gap between this open model and the proprietary offerings at the time, like GPT-4 and Claude 1? What was the difference between them?

And then after that, every week there was a new model being fine-tuned and released, and that's still true now, right? And we had that demo website for Vicuna.

And then we thought, okay, maybe we can add a few more open models, and API models as well. And then we quickly realized that people need a tool to compare between models. So we implemented a side-by-side UI on the website so that people could choose models and compare them.

And we realized that maybe we could do something like a battle on top of LLMs: just anonymize the identities and let people vote on which one is better. So the community decides which one is better, not us; it's not us arguing that our model is better. And it turned out people were very excited about this idea. And then we tweeted.
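A minimal sketch of how an anonymized battle might be recorded, to make the mechanics above concrete. It assumes uniform random pairing and a three-way vote ("A", "B", or "tie"); the `Battle` record, the helper functions, and the model names are hypothetical illustrations, not Chatbot Arena's actual code.

```python
import random
from dataclasses import dataclass


@dataclass
class Battle:
    model_a: str
    model_b: str
    winner: str  # "model_a", "model_b", or "tie" (hypothetical encoding)


def sample_pair(models, rng):
    """Pick two distinct models. Assumption: uniform sampling (real sampling may be weighted).
    The voter only ever sees them as 'Model A' and 'Model B'."""
    model_a, model_b = rng.sample(models, 2)
    return model_a, model_b


def record_vote(model_a, model_b, vote):
    """Store the vote against the hidden identities, which are revealed only after voting."""
    outcome = {"A": "model_a", "B": "model_b", "tie": "tie"}
    if vote not in outcome:
        raise ValueError(f"unknown vote: {vote!r}")
    return Battle(model_a, model_b, outcome[vote])


# Usage: one anonymous battle between two of three example models.
rng = random.Random(0)
models = ["vicuna-13b", "alpaca-13b", "llama-13b"]
model_a, model_b = sample_pair(models, rng)
battle = record_vote(model_a, model_b, "A")
```

Keeping the identities hidden until after the vote is what lets the community, rather than the model's developers, decide which answer is better.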

We launched last year, and in the first two, three weeks there were a few hundred thousand views on our launch tweets. And then we regularly updated the leaderboard, weekly at the time, adding new models like GPT-4 as well. So that was another pivotal moment.

Just to jump in: yeah, in the beginning, I saw the initial release of the leaderboard was May 3rd. On April 6th we did a Benchmarks 101 episode for the podcast, just kind of talking about how so much of the data is in the pretraining corpus, and blah blah blah, like, the benchmarks are really not what we need to evaluate whether or not a model is good.

Why did you not make a benchmark, maybe, at the time? You know, it's like, hey, let's just put together a bunch of data and score it; that seems much cheaper than coming out with a whole website where users need to vote. Any thoughts behind that?

I think it's more like, fundamentally, we didn't know how to automate this kind of benchmark when it's more conversational, multi-turn, and about more open-ended tasks that may not come with a ground truth.

So let's say you ask a model to help you write an email for whatever purpose: there's no ground truth, so how do you score that, right? Or write a story, a creative story, or many other things; the way we use ChatGPT these days is oftentimes more open-ended. We need humans in the loop to give us feedback on which one is better.

And I think one nuance here is that sometimes it's also hard for humans to give an absolute rating. That's why we have this kind of pairwise comparison: it's easier for people to choose which one is better. So we use these pairwise comparisons and votes to calculate the leaderboard. You can ask Anastasios about the methodology.

Yeah. I mean, I think the point is, and you guys probably also talked about this at some point, that static benchmarks are intrinsically, to some extent, unable to measure generative model performance.

And the reason is that you cannot pre-annotate all the outputs of a generative model. You change the model, and it's like the distribution of your data is changing; you need new labels to deal with that. New labels are great, and so is automated labeling, right? Which is why people are pursuing both. And yes, static benchmarks allow you to zoom in on particular types of information, like factuality or historical facts. We can build the best benchmark of historical facts, and we will then know that the model is great at historical facts. Okay. But ultimately, that's not the only axis, right? We can build fifty of them and evaluate fifty axes. But the problem of generative model evaluation is just so expansive, and it's so subjective, that it's maybe not intrinsically impossible, but at least we don't see a way, or we didn't see a way, of encoding that into a fixed benchmark.

But on the other hand, I think there's a challenge where this kind of online dynamic benchmark is more expensive than a static benchmark. People still need static benchmarks to track their models as they build them.

Yeah. It's not like our benchmark is uniformly better than other benchmarks. It just measures a different kind of performance that has proved to be useful.

You guys also published MT-Bench as well, which is a static version, let's say, of Chatbot Arena, right, that people can actually use in their development of models.

Right. That's, I think, one of the reasons we still do static benchmarks. We still wanted to explore and experiment with whether we can automate this, because eventually model developers need to iterate on their models fast. So that's why we explored LLM-as-a-judge and Arena-Hard: trying to filter and select high-quality data we collect from Chatbot Arena, the high-quality subset, use those as the questions, and then automate the judge pipeline, so that people can quickly get high-quality benchmark signals using this offline benchmark.
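The conversation doesn't spell out the judge pipeline, so the following is only a hedged sketch of the LLM-as-a-judge idea: score a candidate answer against a fixed baseline answer, judging each pair twice with the order swapped so that a judge that always favors one position cancels out. The swap-and-average scheme, the `judge` signature, and the toy judges are assumptions for illustration, not Arena-Hard's exact procedure.

```python
from typing import Callable, List, Tuple

# Hypothetical judge signature (assumption): judge(question, first_answer, second_answer)
# returns "first", "second", or "tie". In practice this would wrap an LLM call.
Judge = Callable[[str, str, str], str]


def judged_score(judge: Judge, question: str, candidate: str, baseline: str) -> float:
    """Score in [0, 1] for the candidate vs. the baseline, judged in both orders."""
    # Round 1: candidate shown first.
    first_round = {"first": 1.0, "tie": 0.5, "second": 0.0}[judge(question, candidate, baseline)]
    # Round 2: candidate shown second, so a judge that always picks one slot averages to 0.5.
    second_round = {"second": 1.0, "tie": 0.5, "first": 0.0}[judge(question, baseline, candidate)]
    return (first_round + second_round) / 2


def win_rate(judge: Judge, items: List[Tuple[str, str, str]]) -> float:
    """items: (question, candidate_answer, baseline_answer) triples."""
    return sum(judged_score(judge, q, c, b) for q, c, b in items) / len(items)


# Toy stand-ins for an LLM judge (hypothetical, for illustration only).
def longer_answer_judge(question: str, first: str, second: str) -> str:
    if len(first) == len(second):
        return "tie"
    return "first" if len(first) > len(second) else "second"


def first_slot_judge(question: str, first: str, second: str) -> str:
    return "first"  # pure position bias


items = [("Explain recursion.", "A function that calls itself on a smaller input.", "It calls itself.")]
```

With these toy judges, the position-biased judge lands at exactly 0.5, while the length-preferring judge rewards the longer candidate every time, a reminder that an automated judge can carry style biases of its own.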

You know, as a community builder, I'm curious about the initial early days. Obviously, when you offer effectively free A/B-testing inference, people will come and use your arena. What do you think was the key unlock for you? Was it funding for the arena? Was it marketing? When people came in, did you see a noticeable skew in the data? Obviously now you have enough data that you can separate things out, like coding and hard prompts, but in the early days, it was just all sorts of things.

Yeah, maybe one thing to establish first is that our philosophy has always been to maximize organic use. I think that really does speak to your point, which is: why do people come? They came to use free LLM inference, right? And a lot of users just come to the website to use direct chat, because you can chat with the model for free.

And then you could think about it like, hey, let's be more on the selfish or conservative or protectionist side and say, no, we're only giving credits to people that battle, and so on and so forth. That strategy wouldn't work, right? Because what we're trying to build is like a big funnel, a big funnel that can direct people. And some people are passionate and interested, and they battle. And yes, the distribution of the people that do that is different. As you're pointing out, they're enthusiasts.

They're early adopters of technology, or they like games, people like this. And we've run a couple of surveys of our user base that indicate this as well.

Yeah, we do see a lot of developers come to the site asking coding questions, around thirty percent.

Yeah, which is obviously not reflective of the general population, but it's reflective of some corner of the world of people that really care. And to some extent, maybe that's all right, because those are like the power users. And, you know, we're not trying to claim that we represent the world, right? We represent the people that come and vote.

Did you have to do anything marketing-wise? Was anything effective? Did you struggle at all? Was it a success from day one?

Well, at some point it almost wasn't. Because, as you can imagine, this all depends on the users, on community engagement and participation. If no one comes to vote tomorrow, then there's no leaderboard. So we had some period of time, just after the initial launch, when the number of users went lower. Yeah. And, you know, at some point it did not look promising.

Yeah. Actually, I joined the project a couple of months in to do the statistical aspects, right? As you can imagine, that's how it kind of hooked into my previous work. At that time, it definitely wasn't clear that this was going to be the eval or something. It was just like, oh, this is a cool project, Wei-Lin seems awesome, you know, and that's it.

Definitely, in the beginning, because people didn't know us and didn't know what it was for, we had a hard time. But I think we were lucky enough that we had some initial momentum, and the competition between models just makes it more fun for us, right? Because everyone wants to be number one.

Number one, yeah. There's also one more thing I'd mention: trust is our main priority in everything we do. We want to make sure we're doing everything right, like all the i's are dotted and the t's are crossed, and nobody gets unfair treatment, and people can see from our profiles and from our previous work and from whatever else that we're trustworthy people. We're not trying to make a buck, and we're not trying to become famous off of this or something. It's just, we're trying to provide a great public project.

Yes. I mean, you are kind of famous now.

Just to dive in more into biases, and, you know, some of this is statistical control. The classic one for human preference evaluation is that humans demonstrably prefer longer contexts or longer outputs, which is actually something that we don't necessarily want. You guys, I think maybe two months ago, put out some length-control studies. Apart from that, there are other documented biases. I'd just be interested in your review of what you've learned about biases, and maybe a little bit about how you've controlled for them.

At a very high level, yeah, humans are biased, totally, in various ways. It's not clear whether that's good or bad. You know, we try not to make value judgments about these things.

We just try to describe them as they are. And our approach is always as follows: we collect organic data, and then we take that data and mine it to get whatever insights we can get.

And, you know, we have many millions of data points that we can now use to extract insights from. Now, one of those insights is to ask the question: what is the effect of style, right?

You have a bunch of data. You have votes, people voting either which way. We have all the conversations. We can ask what components of style contribute to human preference and how they contribute. Now, that's an important question.

Why is it important? Because some people want to see which model would be better if the lengths of the responses were the same, right? People want to see the causal effect of the model identity controlled for length, or controlled for markdown: number of headers, bulleted lists, whether the text is bold. Some people don't; they just don't care about that. The idea is not to impose the judgment that this is not important, but rather to say, ex post facto, can we analyze our data in a way that decouples all the different factors that go into human preference?

Now, the way we do this is via statistical regression. That is to say, the arena score that we show on our leaderboard is a particular type of linear model, right? It's a logistic regression that takes model identities and fits them against human preference. So it regresses human preference against model identity, and what you get at the end of the logistic regression is a parameter vector of coefficients.
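The regression just described can be sketched in a few lines. This is a minimal Bradley-Terry fit by gradient ascent on synthetic votes, assuming ties are dropped and each battle contributes a +1 feature for model A and −1 for model B. The Elo-like rescaling (400 / ln 10, centred on 1000) is a presentation choice assumed here, not necessarily the arena's exact convention, and the function name is hypothetical.

```python
import math


def fit_bradley_terry(battles, models, lr=0.1, epochs=1000):
    """Logistic regression of 'A won' on model identity.

    Each battle (model_a, model_b, a_won) has feature +1 for model_a and -1 for model_b,
    so logit P(A wins) = beta[A] - beta[B]. Assumption: ties were dropped beforehand.
    """
    idx = {m: i for i, m in enumerate(models)}
    beta = [0.0] * len(models)
    n = len(battles)
    for _ in range(epochs):
        grad = [0.0] * len(models)
        for a, b, a_won in battles:
            p = 1.0 / (1.0 + math.exp(-(beta[idx[a]] - beta[idx[b]])))
            err = a_won - p  # derivative of the log-likelihood w.r.t. the logit
            grad[idx[a]] += err
            grad[idx[b]] -= err
        beta = [x + lr * g / n for x, g in zip(beta, grad)]
    # The gradients sum to zero, so the mean coefficient stays at 0.
    # Assumption: map to an Elo-like scale anchored at 1000 for readability.
    scale = 400 / math.log(10)
    return {m: 1000 + scale * beta[idx[m]] for m in models}


# Synthetic votes (made up for illustration): X beats Y 70/100, Y beats Z 70/100, X beats Z 80/100.
battles = (
    [("X", "Y", 1)] * 70 + [("X", "Y", 0)] * 30
    + [("Y", "Z", 1)] * 70 + [("Y", "Z", 0)] * 30
    + [("X", "Z", 1)] * 80 + [("X", "Z", 0)] * 20
)
scores = fit_bradley_terry(battles, ["X", "Y", "Z"])
```

Only differences between the fitted scores carry meaning; the 1000 anchor is arbitrary. At real scale one would hand the same design matrix to a standard logistic-regression solver instead of this hand-rolled loop.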

And when the coefficient is large, it tells you that GPT-4o or whatever has a very large coefficient, which means that it's strong. And that's exactly what we report in the table: it's just the predictive effect of the model identity on the vote. Another thing that you can do is take that vector. Let's say we have M models; that's an M-dimensional vector of coefficients.

What you can do is say, hey, I also want to understand what the effect of length is, so I add another entry to that vector, also used to predict the vote, whose feature is the difference in length between the two model responses. We have that for all of our data; we can compute it ex post facto. We add it into the regression, and we look at that predictive effect.

And then the idea, and this is formally true under certain conditions, not always verifiable ones, is that adding that extra coefficient will kind of suck out the predictive power of length, put it into that (M+1)-th coefficient, and debias the rest, so that the effect of length is not included. And that's what we do in style control. Now, we don't just do it with M plus one.

We have, you know, five or six different style components, having to do with markdown headers and bulleted lists and so on, that we add here. Now, where is this going? You guys see that the idea is a general methodology.
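A minimal sketch of the style-control idea on synthetic data: the same logistic regression as the arena score, plus one extra coefficient for the difference in response length. The data, the model names, and the ±1 length encoding are made up for illustration; the arena uses several style features and its own normalization, which the conversation doesn't specify.

```python
import math


def fit_with_style(battles, models, n_style, lr=0.5, epochs=2000):
    """Logistic regression: logit P(A wins) = beta[A] - beta[B] + sum_k gamma[k] * style_diff[k].

    battles: list of (model_a, model_b, style_diffs, a_won), where style_diffs holds n_style
    numbers (assumption: e.g. a normalized 'length of A minus length of B').
    Returns (beta dict, gamma list) on the raw logit scale.
    """
    idx = {m: i for i, m in enumerate(models)}
    beta = [0.0] * len(models)
    gamma = [0.0] * n_style
    n = len(battles)
    for _ in range(epochs):
        g_beta = [0.0] * len(models)
        g_gamma = [0.0] * n_style
        for a, b, diffs, a_won in battles:
            z = beta[idx[a]] - beta[idx[b]] + sum(g * d for g, d in zip(gamma, diffs))
            err = a_won - 1.0 / (1.0 + math.exp(-z))
            g_beta[idx[a]] += err
            g_beta[idx[b]] -= err
            for k, d in enumerate(diffs):
                g_gamma[k] += err * d
        beta = [x + lr * g / n for x, g in zip(beta, g_beta)]
        gamma = [x + lr * g / n for x, g in zip(gamma, g_gamma)]
    return {m: beta[idx[m]] for m in models}, gamma


# Synthetic data (made up): "verbose" and "concise" are equally good, but voters prefer
# whichever answer is longer, and "verbose" is usually the longer one (length diff +1 vs -1).
battles = (
    [("verbose", "concise", (1.0,), 1)] * 60 + [("verbose", "concise", (1.0,), 0)] * 20
    + [("verbose", "concise", (-1.0,), 1)] * 5 + [("verbose", "concise", (-1.0,), 0)] * 15
)
models = ["verbose", "concise"]

# Without style control: drop the style features, so length is ignored.
naive, _ = fit_with_style([(a, b, (), w) for a, b, _, w in battles], models, n_style=0)
# With style control: the extra coefficient absorbs the length effect.
controlled, gamma = fit_with_style(battles, models, n_style=1)
```

On this toy data the uncontrolled gap between the two models is logit(0.65) ≈ 0.62, because "verbose" wins 65% of its battles. With the length term included the model gap drops to about 0, and the length coefficient picks up logit(0.75) ≈ 1.10 instead.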

If you have something that's sort of like a nuisance parameter, something that exists and provides predictive value but that you really don't want to estimate, you want to remove its effect. In causal inference, these things are often called confounders.

What you can do is model the effect: put them into your model and try to adjust for them. So another one of those things might be cost. You know what?

If I want to look at the cost-adjusted performance of a model, which models are punching above their weight, or which models are punching above their weight in terms of parameter count, we can ex post facto measure that. We can do it without introducing anything that compromises the organic nature of the data that we collect. Hopefully that answers the question.

It does. For someone with a background in econometrics, this is super familiar.

You're probably better at this than me, for sure.

Well, I mean, I used to be a quantitative trader, and so, you know, controlling for multiple effects on stock prices is effectively the job. So it's interesting. Obviously, the problem is proving causation, which is hard, but you don't have to do that.

Yes, yes, that's right. Causal inference is a hard problem, and it goes beyond statistics, right? You have to build the right causal model. But we think that this is a good first step, and we're sort of looking forward to learning from more people. There are some good people at Berkeley who work on causal inference, and we're learning from them about what the most contemporary techniques are that we could use to estimate true causal effects, if possible.

Maybe we could take a step through the other categories. So style control is a category; it is not a default. I would have thought, when you wrote the blog post, that it would be the new default. It seems like the most obvious thing. But you also have categories like coding and hard prompts.

We considered that. But once you take that step, you're introducing your opinion. And why should our opinion be the one? That's kind of the community's choice; we could put it to a vote.

I don't know, no opinion is an opinion too. It's a choice. Yeah, you also have instruction following. Just pick a few of your favorite categories and talk about the story behind them and the hard choices you had to make.

Yeah, yeah, yeah. I think initially the reason why we wanted to add these new categories is essentially to answer some of the questions from the community, which is that we won't have a single leaderboard for everything. These models behave very differently in different domains. Let's say this model is trained for coding, and this model is trained for more technical questions. On the other hand, we wanted to answer people's questions about, okay, what about all this low-quality data? Because we crowdsource our data from the internet, there will be noise. So how do we denoise it, how do we filter out this low-quality data effectively? Those were some of the questions we wanted to answer.

So basically, we spent a few months really diving into these questions to understand how to filter all of this, because there are millions of data points. And if you wanted to relabel it all yourself, well... So we needed to automate this kind of data classification pipeline so we can effectively categorize the data into different categories, say coding, math, instruction following, and also hard prompts.

So the hope is that when we slice the data into these meaningful categories, we give people better, more direct signals. That's also to clarify what we are actually measuring, because I think that's the core part of the benchmark. That was the initial motivation. Does that make sense?
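The slicing idea can be sketched as follows. The keyword rules are a hypothetical stand-in for the automated classification pipeline mentioned above (the conversation doesn't say how that pipeline works), and raw win rates stand in for refitting the arena score within each slice, just to keep the example short.

```python
from collections import defaultdict

# Hypothetical keyword rules standing in for an automated prompt classifier (assumption).
CATEGORY_RULES = {
    "coding": ("python", "function", "compile", "bug"),
    "math": ("integral", "prove", "equation", "probability"),
}


def categorize(prompt):
    """Return every category whose keywords appear in the prompt (a prompt can be in several)."""
    text = prompt.lower()
    return {cat for cat, words in CATEGORY_RULES.items() if any(w in text for w in words)}


def win_rates_by_category(battles):
    """battles: list of (prompt, model_a, model_b, a_won). Returns {category: {model: win rate}}."""
    wins = defaultdict(lambda: defaultdict(float))
    games = defaultdict(lambda: defaultdict(int))
    for prompt, a, b, a_won in battles:
        for cat in categorize(prompt) | {"overall"}:
            wins[cat][a] += a_won
            wins[cat][b] += 1 - a_won
            games[cat][a] += 1
            games[cat][b] += 1
    return {cat: {m: wins[cat][m] / games[cat][m] for m in games[cat]} for cat in games}


# Usage with two made-up battles.
battles = [
    ("Fix this Python bug in my sort function", "model-1", "model-2", 1),
    ("Write a poem about spring", "model-1", "model-2", 0),
]
rates = win_rates_by_category(battles)
```

Here the two models tie overall, but model-1 wins every coding battle, which is exactly the kind of difference a single pooled leaderboard hides.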

Yeah, and I'll just say this does get back to the philosophy of mining organic data, like Wei-Lin said.

Is the data cage-free too, or just organic?

It's cage-free, grass-fed.

Yeah, and all of these efforts are open source: we open-source all of the data-cleaning pipelines.

Yeah, I love the notebooks you guys publish. They're actually really good just for learning statistics.

Yeah. I'd agree on the initial premise of, hey, writing an email, writing a story, there's like no ground truth. But I think as you move into coding and red teaming, some of these things have kind of skill levels. So I'm curious how you think about the distribution of skill among the users. Maybe the top one percent of red teamers are just not participating in the arena. So how do you think about adjusting for that? It feels like in these areas there are big differences between the average and the top.

Yeah, red teaming, of course, is quite challenging. So, okay, moving back, there are definitely some tasks that are not as subjective, where pairwise human preference feedback is not the only signal that you would want to measure.

And to some extent maybe it's useful, but it may be more useful if you give people better tools. For example, it'd be great if we could execute code within the arena. That'd be fantastic.

We want to do that. There's also the idea of constructing a user leaderboard. What does that mean? It means some users are better than others. And how do we measure that? How do we quantify that? It's hard in Chatbot Arena, but where it's easier is in red teaming, because in red teaming there's an explicit game: you're trying to break the model, and you either win or you lose. So what you can do is say, hey, what's really happening is that the models and humans are playing a game against one another. And then you can use the same sort of Bradley-Terry methodology with some extensions that we came up with; you can read one of our recent blog posts for the theoretical extensions. You can attribute strength back to individual players, and jointly attribute strength to the models that are in this jailbreaking game, along with the target tasks, like what types of jailbreaks are involved. So yeah, I think this is a hugely important and interesting avenue that we want to continue researching. We have some initial ideas, but all thoughts are welcome.
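As a rough illustration of treating red teaming as a game, here is a plain Bradley-Terry-style model where each attempt pits a player against a model: logit P(jailbreak succeeds) = θ_player − φ_model. This is an assumption-laden sketch on made-up data; it ignores target tasks and does not reproduce the extensions described in the LMSys blog post. All names are hypothetical.

```python
import math


def fit_red_team(attempts, players, models, lr=0.5, epochs=2000):
    """attempts: list of (player, model, success) with success in {0, 1}.

    Assumption: logit P(success) = theta[player] - phi[model], fitted by gradient ascent on
    the log-likelihood. Higher theta = stronger attacker; higher phi = more robust model.
    """
    theta = {p: 0.0 for p in players}
    phi = {m: 0.0 for m in models}
    n = len(attempts)
    for _ in range(epochs):
        g_theta = {p: 0.0 for p in players}
        g_phi = {m: 0.0 for m in models}
        for player, model, success in attempts:
            prob = 1.0 / (1.0 + math.exp(-(theta[player] - phi[model])))
            err = success - prob
            g_theta[player] += err
            g_phi[model] -= err
        for p in players:
            theta[p] += lr * g_theta[p] / n
        for m in models:
            phi[m] += lr * g_phi[m] / n
    return theta, phi


def attempts_for(player, model, successes, total):
    """Hypothetical helper: `successes` jailbreaks out of `total` tries."""
    return [(player, model, 1)] * successes + [(player, model, 0)] * (total - successes)


# Made-up data: the strong player breaks models more often; model-B resists more often.
attempts = (
    attempts_for("strong-player", "model-A", 8, 10) + attempts_for("strong-player", "model-B", 5, 10)
    + attempts_for("weak-player", "model-A", 5, 10) + attempts_for("weak-player", "model-B", 2, 10)
)
theta, phi = fit_red_team(attempts, ["strong-player", "weak-player"], ["model-A", "model-B"])
```

The same fit yields a player leaderboard (θ) and a model-robustness leaderboard (φ) at once, which is the "attribute strength to both sides" idea in its simplest form.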

Yeah. Well, first of all, on code execution, the E2B guys, I'm sure they'll be happy to help you set that up; they're big fans. We're investors in a company called Dreadnode, which does a lot in AI red teaming. I think to me the most interesting thing has been, how do you do... sure, the model jailbreak is one side. We also had Nicholas Carlini from DeepMind on the podcast, and he was talking about, for example, context stealing and weight stealing. So there's a lot more that goes around it. I'm curious just how you think about the model and then maybe the broader system. Even with Red Team Arena, you're just focused on jailbreaking the model, right? You're not doing any testing of the more system-level things, like maybe you can get the training data back, or you can exfiltrate some of the layers and the weights, and things like that.

So right now, as you can see, Red Team Arena is at an early stage, and we are still exploring what new games we could introduce to the platform. The idea is still the same, right? We build a community-driven platform where people can have fun with the website, for sure, that's one thing, and then help everyone test these models. So one of the aspects you mentioned, stealing secrets or stealing training sets, could be designed as a game. Say, can you steal user credentials? Maybe we can hide the credentials in the system prompt. So there are a few potential ideas we want to explore, for sure. Do you want to add anything?

I think this is great. This idea is a great one. There are a lot of great ideas in the red-teaming space. You know, I'm not personally a red teamer; I don't go around red-teaming models. But there are people that do that, and they're awesome, they're super skilled.

And when I think about Red Team Arena, those are really the people we're building it for. We want to make them excited and happy, build tools that they like. And just like with Chatbot Arena, I'll trust that this will end up being useful for the world. And all these people are, you know, I want to say all these people in this community are actually good-hearted, right?

They're not doing it because they want to see the world burn. They're doing it because they think it's fun and cool. And yeah, okay, maybe they want something else. So, you know, we're trying to figure out how to serve them best. I don't know exactly where that fits; I just want to give them credit, yeah. So I'm not trying to express any particular value judgment here as to whether that's the right next step. It's just that that's sort of the way I think we would think about it.

Yeah. We also talked to Sander Schulhoff of the HackAPrompt competition, and he's pretty interested in red teaming at scale. I'll just call that out; you guys may want to talk with him.

Oh nice.

We wanted to cover a few topical things and then go into the other stuff that your group is doing. You know, you're not just running Chatbot Arena. We can also talk about the new website and your future plans, but I just wanted to briefly focus on o1.

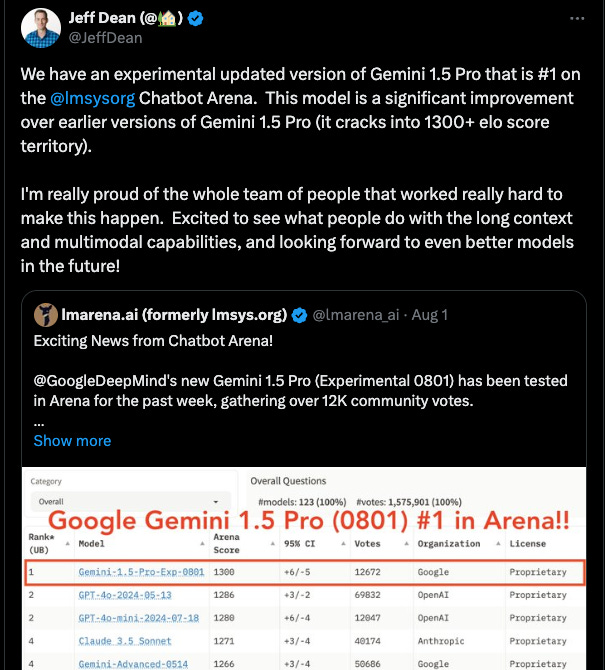

It is the hottest, latest model. Obviously, you guys already have it on the leaderboard. What is the impact of o1 on your evals?

It made our interface slower.

Yeah, because it needs like thirty, sixty seconds, sometimes even more, so the latency is high. So that's one, for sure. But I think we observed very interesting things from the model as well, like significant improvement in certain categories, like more technical ones.

Yes, I think one takeaway that was encouraging is that a lot of people, before the o1 release, were thinking, oh, this benchmark is saturated. And why were they thinking that? Because there were a bunch of models that were kind of at the same level.

They were just kind of incrementally competing, and it wasn't immediately obvious that any of them were any better. Nobody, including any individual person, could tell. But what o1 did is, it's clearly a better model for certain tasks.

I mean, I used it for proving some theorems, and there are some theorems that only I know, because I still do a little bit of theory, right? So I can go on there and ask, oh, how would you prove this exact thing, which I can tell you has never been in the public domain. It'll do it, and I'm like, what? Okay. So there's this model, and it crushed the benchmark.

You know, it's just a really big gap. And what that's telling us is that it's not saturated yet; we're still measuring some signal. That was encouraging.
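Because the scores are comparative, a gap is easiest to read as a head-to-head win probability. The sketch below assumes the conventional Elo scale (base 10, 400 points), taken here to match how arena scores are presented; it also shows that adding the same constant to both ratings changes nothing, which is why there is no maximum score. The function name is hypothetical.

```python
def win_probability(rating_a, rating_b):
    """Expected probability that A is preferred over B, assuming the standard Elo scale."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# A 100-point gap corresponds to roughly a 64% head-to-head preference rate.
p = win_probability(1100, 1000)
# Only the difference matters: shifting both ratings leaves the probability unchanged.
shifted = win_probability(100, 0)
```

By the same formula, equal ratings give exactly 50%, and a model that pulls far ahead of the pack shows up as a large gap rather than hitting any ceiling.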

Yeah, the takeaway is that the benchmark is comparative. There's no absolute number; there's no maximum Elo. It's just, if you're better than the rest, then you win. I think that was actually quite helpful to us.

I think people were criticizing it; I saw some of the academics criticizing it as not apples to apples, right? Because it can take more time to reason: it's basically doing some search, doing some chain of thought. If you actually let the other models do that same thing, they might do better.

Absolutely. But, I mean, none of the leaderboard is currently apples to apples, because you have Gemini Flash, you have all sorts of tiny models like Llama 8B; 8B and 405B are not apples to apples.

They have different latencies.

So latency control, that's another thing we can do, like style control or length control. You know, things like this are important if you want to understand the trade-offs involved in using AI.

o1 is a developing story. We still haven't seen the full model yet, but it's a very exciting new paradigm. I think one controversy I just want to give you guys space to address is the collaboration between you and the large model labs. People have been suspicious, let's say, about how they choose to A/B test on you.

I'll state the argument and let you respond, which is basically: they run, like, five anonymous models, basically argmax their Elo on LMSys or Chatbot Arena, and release the best one, right? What has been your end of the controversy? How have you decided to clarify your policy going forward?

On a high level, I think our goal here is to build the best eval for everyone, so everyone in the community can see the leaderboard and understand and compare the models.

More importantly, I think we want to build the best eval also for model builders, like all these frontier labs building models. They're also internally facing a challenge, which is, you know, how do you eval models? That's the reason why we want to partner with all the frontier lab people and help them with testing. We want to solve this technical challenge, which is evaluation.

Yeah, I mean, it benefits everyone. And people are also interested in seeing the bleeding edge of the models. People in the community seem to like that: oh, there's a new model, is this Strawberry? People are excited, and they're interested.

And then there's this question that you bring up: is it actually causing harm, right? Is it causing harm to the benchmark that we allow this private testing to happen? Maybe stepping back, why do you have that instinct?

The reason why you and others in the community have that instinct is that when you look at something like a benchmark, like ImageNet, a static benchmark, if I give you a million different models that are all slightly different and pick the best one, there's something called selection bias that plays in: the performance of the winning model is overstated. This is also sometimes called the winner's curse.

And that's because statistical fluctuations in the evaluation are driving which model gets selected as the top. So this selection bias can be a problem. Now, there are a couple of things that make this benchmark slightly different. First of all, the selection bias you incur when you're only testing five models is normally empirically small.
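A quick simulation of the winner's curse described above, under stated assumptions: all variants are exactly as good as each other (true win rate 0.5), votes are independent, and the observed win rate is approximated as normal. The vote counts and trial counts are arbitrary illustrative choices, not arena measurements, and the function name is hypothetical.

```python
import random
import statistics


def winners_curse(n_models=5, n_votes=1000, true_rate=0.5, trials=2000, seed=0):
    """Average overstatement of the best observed win rate when n_models equally good
    variants are each scored on n_votes votes (assumption: normal approximation)."""
    rng = random.Random(seed)
    sd = (true_rate * (1 - true_rate) / n_votes) ** 0.5
    gaps = []
    for _ in range(trials):
        observed = [true_rate + sd * rng.gauss(0.0, 1.0) for _ in range(n_models)]
        gaps.append(max(observed) - true_rate)  # the "winner" is whichever variant looked best
    return statistics.mean(gaps)


bias_one = winners_curse(n_models=1)     # no selection: close to zero
bias_five = winners_curse(n_models=5)    # best of five: overstated by about 1.16 standard errors
bias_five_more_votes = winners_curse(n_models=5, n_votes=10_000)  # more votes, smaller bias
```

With 1,000 votes per variant the best-of-five overstatement is roughly 1.8 percentage points of win rate in this toy setup, and it shrinks in proportion to the standard error as votes accumulate.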

And that's why we have these kinds of confidence intervals.

That's right. Our confidence intervals are actually not multiplicity-adjusted. But one thing we could do immediately, like tomorrow, to address this concern: if a model provider is testing five models and wants to release one, and we're constructing the intervals at level 1 − α, we can instead construct them at level 1 − α/5. That's called the Bonferroni correction.
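A small sketch of that Bonferroni adjustment, assuming a normal-approximation interval around a score with a known standard error; the arena's own intervals may be computed differently, and the score (1250) and standard error (5) below are arbitrary illustrative numbers.

```python
from statistics import NormalDist


def z_critical(alpha=0.05, n_candidates=1):
    """Two-sided normal critical value for level 1 - alpha / n_candidates (Bonferroni)."""
    return NormalDist().inv_cdf(1 - alpha / (2 * n_candidates))


def confidence_interval(estimate, std_err, alpha=0.05, n_candidates=1):
    """Normal-approximation interval, widened when one model is picked from n_candidates."""
    z = z_critical(alpha, n_candidates)
    return estimate - z * std_err, estimate + z * std_err


# With a 95% target, picking one of 5 private variants moves z from about 1.96 to about 2.58.
plain = confidence_interval(1250.0, 5.0)
adjusted = confidence_interval(1250.0, 5.0, n_candidates=5)
```

The adjusted interval is about 31% wider; that is the price of guaranteeing coverage for whichever of the five variants ends up being released.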

What you'll tell you is that like the final performance of the model, like the interval that against constructed actually formally correct, we don't do that right now, partially because we kind of have no from simulations that the amount of selection biases you occur with these five things just, just not huge. It's not huge. And comparison is the variability that you get from the from just regular human voters. So that's one thing.

But then the second thing is the venture work is live, right? So when I am happening is that will be a small managing two. But even if you suffer from the winners curse after testing these five models, what happened is that over time, because we're getting new data, it'll get adjust IT down.

So there is any bias that against introduced at that stage in the long run, that actually doesn't matter. Is asymptotically basically, like in the long run, is way more fresh data than there is data that was used to compare these five models. Inst models the announcement .

So that's only the first phase, and there's still a long tail. Yeah.

That's right. And it sort of automatically corrects for this selection bias every month.

I do a little chart of LLM Elo versus cost, just to track the price per dollar: how much money do I have to pay for one incremental point of Elo? And I actually observe an interesting stability in most of the Elo numbers, except for some of them. For example, GPT-4o August has fallen from 1290 to 1260 over the past few months. And it's surprising.

You're saying a new version of GPT-4o versus the version from May?

There was May; May is 1285. I could have made some data entry error, but it's interesting to track these things over time. Anyway, I observe numbers go up and numbers go down. It's remarkably stable.
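The "price per incremental Elo point" metric mentioned here is a simple ratio. Below is a minimal sketch; the function name `dollars_per_elo_point` and the prices and scores in the example are made up for illustration, not real model pricing or leaderboard values.

```python
def dollars_per_elo_point(
    price_low: float, elo_low: float, price_high: float, elo_high: float
) -> float:
    """Marginal price of one extra Elo point when moving from a cheaper to a stronger model.

    Prices can be in any consistent unit, e.g. dollars per million tokens.
    """
    if elo_high <= elo_low:
        raise ValueError("the pricier model must have a higher Elo for this ratio to be meaningful")
    return (price_high - price_low) / (elo_high - elo_low)


if __name__ == "__main__":
    # Hypothetical: $3 vs $15 per million tokens, Elo 1240 vs 1280.
    print(dollars_per_elo_point(3.0, 1240.0, 15.0, 1280.0))
```

Because the ratio divides by an Elo gap, a score drift of around 30 points, like the one described above, can move it substantially when the two models are close.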

Yes. And sometimes Elos rise as well; Reka Core rose from 1230, for instance. Just one of the things, by the way, that the community is always suspicious about: hey, did the same endpoint get dumber after release, right? It's such a meme.

Those are different end points.

Right? Those are different API endpoints: GPT-4o August and May. But for fixed endpoint versions, we usually observe only small variation after release.

I mean, you can quantify the variation that you would expect in an Elo score; that's a closed-form number that you can calculate. So if the variations are larger than we would expect, then that indicates we should look into it. That's important for us to know, so maybe you should send us a reply. Yeah, please send us the data.
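One standard way to get such a closed-form number is the delta method. This is a sketch under stated assumptions, not necessarily the exact formula Arena uses: a single pair of models, independent votes, ties ignored, and the logistic Elo scale of 400/ln(10) points per unit of log-odds. If the win rate p is estimated from n battles, its variance is p(1 − p)/n, and propagating that through the Elo transform gives the standard error of the implied Elo gap. The function names are hypothetical.

```python
import math

# Elo points per unit of log-odds under the standard logistic Elo model.
ELO_SCALE = 400 / math.log(10)


def elo_gap_from_win_rate(win_rate: float) -> float:
    """Elo difference implied by a head-to-head win rate (ties ignored)."""
    return ELO_SCALE * math.log(win_rate / (1 - win_rate))


def elo_gap_std_err(win_rate: float, num_battles: int) -> float:
    """Delta-method standard error of the implied Elo gap from independent votes.

    Var(p_hat) = p(1 - p) / n and d(gap)/dp = ELO_SCALE / (p(1 - p)),
    so SE(gap) = ELO_SCALE / sqrt(n * p * (1 - p)).
    """
    return ELO_SCALE / math.sqrt(num_battles * win_rate * (1 - win_rate))


def drift_z_score(old_gap: float, new_gap: float, std_err_old: float, std_err_new: float) -> float:
    """How many standard errors apart two independent Elo-gap estimates are."""
    return (new_gap - old_gap) / math.hypot(std_err_old, std_err_new)


if __name__ == "__main__":
    # Hypothetical: an even matchup over 1,000 votes.
    print(round(elo_gap_std_err(0.5, 1_000), 2))
```

For an even matchup over 1,000 votes the standard error is about 11 Elo points, so a drift much larger than a couple of combined standard errors (a large `drift_z_score`) is the kind of variation worth reporting.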

And I know we only have a few minutes before we wrap, but there are two things I definitely wanted to talk about. One is RouteLLM. So, talking about models maybe getting dumber over time:

But are routers actually helpful, in your experience? And Shawn pointed out that MoEs are technically routers, too. So how do you think about the router being part of the model versus routing to different models? And, yeah, what are your overall learnings from building it?

Yeah, so RouteLLM is a project we released a few months ago, I think. Our goal was basically to understand: can we use the preference data we collect to route between models conditional on the question? Because we can make the assumption that some models are good at math, some models are good at coding, and so on. And we found that somewhat useful, for sure.

This is an ongoing effort. The first phase of it is pretty much open-sourcing the framework that we developed. So anyone who is interested in this problem can use the framework, train their own router model, and then run evaluations to benchmark it.

So that's kind of our goal, and the reason why we released this work. And there are a couple of future things we're thinking about. One is, you know, can we just scale this with even more data, even more preference data, and then train a reward model, a better router model?
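To illustrate the idea of routing "conditional on the question" from preference data, here is a deliberately simple, hypothetical stand-in. It does not reproduce RouteLLM's code, API, or routers. It assumes each vote between a weak and a strong model is labeled with a query category, and it sends a category to the weak model only if that model wins or ties often enough there. The names `fit_category_router` and `route_by_category` and the toy data are illustrative.

```python
from collections import defaultdict


def fit_category_router(battles, threshold: float = 0.5) -> dict:
    """Learn, per query category, whether the cheap model is good enough.

    `battles` is an iterable of (category, outcome) pairs, where outcome is
    "weak", "strong", or "tie" from a human vote between a weak and a strong model.
    Returns a dict mapping category -> "weak" or "strong".
    """
    wins_or_ties = defaultdict(int)
    totals = defaultdict(int)
    for category, outcome in battles:
        totals[category] += 1
        if outcome in ("weak", "tie"):
            wins_or_ties[category] += 1
    return {
        category: "weak" if wins_or_ties[category] / totals[category] >= threshold else "strong"
        for category in totals
    }


def route_by_category(router: dict, category: str, default: str = "strong") -> str:
    """Send categories never seen in the preference data to the strong model, to be safe."""
    return router.get(category, default)


if __name__ == "__main__":
    toy_battles = [
        ("coding", "strong"), ("coding", "strong"), ("coding", "weak"),
        ("chitchat", "tie"), ("chitchat", "weak"), ("chitchat", "strong"),
    ]
    router = fit_category_router(toy_battles)
    print(router, route_by_category(router, "math"))
```

Real routers learn from the query text itself rather than from hand-assigned categories, which is exactly where more preference data and a better reward model would help.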

Another thing is to release a benchmark for this, because one of the pain points when we developed this project was that there is just no good benchmark for routers. So that would be another useful contribution to the community, we think. And there are still...

Yeah, I think my fundamental philosophical doubt is: does the router model have to be at least as smart as the smartest model? What's the minimum required intelligence of a router model? Right? Like, if it's too dumb, it's not going to route properly.

I think that you can build a very, very simple router that is very effective. Let me give you an example. You can build a great router with one parameter, and the parameter is just: I'm going to check whether my question is hard. If it's hard, I'm going to go to the big model; if it's easy, I'm going to go to the little model. There are various ways of measuring hardness that are pretty trivial, right? Does it have code, does it have math, is it long? That's already a great first step, because ultimately, at the end of the day, you're competing with a weak baseline, which is any individual model.

And you're trying to answer the question: how do I improve cost? That's a one-dimensional tradeoff, performance versus cost, and it's great. Now you can also get into the extension, which is: which models are good at which particular categories of queries?
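The one-parameter router described above can be sketched in a few lines. The three signals (code, math, length) come from the conversation, but the specific regular expressions, the 150-word cutoff, and the names `difficulty` and `route` are hypothetical choices for illustration. The single tunable parameter is `threshold`: how many signals must fire before a query is sent to the big model.

```python
import re

# Hypothetical, crude "hardness" signals mirroring: has code, has math, is long.
CODE_PATTERN = re.compile(r"```|\bdef\b|\bclass\b|#include|;\s*$", re.MULTILINE)
MATH_PATTERN = re.compile(r"\d\s*[-+*/^=]\s*\d|\b(prove|integral|derivative|equation)\b", re.IGNORECASE)
LONG_QUERY_WORDS = 150


def difficulty(query: str) -> int:
    """Count how many crude hardness signals fire (0 to 3)."""
    score = 0
    if CODE_PATTERN.search(query):
        score += 1
    if MATH_PATTERN.search(query):
        score += 1
    if len(query.split()) > LONG_QUERY_WORDS:
        score += 1
    return score


def route(query: str, threshold: int = 1) -> str:
    """Send the query to the big model only if enough hardness signals fire."""
    return "big" if difficulty(query) >= threshold else "small"


if __name__ == "__main__":
    for q in ("Tell me a joke about cats.", "Prove that the square root of 2 is irrational."):
        print(route(q), "<-", q)
```

Sweeping `threshold` from 0 to 4 traces out the one-dimensional performance/cost tradeoff: 0 sends everything to the big model, 4 sends everything to the small one, and the values in between trade quality for savings.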

And then, you know, I think your concern starts to factor in. Can we actually do that? Can we estimate which models are good in which parts of the space in a way that doesn't introduce more variability, more variation and error, into the final pipeline than just using the best model? That's kind of how I see it.

Your approach is really interesting compared to the commercial approaches, in that you use information from Chatbot Arena to inform your model, which is, I mean, smart, and it's the foundation of everything you do. As we wrap up:

Can we just talk about LMSys and what that's going to be going forward? And LMArena becoming its own thing? I saw you announced yesterday that you're graduating. I think maybe that was confusing, since you're PhD students.

Just for context, LMSys started as a student club, student-driven, yeah. Many different student-driven research projects are part of LMSys. Chatbot Arena has, of course, kind of become its own thing. And Lianmin and Ying have, you know, kind of moved on to working on SGLang, and now there are other projects originating from LMSys. For that reason, we thought it made sense to kind of decouple the two, so that when someone says LMSys, they don't just think of Chatbot Arena. That's not fair, so to speak.

And we want to support new projects and so forth. But because these are all, you know, our friends, that's why we call it the graduation.

I agree. That's one thing people were maybe a little confused by: where LMSys kind of ends and where Arena starts. And I think you've reached escape velocity now that you're kind of your own thing. Thanks.

So, one parting question. What do you want more of? What do you want people to approach you with?

Oh god, one thing would be that we're obviously expanding into other kinds of arenas, right? We definitely need active help on red teaming. We definitely need active help on our different modalities and different modes: Copilot, coding, whatever.

You know, if somebody could help us implement this, like, REPL-in-the-loop arena, that would be a massive help. And I know that there are people out there who are passionate and capable of doing that; it's just that we don't have enough hands on deck.

We're just an academic research lab, right? We're not equipped to support this kind of project. So yeah, we need help with that. We also need general backend dev, and new ideas, new conceptual ideas. I mean, honestly, the work that we do spans everything from foundational statistics, like new proofs, to full-stack dev, and anybody who wants to contribute something to that pipeline should definitely reach out. We need it. And it's an open-source project anyway; anyone can make a PR.

And we're happy to give credit to whoever wants to contribute. We're not trying to keep all the credit for ourselves. We want it to be a community project.

That's great, and it fits the spirit of everything you've been doing over there. So, awesome, guys. Well, thank you so much for taking the time, and we'll put all the links in the show notes so that people can find you and reach out to you. Thank you so much.

It was very nice talking to you, and thank you for the wonderful questions. Thank you so much.