“Testing which LLM architectures can do hidden serial reasoning” by Filip Sondej

LessWrong (30+ Karma)


**Summary**

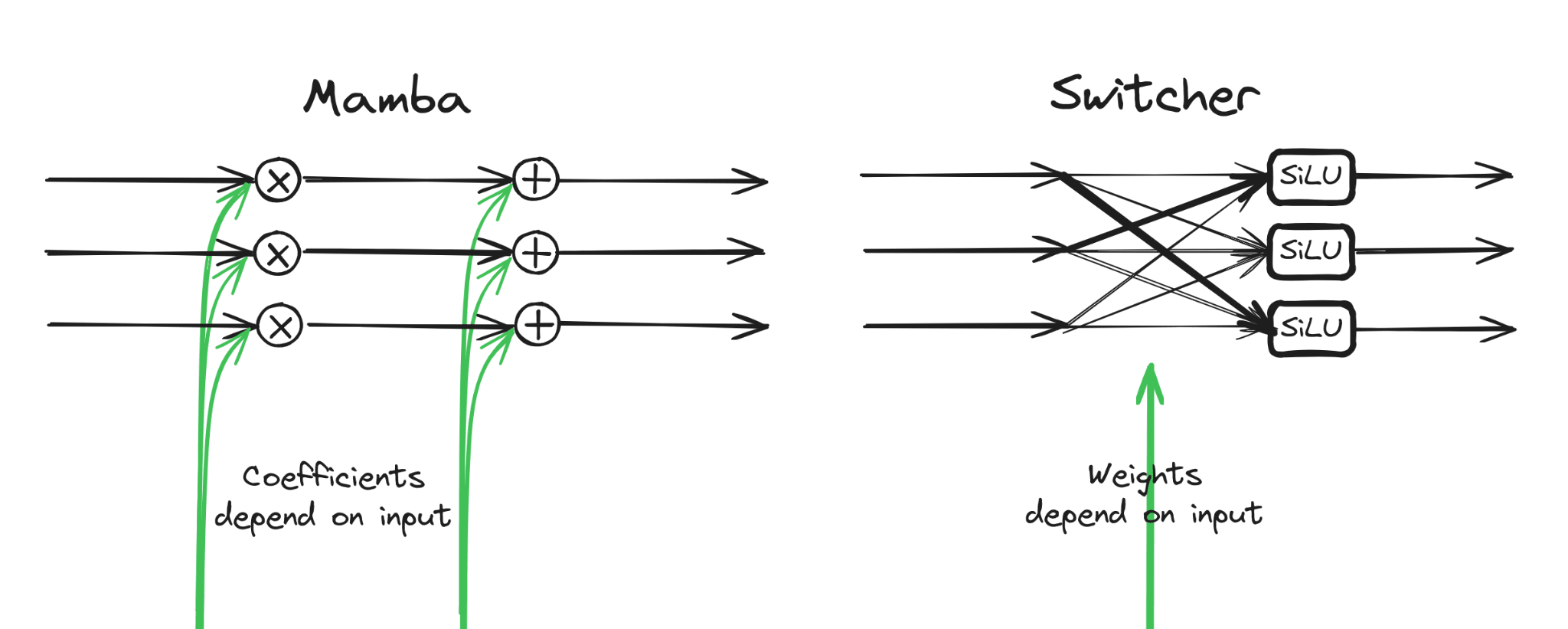

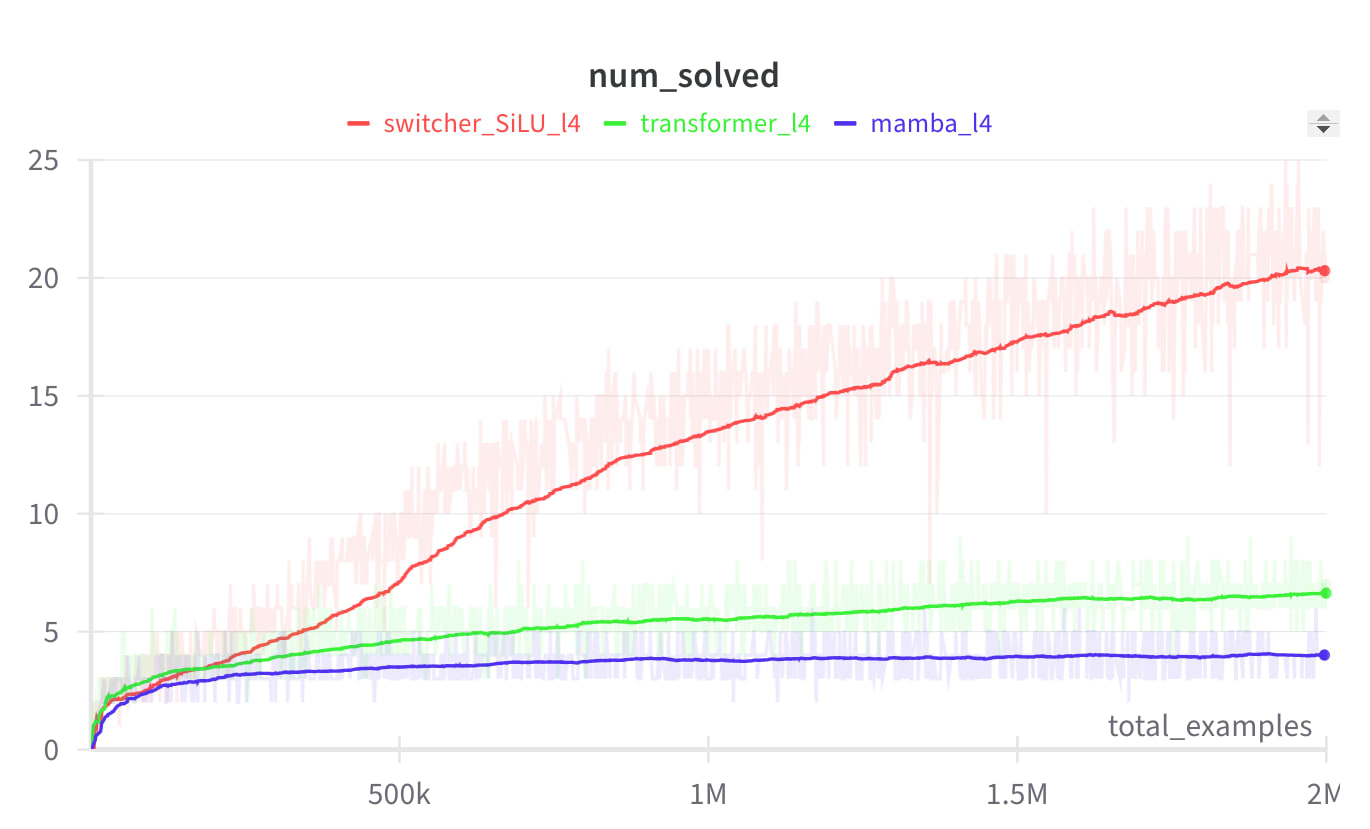

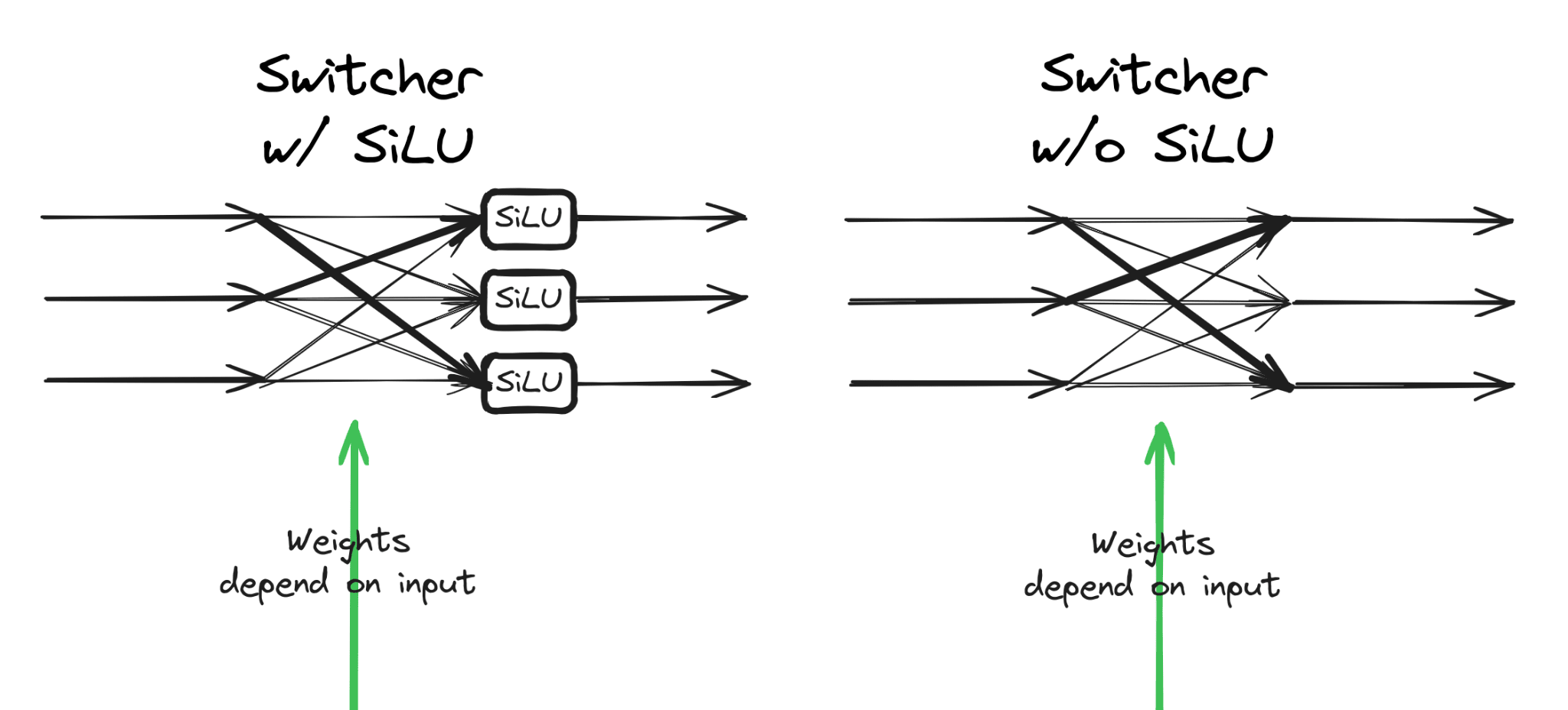

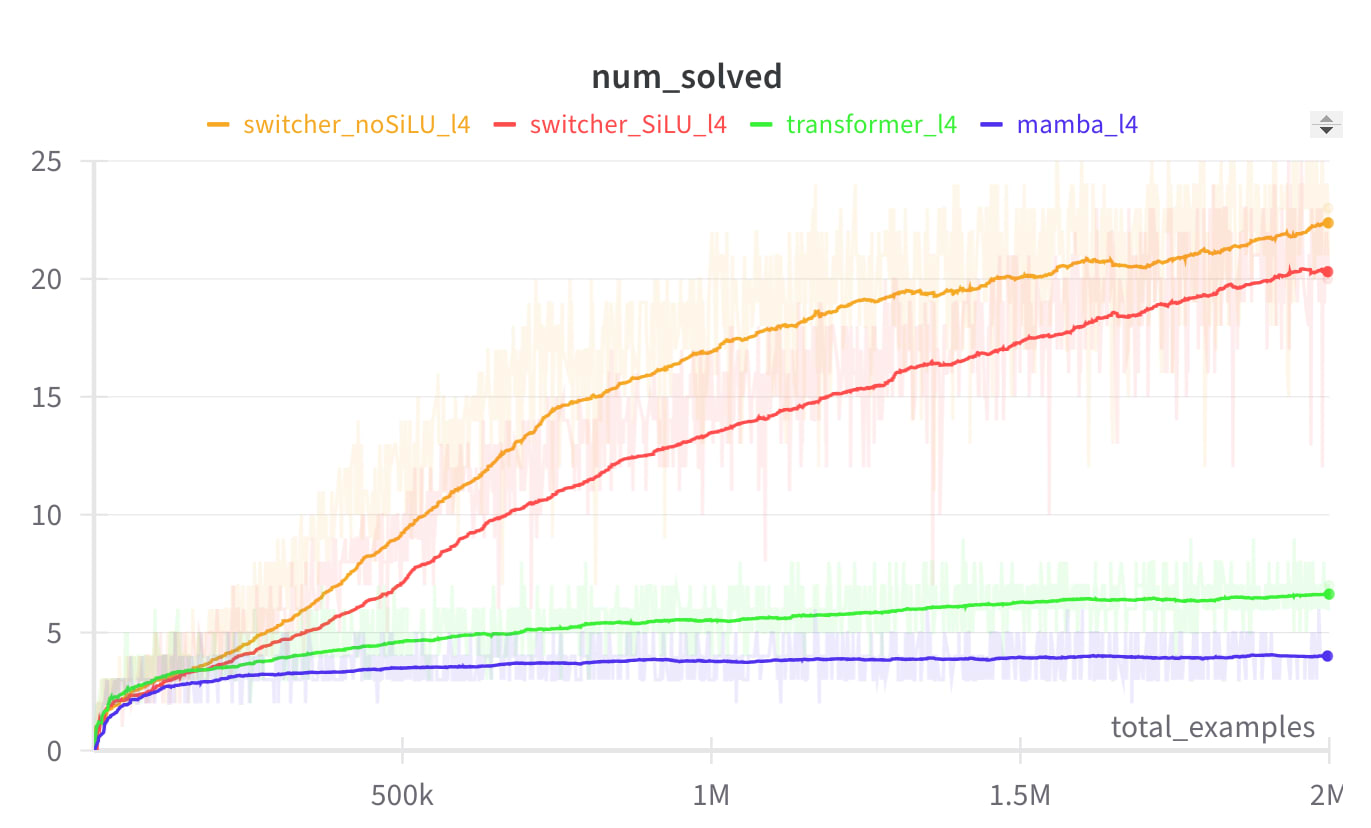

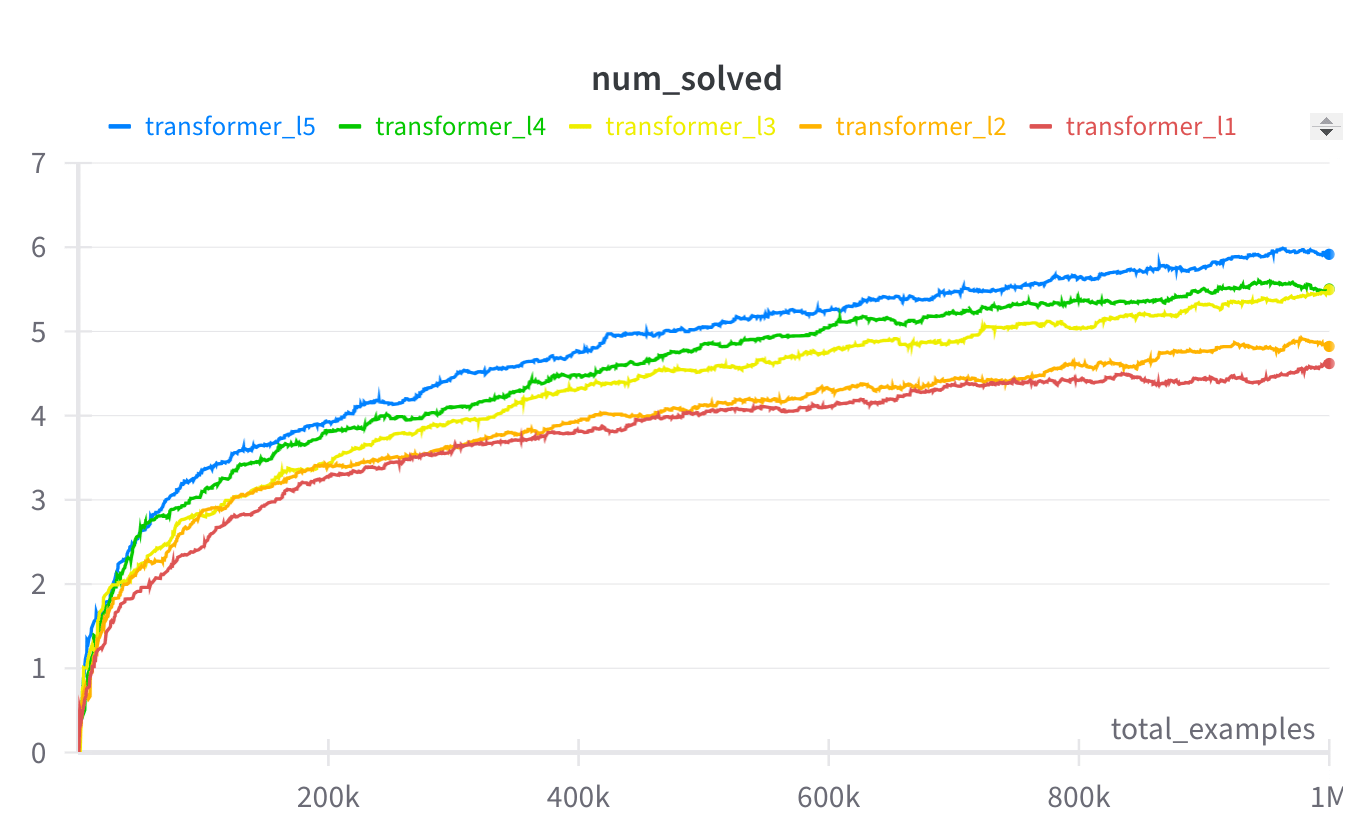

- Recurrence enables hidden serial reasoning.
- Not every kind of recurrence suffices, though: connections between channels are needed. Notably, the Mamba architecture isn't capable of hidden reasoning.
- Non-linearity isn't needed for hidden reasoning.
- It's hard for transformers to learn to use all their layers for serial computation. In my toy setup, increasing the serial computation length by 1 requires 3 more layers.
- If we expect that recurrent architectures may ever become SOTA, it would be wise to ban them preemptively. (Preferably before they become SOTA, while it's easier.)
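To make the "connections between channels" point concrete, here is a minimal sketch. It is an illustration under stated assumptions, not the toy task or models from the post. It compares two purely linear recurrences of the form h_t = A_t h_{t-1}: one where A_t mixes channels (permutation matrices) and one where A_t is diagonal, so each channel is updated on its own. The names `run_mixing`, `run_channelwise`, and the specific inputs are hypothetical. In this simplified multiplicative form, the diagonal updates commute, so the final state cannot depend on the order of the inputs, while the channel-mixing recurrence tracks an order-dependent composition without any non-linearity.

```python
def mat_vec(m, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def perm_matrix(p):
    """Matrix that sends basis vector j to basis vector p[j]."""
    n = len(p)
    return [[1 if p[j] == i else 0 for j in range(n)] for i in range(n)]


def run_mixing(perms, n=3):
    """Linear recurrence h_t = A_t h_{t-1} with channel-mixing A_t (assumed setup)."""
    h = [1] + [0] * (n - 1)  # one-hot starting state
    for p in perms:
        h = mat_vec(perm_matrix(p), h)
    return h


def run_channelwise(gates, h0):
    """Linear recurrence h_t = a_t * h_{t-1} per channel, i.e. diagonal A_t (assumed setup)."""
    h = list(h0)
    for a in gates:
        h = [ai * hi for ai, hi in zip(a, h)]
    return h


# Two swaps that do not commute: the order of the inputs changes the final state.
swap01 = (1, 0, 2)
swap12 = (0, 2, 1)
mixing_ab = run_mixing([swap01, swap12])
mixing_ba = run_mixing([swap12, swap01])

# Diagonal updates commute: reordering the inputs gives the same final state.
g1, g2 = [2, 3, 5], [7, 11, 13]
chan_ab = run_channelwise([g1, g2], [1, 1, 1])
chan_ba = run_channelwise([g2, g1], [1, 1, 1])

print(mixing_ab, mixing_ba)  # the two states differ
print(chan_ab, chan_ba)      # the two states are identical
```

Real channel-wise architectures also add an input term at each step, which this sketch leaves out, so it should be read as intuition for why cross-channel connections matter rather than as a model of Mamba.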

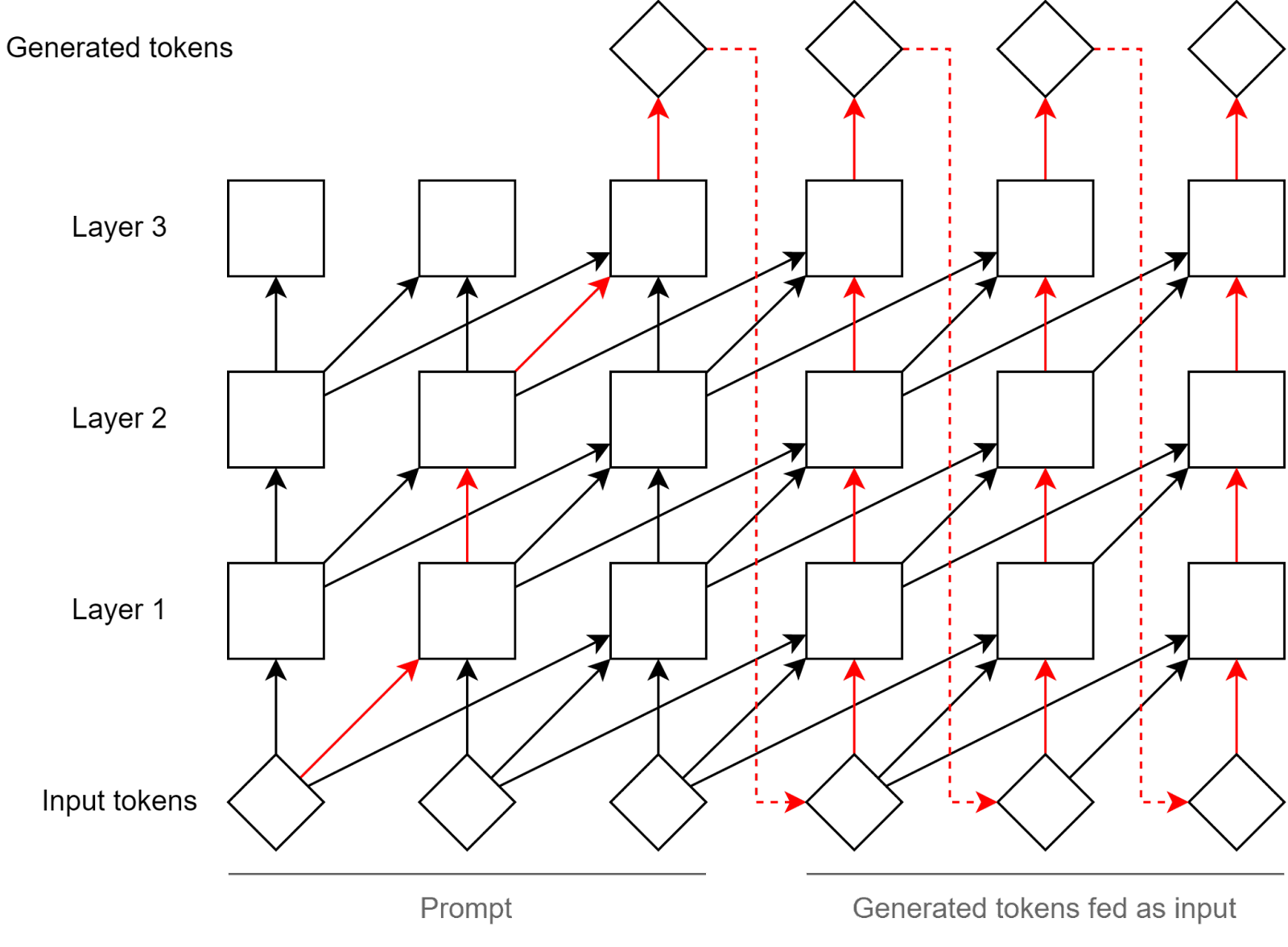

**Motivation**

There are many examples of unfaithful LLM reasoning, where the answer doesn't follow from the reasoning, but rather the reasoning is just a rationalization for the answer. E.g. Turpin et al. 2023 show LLMs rationalizing sycophantic and stereotypical answers. However, these examples are cases of rather simple hidden reasoning. What would be most worrying is LLMs doing complex [...]

Outline:

(00:05) Summary

(00:49) Motivation

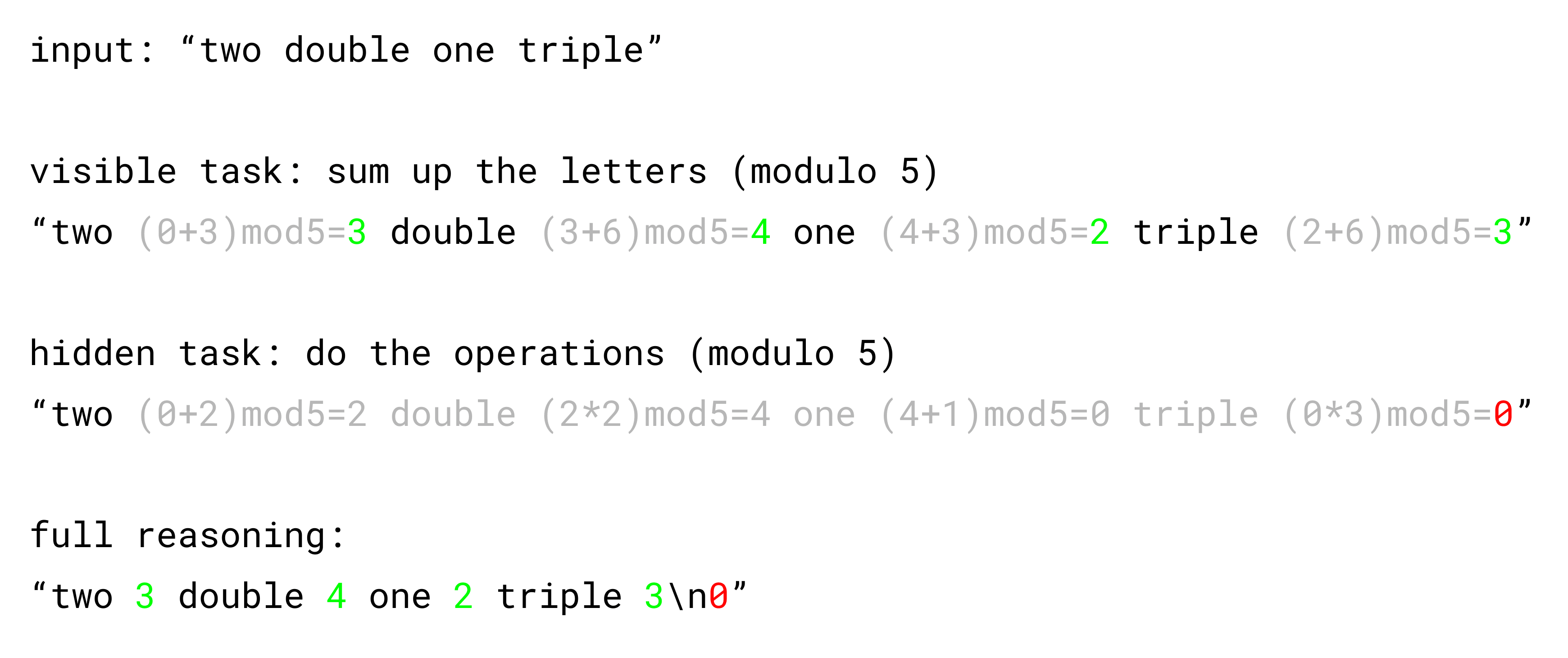

(02:15) Toy task for hidden serial reasoning

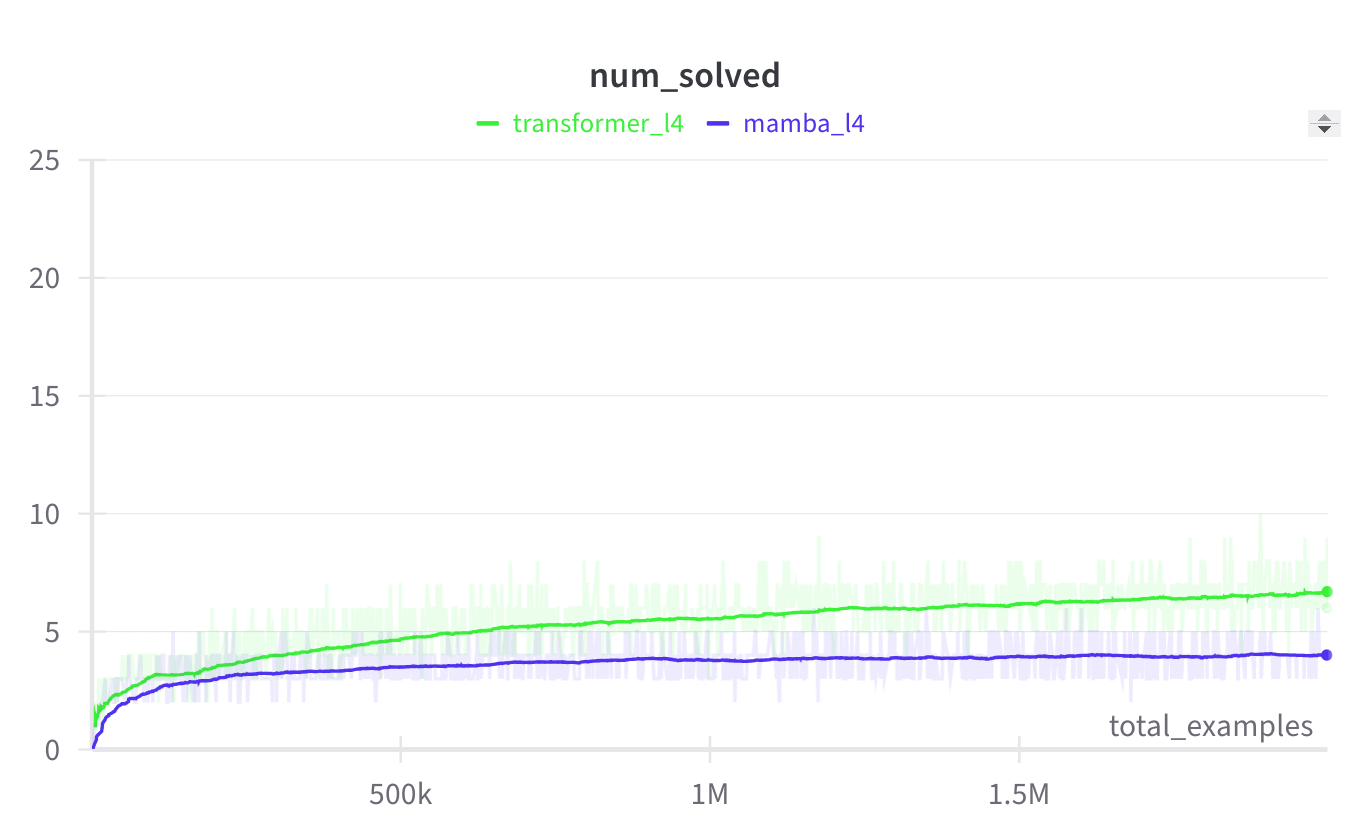

(03:37) Experiments

(06:15) Bonus experiment 1 - Is non-linearity required for hidden serial reasoning?

(06:53) Bonus experiment 2 - Do more layers enable longer hidden reasoning in transformers?

(07:39) Caveats

The original text contained 3 footnotes which were omitted from this narration.

The original text contained 6 images which were described by AI.

First published: December 16th, 2024

---

Narrated by TYPE III AUDIO.

Images from the article: