Simplifying Transformer Blocks without Sacrificing Efficiency

2024/6/19

Machine Learning Tech Brief By HackerNoon

Frequently requested episodes will be transcribed first

Shownotes Transcript

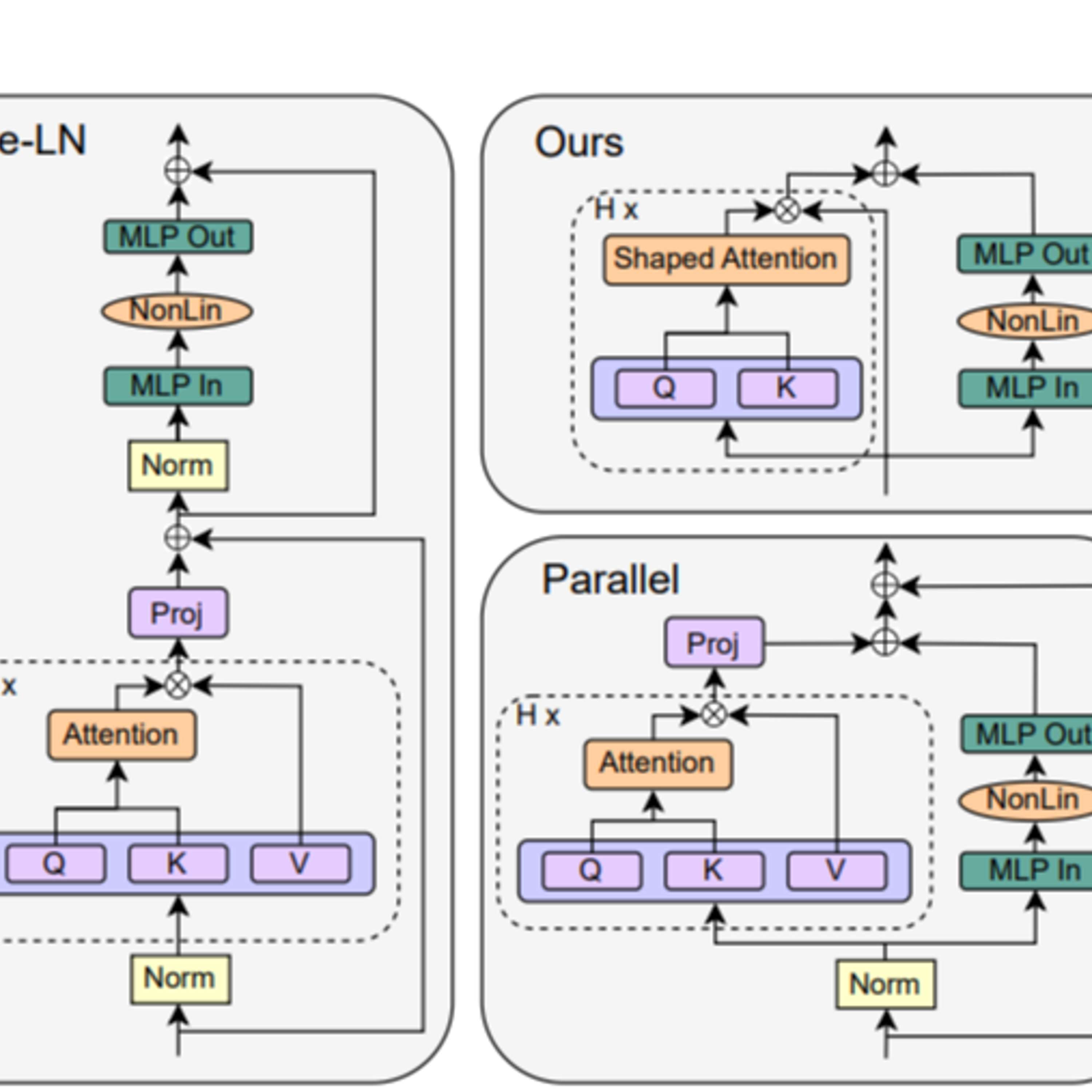

This story was originally published on HackerNoon at: https://hackernoon.com/simplifying-transformer-blocks-without-sacrificing-efficiency). Learn how simplified transformer blocks achieve 15% faster training throughput without compromising performance in deep learning models. Check more stories related to machine-learning at: https://hackernoon.com/c/machine-learning). You can also check exclusive content about #deep-learning), #transformer-architecture), #simplified-transformer-blocks), #neural-network-efficiency), #deep-transformers), #signal-propagation-theory), #neural-network-architecture), #hackernoon-top-story), and more.

This story was written by: [@autoencoder](https://hackernoon.com/u/autoencoder)). Learn more about this writer by checking [@autoencoder's](https://hackernoon.com/about/autoencoder)) about page,

and for more stories, please visit [hackernoon.com](https://hackernoon.com)).

This study simplifies transformer blocks by removing non-essential components, resulting in 15% faster training throughput and 15% fewer parameters while maintaining performance.