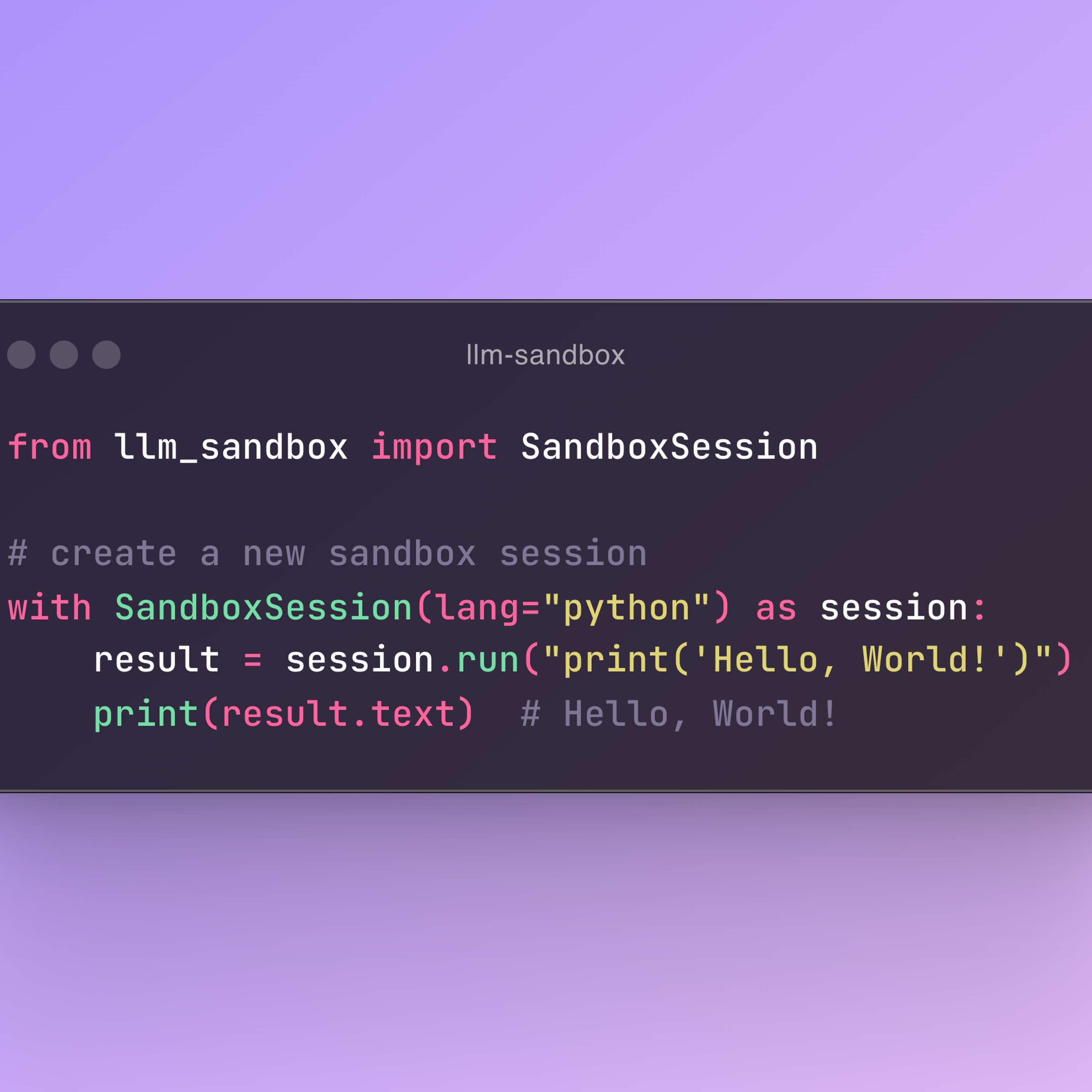

Introducing LLM Sandbox: Securely Execute LLM-Generated Code with Ease

2024/7/13

Machine Learning Tech Brief By HackerNoon

This story was originally published on HackerNoon at: https://hackernoon.com/introducing-llm-sandbox-securely-execute-llm-generated-code-with-ease. LLM Sandbox: a secure, isolated environment to run LLM-generated code using Docker. Ideal for AI researchers, developers, and hobbyists. Check more stories related to machine-learning at: https://hackernoon.com/c/machine-learning. You can also check exclusive content about #llm, #langchain, #llamaindex, #ai-agent, #llm-sandbox, #ai-development, #ai-tools, #code-interpreter, and more.

This story was written by: [@vndee](https://hackernoon.com/u/vndee). Learn more about this writer by checking [@vndee's](https://hackernoon.com/about/vndee) about page, and for more stories, please visit [hackernoon.com](https://hackernoon.com).

LLM Sandbox is a lightweight, portable environment for running LLM-generated code securely and in isolation inside Docker containers. Its easy-to-use interface lets you set up, manage, and execute code within a controlled Docker environment. It supports multiple programming languages, including Python, Java, JavaScript, C++, Go, and Ruby.
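To make the idea of container-based isolation concrete, here is a minimal sketch that shells out to the standard `docker run` CLI. It is not LLM Sandbox's own API: the `RUNTIMES` table, the function names `build_docker_command` and `run_in_container`, the image tags, and the resource limits are all assumptions chosen for illustration.

```python
import shutil
import subprocess

# Hypothetical mapping from language to (Docker image, command prefix).
# These images and flags are illustrative choices, not LLM Sandbox defaults.
RUNTIMES = {
    "python": ("python:3.11-slim", ["python", "-c"]),
    "javascript": ("node:20-slim", ["node", "-e"]),
    "ruby": ("ruby:3.3-slim", ["ruby", "-e"]),
}


def build_docker_command(code, lang="python", memory="256m"):
    """Build a `docker run` argv that executes `code` in a throwaway container."""
    image, runner = RUNTIMES[lang]
    return [
        "docker", "run",
        "--rm",               # delete the container once it exits
        "--network", "none",  # no network access for the untrusted code
        "--memory", memory,   # cap the container's memory
        image, *runner, code,
    ]


def run_in_container(code, lang="python", timeout=30):
    """Run `code` in a container and return its stdout (needs a local Docker daemon)."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    completed = subprocess.run(
        build_docker_command(code, lang),
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return completed.stdout


if __name__ == "__main__":
    # Only print the command here; call run_in_container() where Docker is available.
    print(build_docker_command("print(2 + 2)"))
```

Passing the generated code as a single argv element avoids shell interpolation on the host, and `--rm` with `--network none` keeps each run disposable and offline. A real tool would likely add more on top of this, such as dependency installation or file copying, which this sketch leaves out.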