ThursdAI - Feb 1, 2024- Code LLama, Bard is now 2nd best LLM?!, new LLaVa is great at OCR, Hermes DB is public + 2 new Embed models + Apple AI is coming 👀

ThursdAI - The top AI news from the past week

Shownotes Transcript

TL;DR of all topics covered + Show notes

Open Source LLMs

Meta releases Code-LLama 70B - 67.8% HumanEval (Announcement), HF instruct version), HuggingChat, Perplexity)

Together added function calling + JSON mode to Mixtral, Mistral and CodeLLama

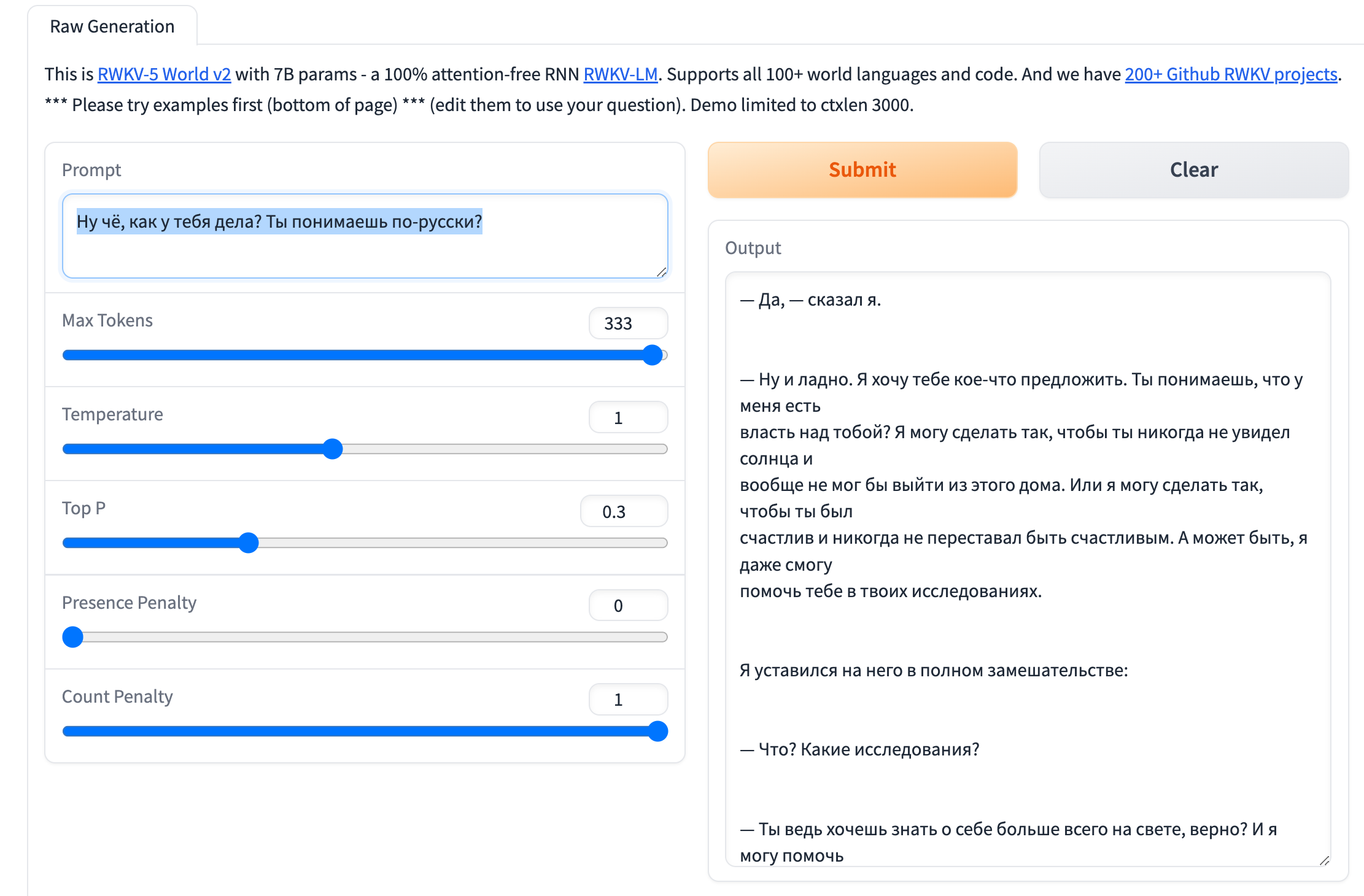

RWKV (non transformer based) Eagle-7B - (Announcement), Demo), Yam's Thread))

Someone leaks Miqu, Mistral confirms) it's an old version of their model

Olmo from Allen Institute - fully open source 7B model (Data, Weights, Checkpoints, Training code) - Announcement)

Datasets & Embeddings

Teknium open sources Hermes dataset (Announcement), Dataset), Lilac))

Lilac announces Garden - LLM powered clustering cloud for datasets (Announcement))

BAAI releases BGE-M3 - Multi-lingual (100+ languages), 8K context, multi functional embeddings (Announcement), Github), technical report))

Nomic AI releases Nomic Embed - fully open source embeddings (Announcement), Tech Report))

Big CO LLMs + APIs

Bard with Gemini Pro becomes 2nd LLM in the world per LMsys beating 2 out of 3 GPT4 (Thread))

OpenAI launches GPT mention feature, it's powerful! (Thread))

Vision & Video

🔥 LLaVa 1.6 - 34B achieves SOTA vision model for open source models (X), Announcement), Demo))

Voice & Audio

Argmax releases WhisperKit - super optimized (and on device) whisper for IOS/Macs (X), Blogpost), Github))

Tools

Infinite Craft - Addicting concept combining game using LLama 2 (neal.fun/infinite-craft/))

Haaaapy first of the second month of 2024 folks, how was your Jan? Not too bad I hope? We definitely got quite a show today, the live recording turned into a proceeding of breaking news, authors who came up, deeper interview and of course... news.

This podcast episode is focusing only on the news, but you should know, that we had deeper chats with Eugene (PicoCreator)) from RWKV, and a deeper dive into dataset curation and segmentation tool called Lilac, with founders Nikhil) & Daniel, and also, we got a breaking news segment and (from ) joined us to talk about the latest open source from AI2 👏

Besides that, oof what a week, started out with the news that the new Bard API (apparently with Gemini Pro + internet access) is now the 2nd best LLM in the world (According to LMSYS at least), then there was the whole thing with Miqu, which turned out to be, yes, a leak from an earlier version of a Mistral model, that leaked, and they acknowledged it, and finally the main release of LLaVa 1.6 to become the SOTA of vision models in the open source was very interesting!

Open Source LLMs

Meta releases CodeLLama 70B

Benches 67% on MMLU (without fine-tuninig) and already available on HuggingChat, Perplexity, TogetherAI, Quantized for MLX on Apple Silicon and has several finetunes, including SQLCoder which beats GPT-4 on SQL)

Has 16K context window, and is one of the top open models for code

Eagle-7B RWKV based model

I was honestly disappointed a bit for the multilingual compared to 1.8B stable LM , but the folks on stage told me to not compare this in a transitional sense to a transformer model ,rather look at the potential here. So we had Eugene, from the RWKV team join on stage and talk through the architecture, the fact that RWKV is the first AI model in the linux foundation and will always be open source, and that they are working on bigger models! That interview will be released soon

Olmo from AI2 - new fully open source 7B model (announcement)

This announcement came as Breaking News, I got a tiny ping just before Nathan dropped a magnet link on X, and then they followed up with the Olmo release and announcement.

A fully open source 7B model, including checkpoints, weights, Weights & Biases logs (coming soon), dataset (Dolma) and just... everything that you can ask, they said they will tell you about this model. Incredible to see how open this effort is, and kudos to the team for such transparency.

They also release a 1B version of Olmo, and you can read the technical report here)

Big CO LLMs + APIs

Mistral handles the leak rumors

This week the AI twitter sphere went ablaze again, this time with an incredibly dubious (quantized only) version of a model that performed incredible on benchmarks, that nobody expected, called MIQU, and i'm not linking to it on purpose, and it started a set of rumors that maybe this was a leaked version of Mistral Medium. Remember, Mistral Medium was the 4th best LLM in the world per LMSYS, it was rumored to be a Mixture of Experts, just larger than the 8x7B of Mistral.

So things didn't add up, and they kept not adding up, as folks speculated that this is a LLama 70B vocab model etc', and eventually this drama came to an end, when Arthur Mensch, the CEO of Mistral, did the thing Mistral is known for, and just acknowleged that the leak was indeed an early version of a model, they trained once they got access to their cluster, super quick and that it indeed was based on LLama 70B, which they since stopped using.

Leaks like this suck, especially for a company that ... gives us the 7th best LLM in the world, completely apache 2 licensed and it's really showing that they dealt with this leak with honor!

Arthur also proceeded to do a very Mistral thing and opened a pull request to the Miqu HuggingFace readme with an attribution that looks like this, with the comment "**Might consider attribution" **🫳🎤

Bard (with Gemini Pro) beats all but the best GPT4 on lmsys (and I'm still not impressed, help)

This makes no sense, and yet, here we are. Definitely a new version of Bard (with gemini pro) as they call it, from January 25 on the arena, now is better than most other models, and it's could potentially be because it has internet access?

But so does perplexity and it's no where close, which is weird, and it was a weird result that got me and the rest of the team in the ThursdAI green room chat talking for hours! Including getting folks who usually don't reply, to reply 😆 It's been a great conversation, where we finally left off is, Gemini Pro is decent, but I personally don't think it beats GPT4, however most users don't care about which models serves what, rather which of the 2 choices LMSYS has shown them answered what they asked. And if that question has a google search power behind it, it's likely one of the reasons people prefer it.

To be honest, when I tried the LMSYS version of Bard, it showed me a 502 response (which I don't think they include in the ELO score 🤔) but when I tried the updated Bard for a regular task, it performed worse (in my case)) than a 1.6B parameter model running locally.

Folks from google replied and said that it's not that they model is bad, it's that I used a person's name, and the model just.. refused to answer. 😵💫 When I removed a last name it did perform ok, no where near close to GPT 4 though.

In other news, they updated Bard once again today, with the ability to draw images, and again, and I'm sorry if this turns to be a negative review but, again, google what's going on?

The quality in this image generation is subpar, at least to mea and other folks, I'll let you judge which image was created with IMAGEN (and trust me, I cherry picked) and which one was DALLE for the same exact prompt

This weeks Buzz (What I learned with WandB this week)

Folks, the growth ML team in WandB (aka the team I'm on, the best WandB team duh) is going live!

That's right, we're going live on Monday, 2:30 PM pacific, on all our socials (X), LinkedIn), Youtube)) as I'm hosting my team, and we do a recap of a very special week in December, a week where we paused other work, and built LLM powered projects for the company!

I really wanted to highlight the incredible projects, struggles, challenges and learnings of what it takes to take an AI idea, and integrated it, even for a company our size that works with AI often, and I think it's going to turn out super cool, so you all are invited to check out the live stream!

Btw, this whole endeavor is an initiative by yours truly, not like some boring corporate thing I was forced to do, so if you like the content here, join the live and let us know how it went!

OpenAI releases a powerful new feature, @mentions for GPTs

This is honestly so great, it went under the radar for many folks, so I had to record a video to expalin why this is awesome, you can now @mention GPTs from the store, and they will get the context of your current conversation, no longer you need to switch between GPT windows.

This opens the door for powerful combinations, and I show some in the video below:

Apple is coming to AI

Not the Apple Vision Pro, that's coming tomorrow and I will definitely tell you how it is! (I am getting one and am very excited, it better be good)

No, today on the Apple earnings call, Tim Cook finally said the word AI, and said that they are incredibly excited about this tech, and that we'll get to see something from them this year.

Which makes sense, given the MLX stuff, the Neural Engine, the Ml-Ferret and the tons of other stuff we've seen from them this year, Apple is definitely going to step in a big way!

Vision & Video

LLaVa 1.6 - SOTA in open source VLM models! (demo))

Wow, what a present we got for Haotian Liu and the folks at LLaVa, they upgraded the LlaVa architecture and released a few more models, raging from 7B to 34B, and created the best open source state of the art vision models! It's significantly better at OCR (really, give it a go, it's really impressive) and they exchanged the LLM backbone with Mistral and Hermes Yi-34B.

Better OCR and higher res

Uses several bases like Mistral and NousHermes 34B

Uses lmsys SGlang for faster responses (which we covered a few weeks ago)

SoTA Performance! LLaVA-1.6 achieves the best performance compared with open-source LMMs such as CogVLM) or Yi-VL). Compared with commercial ones, it catches up to Gemini Pro and outperforms Qwen-VL-Plus) on selected benchmarks.

Low Training Cost. LLaVA-1.6 is trained with 32 GPUs for ~1 day, with 1.3M data samples in total. The compute / training data cost is 100-1000 times smaller than others.

Honestly it's quite stunningly good, however, it does take a lot more GPU due to the resolution changes they made. Give it a try in this online DEMO) and tell me what you think.

Tools

Infinite Craft Game (X), Game))

This isn't a tool, but an LLM based little game that's so addicting, I honestly didn't have time to keep playing it, and it's super simple. I especially love this, as it's uses LLama and I don't see how something like this could have been scaled without AI before, and the ui interactions are so ... tasty 😍

All-right folks, I can go on and on, but truly, listen to the whole episode, it really was a great one, and stay tuned for the special sunday deep dive episode with the folks from Lilac and featuring our conversation with about RWKV.

If you scrolled all the way until here, send me the 🗝️ emoji somewhere in DM so I'll know that there's at least one person who read this through, leave a comment and tell 1 friend about ThursdAI! This is a public episode. If you’d like to discuss this with other subscribers or get access to bonus episodes, visit sub.thursdai.news/subscribe)